DeepMind's Genie 2 generates playable 3D worlds from single images

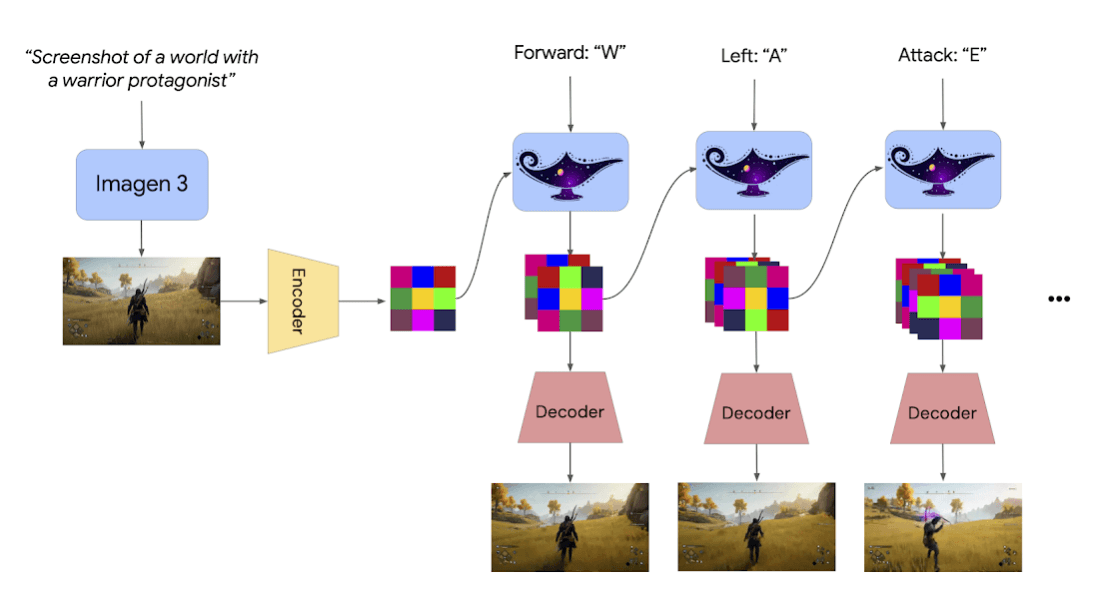

DeepMind has introduced Genie 2, an AI system that transforms single images into interactive 3D environments that users can explore for up to one minute.

The company calls Genie 2 a "Foundation World Model" and says it maintains consistency across the generated spaces. The system, trained on an extensive video dataset, produces environments in 720p resolution that users can navigate with a keyboard and mouse in both first- and third-person views.

According to DeepMind's researchers, Genie 2 simulates core physics, including gravity, collisions, and water movement. The system also manages complex lighting, reflections, and smoke effects.

DeepMind released a video showing these capabilities in action, using an undistilled base model at maximum quality. A distilled version allows real-time interaction, but runs at reduced visual quality.

Video: Google DeepMind

More consistent world generation

A key advancement in Genie 2 is its spatial memory: the system keeps track of areas that aren't currently visible to the user. When players return to previously visited locations, the environment remains consistent rather than being regenerated, addressing a common limitation of earlier 3D generators.

DeepMind suggests game developers could use Genie 2 to quickly create test environments from concept sketches or photographs. The system can transform basic drawings into fully realized 3D spaces with working physics and lighting systems.

The company also tested Genie 2 with its SIMA AI agent, which responds to natural language commands in digital environments. In one test, SIMA successfully navigated a Genie 2-generated room, following instructions such as "Open the blue door."

This combination of SIMA and Genie 2 could advance the development of AI systems that can perform complex tasks in both digital and physical spaces. SIMA learns from exploring digital training environments, while Genie 2 can generate unlimited training scenarios.

"When we started Genie 1 over two years ago, we always imagined a foundation world model will one day be able to generate an endless curriculum for training embodied AGI. Today, we made a big step towards that future," writes DeepMind researcher Tim Rocktäschel on Bluesky.
