DeepThought-8B: Ruliad publishes open-source reasoning model

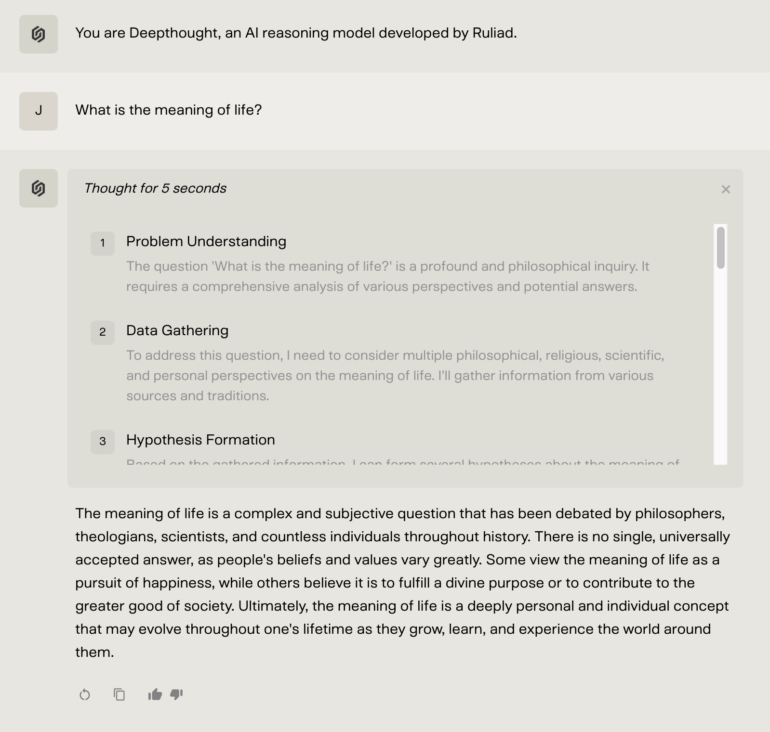

Ruliad, an AI startup, has introduced a new language model called DeepThought-8B that displays its reasoning process in a structured format. Despite the model's small size, Ruliad claims it matches the capabilities of much larger competitors.

The new AI model documents its reasoning step by step in a structured JSON format. According to its developers, this makes the model's decision-making more transparent and controllable.

Like OpenAI's o1, DeepThought-8B shows both how long it thinks and its individual reasoning steps. The model builds on the Llama-3.1 8B language model and runs locally on graphics cards with at least 16GB of memory. Unlike its base model, however, DeepThought-8B primarily solves problems in multiple steps, which the team calls a "Reasoning Chain", essentially a chain-of-thought sequence. These steps are output in machine-readable JSON, similar to OpenAI's "Structured Outputs."

```json
{
  "step": 1,
  "type": "problem_understanding",
  "thought": "The user is asking how many Rs there are in the word 'strawberry'"
}
```

Example of structured DeepThought output
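Because each step is plain JSON, a client can consume the chain with ordinary tooling. The Python sketch below parses a chain of step objects like the one above. It assumes the model emits the chain as a single JSON array; only the `step`, `type`, and `thought` fields come from Ruliad's example, while the second step and its `answer` type are hypothetical placeholders rather than the documented schema.

```python
import json
from dataclasses import dataclass


@dataclass
class ReasoningStep:
    step: int
    type: str
    thought: str


def parse_chain(raw: str) -> list[ReasoningStep]:
    """Parse a JSON array of step objects into ReasoningStep records.

    Assumption: the whole chain arrives as one JSON array. Extra keys are
    ignored, since the real output may carry fields not shown in the example.
    """
    steps = [
        ReasoningStep(step=int(obj["step"]), type=obj["type"], thought=obj["thought"])
        for obj in json.loads(raw)
    ]
    # Return the chain in step order, even if objects arrive out of order.
    return sorted(steps, key=lambda s: s.step)


# Hypothetical two-step chain; the "answer" step is invented for illustration.
raw_chain = """[
  {"step": 1, "type": "problem_understanding",
   "thought": "The user is asking how many Rs there are in the word 'strawberry'"},
  {"step": 2, "type": "answer",
   "thought": "'strawberry' contains 3 Rs"}
]"""

for s in parse_chain(raw_chain):
    print(f"{s.step}. [{s.type}] {s.thought}")
```

Mapping each step to a typed record makes it easy to filter a chain by step type or display it as a numbered list.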

A key feature is the ability to modify these reasoning chains through three kinds of "injections": Scripted, Max Routing, and Thought Routing. Scripted lets users define specific reasoning points in advance. Max Routing lets users set the maximum number of thinking steps and determine how DeepThought-8B concludes its chain of thought. Thought Routing sets up if/then rules that trigger dynamically as the chat progresses.
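The three injection modes are easier to compare in a small simulation. The Python sketch below is a hypothetical illustration, not Ruliad's API: `run_chain`, `Rule`, and `toy_model` are invented names, and the toy model stands in for the LLM by proposing a generic analysis step each turn. Scripted steps are emitted first, Thought Routing rules are checked after each model step, and Max Routing caps the chain length and fixes the final step.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical model interface: given the chain so far, return (type, thought) or None to stop.
NextStep = Callable[[list[dict]], Optional[tuple[str, str]]]


@dataclass
class Rule:
    """Thought Routing stand-in: if `condition(last_step)` holds, inject `thought`."""
    condition: Callable[[dict], bool]
    thought: str


def run_chain(next_step: NextStep, scripted: list[str], max_steps: int,
              rules: list[Rule], conclusion: str) -> list[dict]:
    if max_steps <= len(scripted):
        raise ValueError("max_steps must leave room for the concluding step")
    chain: list[dict] = []

    def add(kind: str, thought: str) -> None:
        chain.append({"step": len(chain) + 1, "type": kind, "thought": thought})

    # Scripted: reasoning points fixed in advance come first.
    for thought in scripted:
        add("scripted", thought)

    # Free reasoning, capped so one slot remains for the conclusion (Max Routing).
    while len(chain) < max_steps - 1:
        proposal = next_step(chain)
        if proposal is None:  # the stand-in model decided it is done
            break
        add(*proposal)
        last = chain[-1]
        # Thought Routing: if/then rules checked against the newest model step.
        for rule in rules:
            if len(chain) < max_steps - 1 and rule.condition(last):
                add("injected", rule.thought)

    # Max Routing: the user decides how the chain ends.
    add("conclusion", conclusion)
    return chain


def toy_model(chain: list[dict]) -> Optional[tuple[str, str]]:
    """Toy stand-in for the LLM: always proposes another analysis step."""
    return ("analysis", f"analysis step after {len(chain)} prior steps")


chain = run_chain(
    toy_model,
    scripted=["Restate the question: count the Rs in 'strawberry'"],
    max_steps=4,
    rules=[Rule(lambda s: s["type"] == "analysis",
                "Double-check by spelling the word letter by letter")],
    conclusion="Answer with a single number",
)
for s in chain:
    print(s["step"], s["type"], s["thought"])
```

Reserving the last slot for the conclusion guarantees that the chain always ends the way the user specified, even when the step cap cuts free reasoning short.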

Demo video for "Thought Routing" from DeepThought-8B. | Video: Ruliad

Ruliad relies on test-time compute

Ruliad says the model can adjust its analysis depth to the complexity of a task, using what the company calls "Test-time Compute Scaling." The approach aims to improve language model performance by spending more computing power during inference. OpenAI's o1 uses a similar approach but likely differs in its use of reinforcement learning during training and internal chains of thought. OpenAI hasn't disclosed exactly how o1 was trained or how it works.

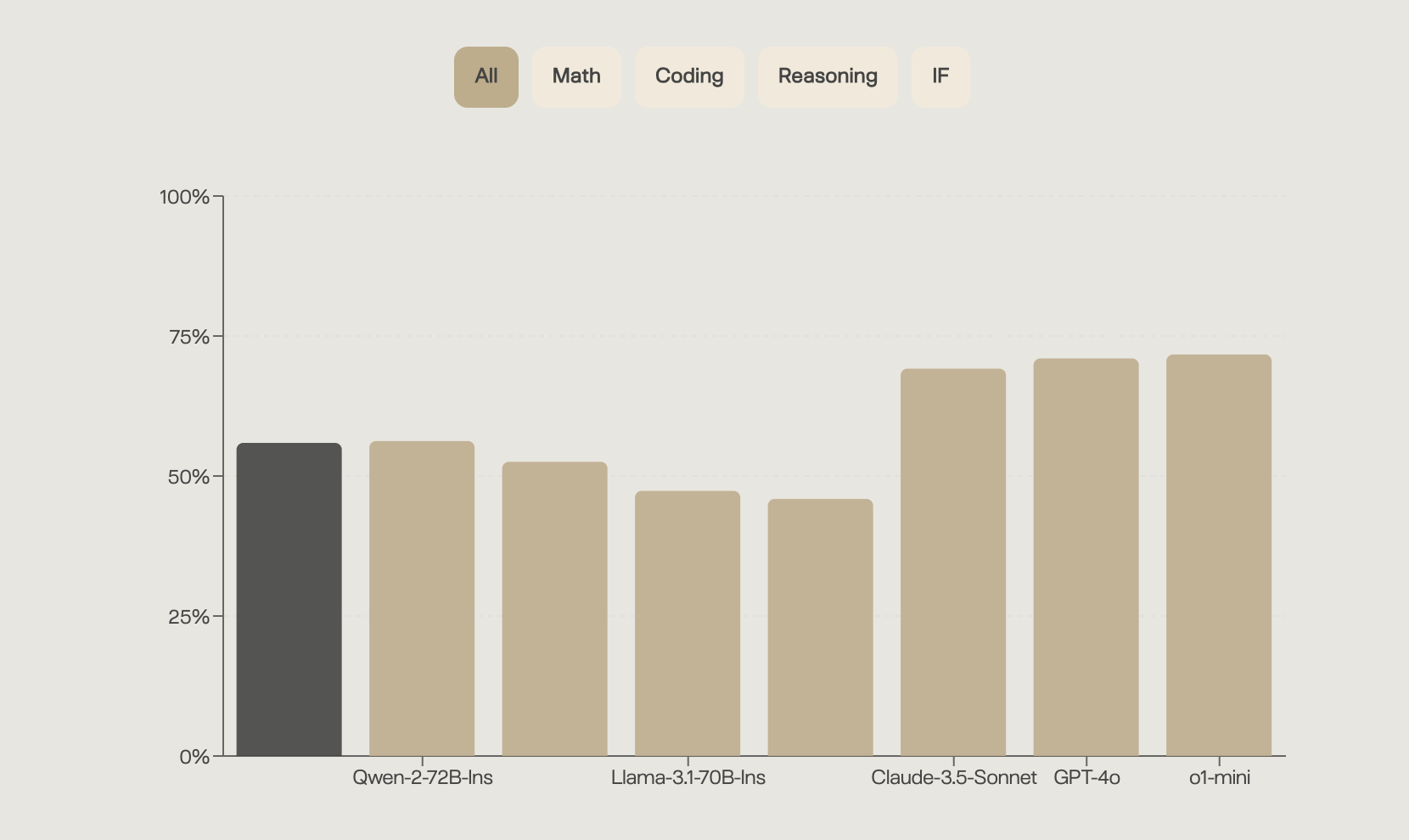

The company says DeepThought-8B achieves competitive results on reasoning, mathematics, and programming benchmarks despite its relatively small size. Across various benchmarks, it performs similarly to much larger models such as Qwen-2-72B and Llama-3.1-70B, though it falls short of Claude 3.5 Sonnet, GPT-4o, and o1-mini.

The team acknowledges limitations in complex mathematical reasoning, long-context processing, and edge-case handling. Ruliad has released the model weights as open source on Hugging Face. A developer API, currently in closed beta, is planned for the coming weeks. Meanwhile, users can try DeepThought-8B for free at chat.ruliad.co after signing in with Google.

Other companies have also released reasoning models recently, including DeepSeek-R1 and Qwen QwQ.
