FTC investigates OpenAI for ChatGPT spreading misinformation about individuals

The FTC is investigating OpenAI's ChatGPT over concerns about its handling of user data and the spread of misinformation about individuals, according to The Washington Post and The New York Times.

In a detailed 20-page letter, the agency asked OpenAI a series of questions about potential risks to users and requested information about data security incidents and precautions taken before software updates.

The agency is also asking for more information about the chat history leak that occurred in March, and about how well ChatGPT users understand that the chatbot can generate false information.

Despite its human-like communication abilities, the AI lacks an understanding of the content it generates, which has led to instances of it creating harmful falsehoods about people. There are at least two publicly known cases where ChatGPT has said damaging and untrue things about individuals.

In one case, ChatGPT accused a law professor of a sexual assault he didn't commit. In the other, ChatGPT falsely claimed that an Australian mayor had gone to prison for bribery.

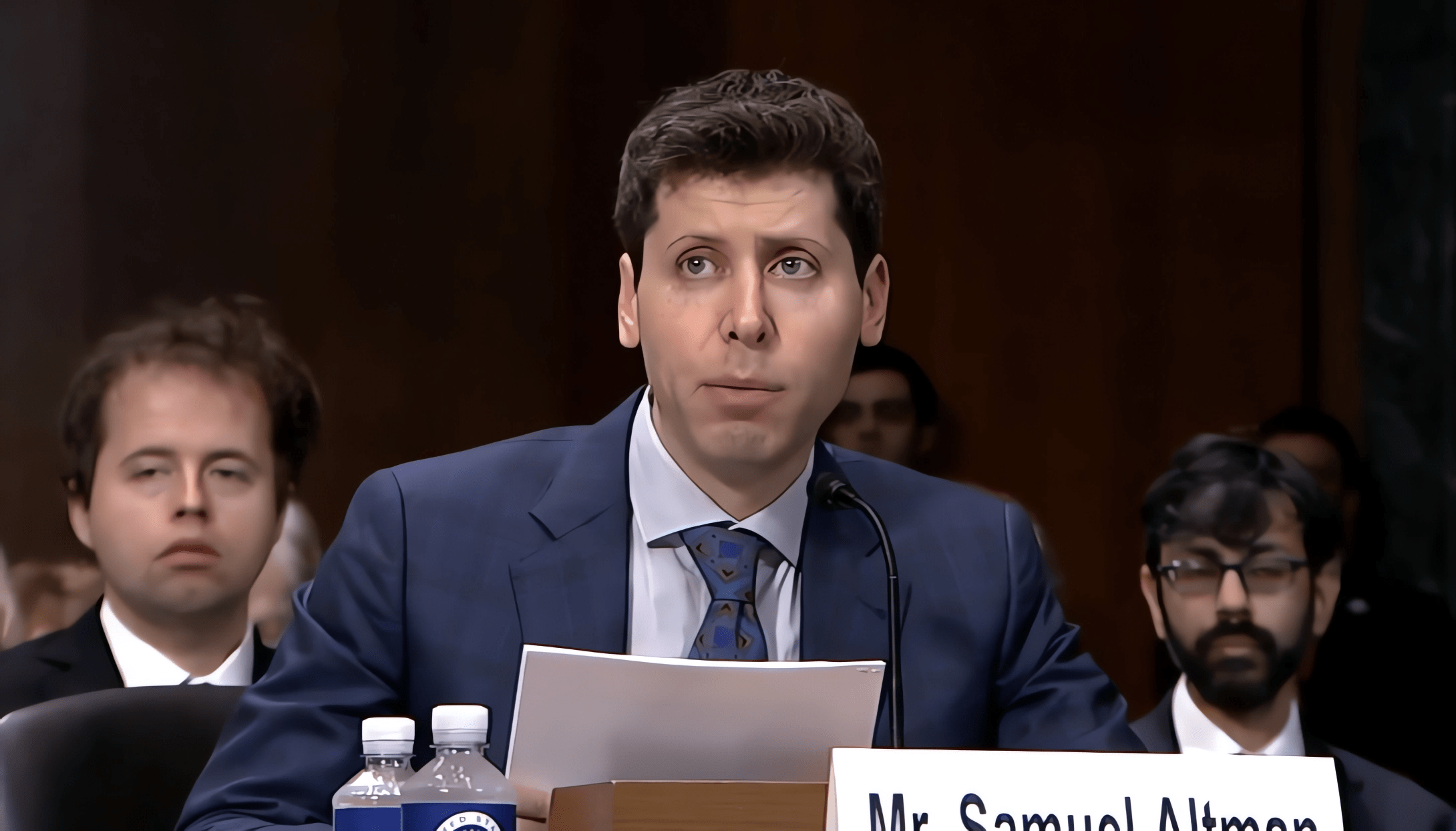

FTC investigation begins with a leak, to the disappointment of OpenAI CEO Sam Altman

The investigation marks the first significant regulatory challenge for OpenAI. The startup's co-founder, Sam Altman, previously welcomed AI legislation in congressional testimony, citing the industry's rapid growth and potential risks such as job losses and the spread of disinformation.

On Twitter, Altman reacts to the FTC's investigation, calling it disappointing that the inquiry "starts with a leak and does not help build trust."

Altman emphasizes that GPT-4 is built on "years of safety research," with an additional 6-month phase after initial training to optimize alignment and safety of the model.

"We protect user privacy and design our systems to learn about the world, not about individuals," Altman writes.
