Gen-1: Stable Diffusion startup introduces generative AI for video

Key Points

- New York-based startup Runway is one of the organizations behind Stable Diffusion. Runway specializes in AI video editing.

- With Gen-1, Runway is now introducing an AI model for video editing. It can, for example, use text prompts to replace objects in videos.

- Runway expects further advances in generative AI for video in the near future.

Runway's Gen-1 model allows you to visually edit existing video using text prompts.

Last year, New York-based AI video editing startup Runway helped launch Stable Diffusion, an open-source image AI, in partnership with Stability AI, LMU Munich, EleutherAI, and LAION.

Now it's introducing a new model: "Gen-1" can visually transform existing videos into new ones. A realistically filmed train door can be transformed into a cartoon-like train door with a simple text prompt.

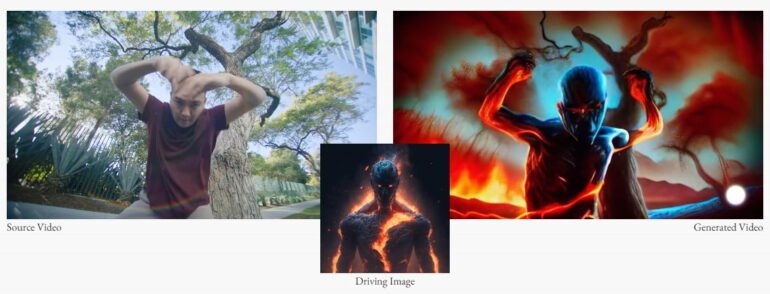

An actor in a video becomes a cartoon superhero. His transformation is based on an input image. The model can be refined with your own images for improved transformations.

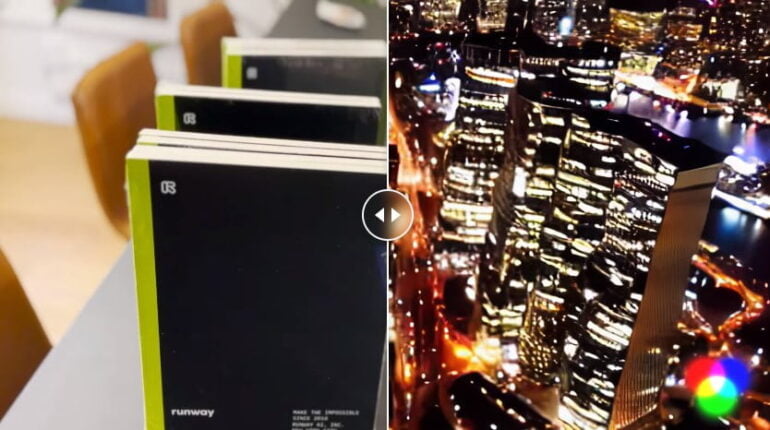

Even higher levels of abstraction are possible: from a few loosely assembled notebooks, Gen-1 can render a big city backdrop of skyscrapers via text command. "It's like filming something new, without filming anything at all," Runway writes.

Gen-1 can also isolate and modify objects in video, turning a golden retriever into a Dalmatian, and apply textures to untextured 3D objects. Textures are also created using a text prompt.

Runway expects rapid progress in AI video editing

AI-edited videos cannot yet compete with professionally edited videos. They contain image errors and distorted geometries, or simply look fake. But the project is still in its infancy.

"AI systems for image and video synthesis are quickly becoming more precise, realistic and controllable," the startup writes.

Given the tremendous progress that image-generating AI systems have made in recent years, it is easy to imagine systems like Gen-1 playing a major role in video processing within a few years.

Open source question still open

Stable Diffusion became famous largely because it is open source and freely available on the web. For those who know a bit about computers and like to configure software, it is a free and uncensored alternative to DALL-E 2 or Midjourney that can also be built into other applications.

According to Ian Sansavera, Runway's video workflow architect, the startup has not yet made a decision on the open source question for Gen-1. The software is still at "day zero," he said. Interested parties can sign up for a waiting list, and the scientific paper will be published soon. More information can be found on the project page.

Runway is likely to develop the model primarily for its own video software. The startup's AI-powered video editor aims to simplify and automate video editing. In the fall of 2022, Runway showed an integration of Stable Diffusion into its toolkit. The company was founded in early 2018 and has since raised about $100 million from investors.

In addition to Runway, Google is working on text-to-video AI systems that can edit videos and generate them from scratch. Its Dreamix model specializes in video editing via text prompts. Meta has also introduced a text-to-video model, Make-A-Video.
