Google demonstrates a method that lets large language models generate text significantly faster. In tests, the company cut compute time by almost 50 percent.

Large language models are used in various natural language processing tasks, such as translation or text generation. Models such as GPT-3, PaLM or LaMDA achieve impressive results and show that the performance of language models increases with their size.

However, such huge models are slow compared to smaller variants and require a lot of computation. Moreover, since they predict one word at a time and the prediction of one word must be completed before the model can predict the next, the generation of text cannot be parallelized.

Google's CALM grabs predictions from earlier layers

Google is now demonstrating Confident Adaptive Language Modeling (CALM), a method for speeding up text generation of large language models. The intuition behind the method is that predicting some words is easy, while others are hard. However, current language models use the same resources for each word in a sentence. CALM, on the other hand, dynamically distributes the computational resources used during text generation.

Video: Google

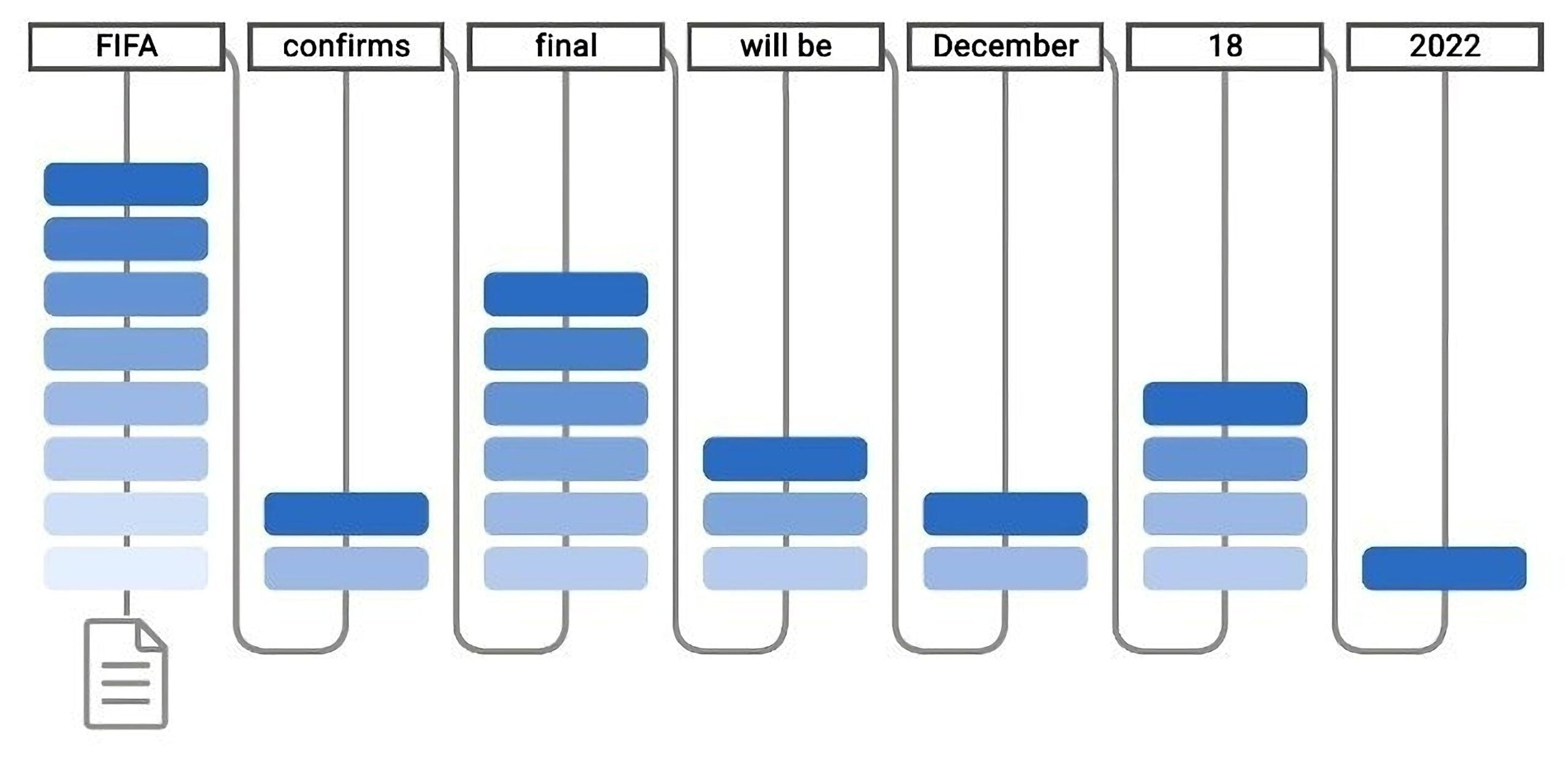

Language models rely on multiple transformer layers in which attention and feedforward modules modify internal representations of text. In the decoder, this process ultimately results in the prediction of the next word.

Instead of running every word through all layers, CALM measures the model's confidence in its prediction at earlier layers and uses the representation from that point if the confidence is high enough. If the confidence is too low, computation continues through the later layers as usual.
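The early-exit idea described above can be sketched in a few lines of Python. This is a toy illustration, not Google's implementation: the names `early_exit_predict`, `sharpen`, `readout` and `threshold` are hypothetical, the "layers" are plain functions on a list of numbers, and confidence is assumed to be the highest softmax probability, since the article does not say which measure CALM actually uses.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    hidden: Vector,
    layers: List[Layer],
    readout: Callable[[Vector], Vector],
    threshold: float,
) -> Tuple[int, int]:
    """Return (predicted token id, number of layers actually run).

    After each layer the intermediate state is mapped to token probabilities.
    Assumption: confidence is the top softmax probability. If it reaches the
    threshold, the remaining layers are skipped.
    """
    depth = 0
    probs = softmax(readout(hidden))
    for depth, layer in enumerate(layers, start=1):
        hidden = layer(hidden)
        probs = softmax(readout(hidden))
        if max(probs) >= threshold:
            break  # confident enough: exit early
    token = probs.index(max(probs))
    return token, depth


# Hypothetical toy model: each "layer" doubles the state, which makes the
# prediction sharper the deeper the computation goes.
def sharpen(h: Vector) -> Vector:
    return [2.0 * x for x in h]


def identity_readout(h: Vector) -> Vector:
    return h  # the state is used directly as logits over a 3-token vocabulary


layers = [sharpen] * 4
start = [1.0, 0.5, 0.0]

easy = early_exit_predict(start, layers, identity_readout, threshold=0.6)
hard = early_exit_predict(start, layers, identity_readout, threshold=0.9)
print(easy, hard)  # (0, 1) (0, 3)
```

With a low threshold the toy model stops after one layer, while a stricter threshold forces it through three of the four layers. The threshold is the knob that trades compute against the risk of exiting on a wrong prediction.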

CALM reduces compute time by almost 50 percent in tests

To test CALM, Google trains a T5 model and compares CALM's performance to a standard model. In doing so, the team shows that the method achieves high scores in various benchmarks for translation, summarization, and question answering while using significantly fewer layers per word on average. In practice, CALM on TPUs saves up to 50 percent of computation time while maintaining quality.

CALM allows faster text generation with LMs, without reducing the quality of the output text. This is achieved by dynamically modifying the amount of compute per generation timestep, allowing the model to exit the computational sequence early when confident enough.

As models grow in size, using them efficiently becomes central, Google said. CALM is a key contributor to this goal and can be combined with other well-known efficiency approaches such as distillation or sparsity, according to Google.