Google Cloud unveiled new models and features for Vertex AI at its Next conference, including a public preview of Gemini 1.5 Pro, its LLM with the largest context window to date, and "live images" in Imagen 2.0.

Gemini 1.5 Pro, now available in public preview in Vertex AI, offers a context window of up to one million tokens. That is five times larger than the 200K-token window of Claude 3, previously the largest commercially available context window. However, even context windows this large still show significant weaknesses in reliably processing all of the input information.

The large context window enables native multimodal inference over large amounts of data. Google says customers can use it to develop new use cases such as AI-powered customer service agents and academic tutors, analyze large collections of complex financial documents, identify documentation gaps, and explore entire code bases or natural language data collections.

Video: Google DeepMind

To improve the accuracy of model responses, Google is expanding Vertex AI's grounding capabilities. These include the ability to ground answers directly in Google Search results or in enterprise data, giving users access to current, high-quality information.

Grounding in specific data is also key to developing the next generation of AI agents that go beyond chat to proactively search for information and perform user tasks, the company said.

Google has also expanded Vertex AI's MLOps capabilities with a new prompt management service and evaluation tools for large models, both intended to help companies move from experimentation to production faster. In addition, customers using Gemini 1.0 Pro and Imagen can now restrict ML processing to the U.S. or the European Union.

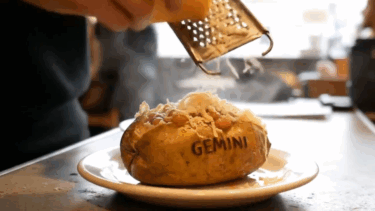

Imagen 2.0 animates images

The Imagen 2.0 family of image-generation models can now create short, four-second "live images" from prompts at 24 frames per second and a resolution of 360x640 pixels. According to Google, the feature is suited to subjects such as nature, food, and animals, and can produce a range of camera angles and movements while maintaining visual consistency.
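For a sense of scale, the live-image specifications translate into some simple arithmetic. The sketch below is purely illustrative and is not part of any Google API: `clip_stats` is a hypothetical helper, and treating 360 as the width and 640 as the height is an assumption, since the announcement does not state the orientation (the pixel counts are the same either way).

```python
# Illustrative arithmetic only; not a Google or Vertex AI API.
# Inputs are the figures from the announcement: 4 seconds, 24 fps, 360x640 pixels.
DURATION_S = 4
FPS = 24
WIDTH, HEIGHT = 360, 640  # assumption: orientation (width vs. height) is not specified


def clip_stats(duration_s: int, fps: int, width: int, height: int) -> dict:
    """Hypothetical helper: frame and pixel counts for a fixed-length, fixed-rate clip."""
    frames = duration_s * fps              # total number of frames in the clip
    pixels_per_frame = width * height      # pixels in a single frame
    return {
        "frames": frames,
        "pixels_per_frame": pixels_per_frame,
        "total_pixels": frames * pixels_per_frame,
    }


if __name__ == "__main__":
    print(clip_stats(DURATION_S, FPS, WIDTH, HEIGHT))
```

Running it prints 96 frames of 230,400 pixels each, or 22,118,400 pixels per clip.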

Imagen 2.0 also offers advanced image editing features such as inpainting and outpainting, which let users remove unwanted elements, add new ones, or extend the edges of an image using text input.

The digital watermarking feature, based on Google DeepMind's SynthID, is now generally available. It allows customers to add invisible watermarks to images and live images generated by the Imagen model family, and to verify them.