Google positions Gemini as the "glue" for its new XR ecosystem

Key Points

- Google is integrating Gemini as the central AI for its XR platform, adding headset features that create realistic avatars and automatically convert 2D content into 3D.

- The company is partnering with Samsung and the eyewear brands Gentle Monster and Warby Parker on smart glasses whose cameras let the AI analyze the wearer's surroundings and offer proactive help, such as train departure times.

- Developers can now use the Gemini Live API to build apps that draw on visual and audio input from the glasses to guide users through real-world tasks.

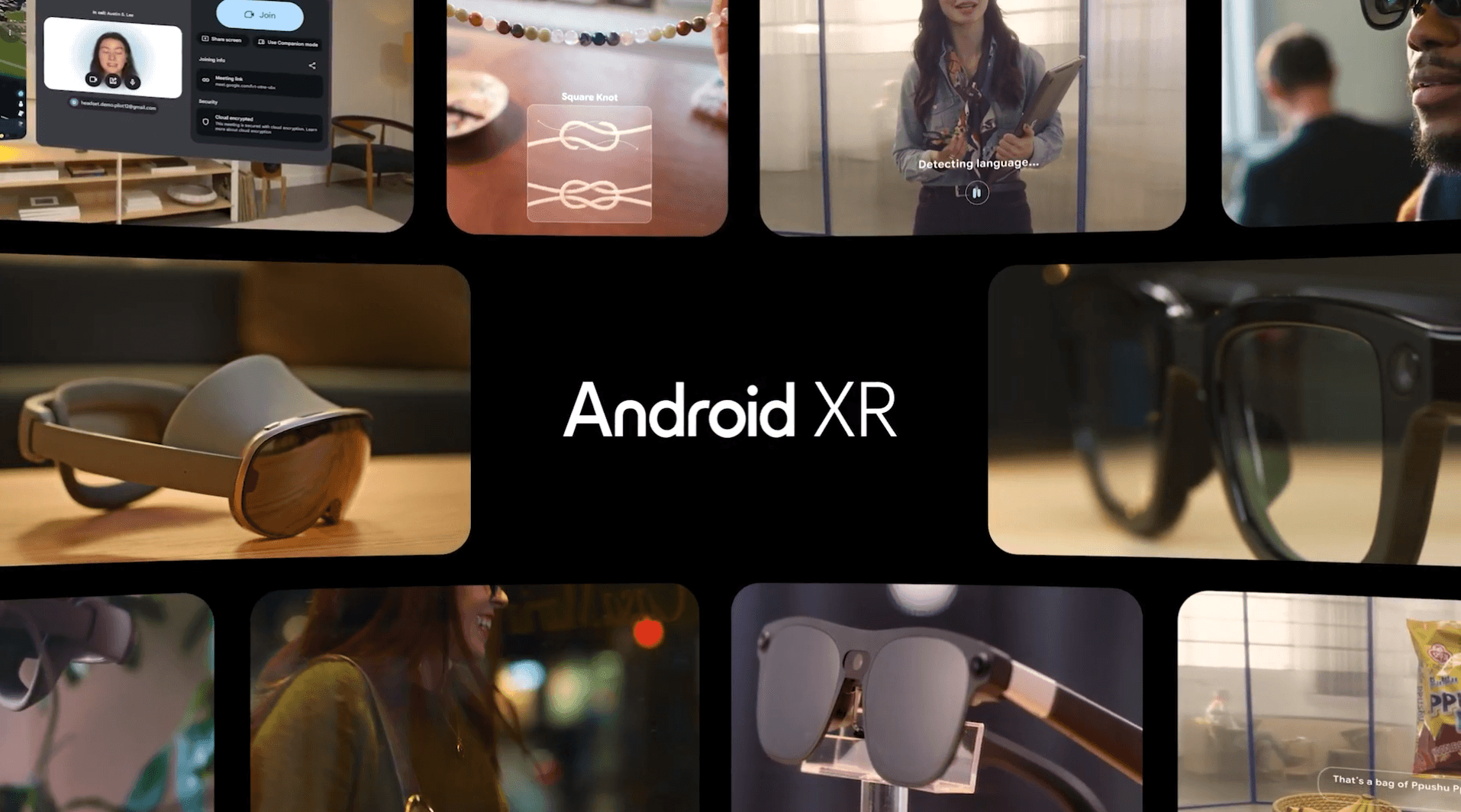

Google is expanding its XR ecosystem and positioning the Gemini language model as the central interface. Alongside generative AI features for headsets, the company announced partnerships for glasses designed primarily as hardware carriers for multimodal AI assistants.

During the "Android Show: XR Edition," Google clarified the strategic direction of its Android XR platform. While new hardware form factors were introduced, the technological focus is clearly on deep AI integration. Google describes Gemini as the "glue" holding the ecosystem together, enabling context-aware interaction across different device types.

Google is rolling out new AI features now for the Samsung Galaxy XR headset, which is already on sale. One technically ambitious addition is "Likeness," which, according to the Google blog, is entering beta. The feature creates a realistic digital avatar of the user that mirrors their facial expressions and hand gestures in real time. Intended to make video calls feel more authentic, the system relies on computer vision to capture the user's likeness and movements.

Another AI feature, announced for next year, is system-wide "auto-spatialization." It uses on-device AI to analyze conventional 2D content, such as YouTube videos or games, and automatically convert it into stereoscopic 3D.

Smart glasses become multimodal input devices

Google's most significant push toward ubiquitous AI assistance, however, is planned for smart glasses. Together with Samsung and the eyewear brands Gentle Monster and Warby Parker, the company is developing "AI glasses" intended to compete directly with Meta's offerings.

Google divides its smart glasses into two categories, audio AI glasses and display AI glasses, which support different forms of interaction. All models, however, include cameras and microphones that give Gemini access to the user's physical environment. In a demo, Google showed the glasses identifying objects and translating text in real time, familiar territory for the tech giant. The AI is also designed to offer information proactively: when a user arrives at a train station, for example, the glasses can automatically display departure times for the next trains.

Developers get access to Gemini Live API

To populate the ecosystem with apps, Google is releasing Developer Preview 3 of the Android XR SDK. Most importantly for AI developers, this release integrates the Gemini Live API for glasses.

This lets developers build apps that use visual and audio data from the glasses to trigger context-aware actions. Google demonstrated this with an Uber integration: the AI glasses recognize that the user is at an airport, visually guide them to the pickup point, identify the driver's license plate, and display status information.

In addition to the glasses, Google introduced "Project Aura" by XREAL, a wired XR headset that serves as an external monitor and AR interface. Gemini is integrated here as well, analyzing screen content and providing assistance via overlays.
