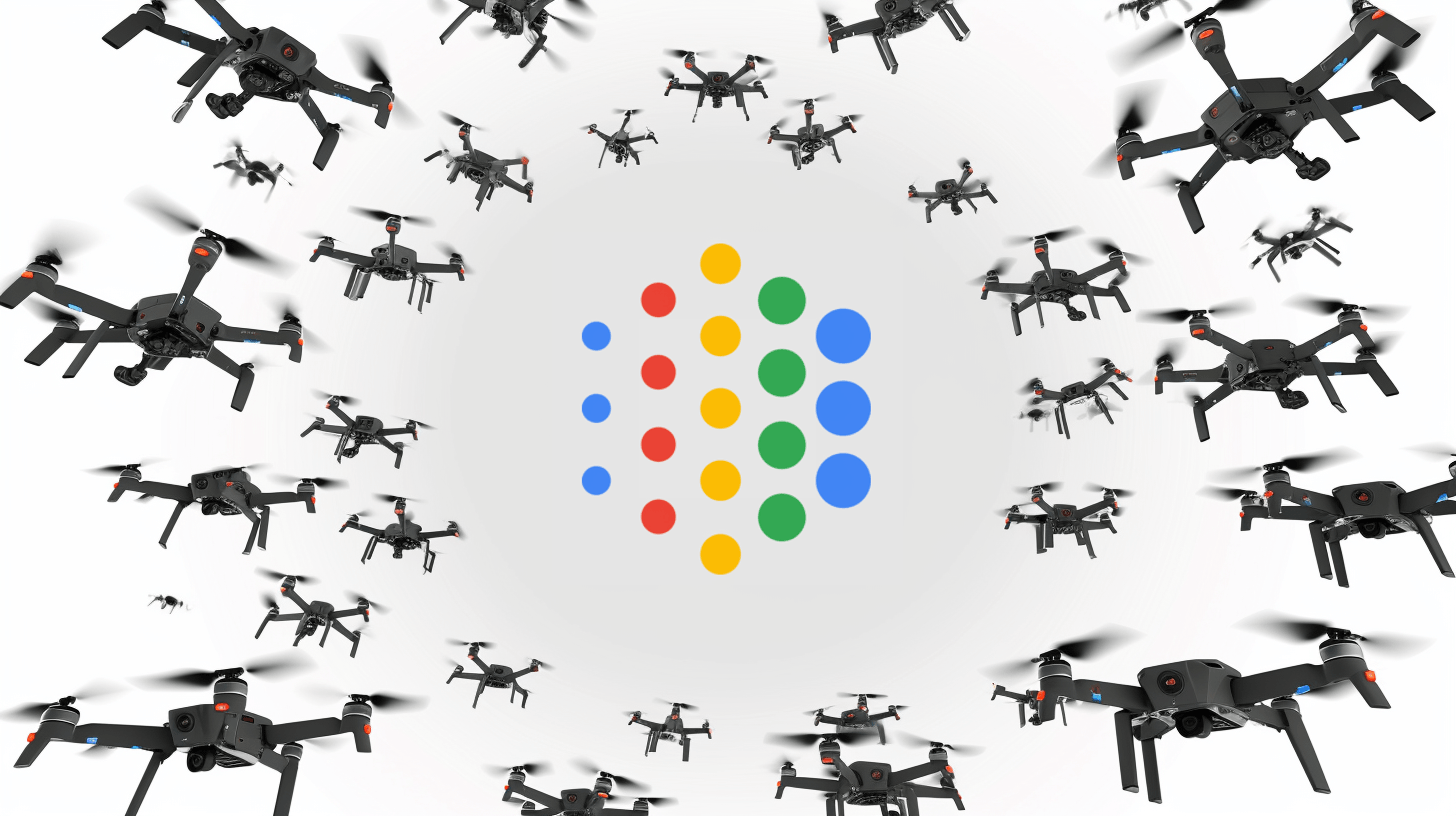

Google signals openness to AI defense contracts, dropping previous restrictions

The tech giant removed previous restrictions on weapons and surveillance systems from its AI guidelines, joining other major AI companies working with defense contractors.

Google has rewritten its ethical guidelines for artificial intelligence. According to the Washington Post, the company has dropped its previous ban on using AI technology in weapons and surveillance systems.

The old guidelines specifically prohibited four types of applications: weapons, surveillance, technologies that "cause or are likely to cause overall harm," and anything that violates international law and human rights.

The new guidelines instead emphasize human oversight and testing to stay aligned with "widely accepted principles of international law and human rights" while "minimizing unintended or harmful outcomes."

Google says the change reflects a growing global race for AI leadership. "We believe democracies should lead in AI development, guided by core values like freedom, equality, and respect for human rights," wrote Google DeepMind CEO Demis Hassabis and Senior Vice President James Manyika in a blog post.

Tech sector embraces defense partnerships

The move is a stark departure from 2018, when thousands of Google employees protested the company's military contracts, declaring that Google should not be in the business of war. Those objections now feel like ancient history.

The trend extends beyond Google. OpenAI recently partnered with defense contractor Anduril to develop AI-powered drone defense systems for the US military. Meta has given the US military access to its Llama AI models, while Anthropic is working with Palantir to provide US intelligence and defense agencies with specialized versions of Claude through Amazon Web Services. Last year, Microsoft reportedly proposed to the US Department of Defense that OpenAI's DALL-E image generator be used to help develop software for military operations.