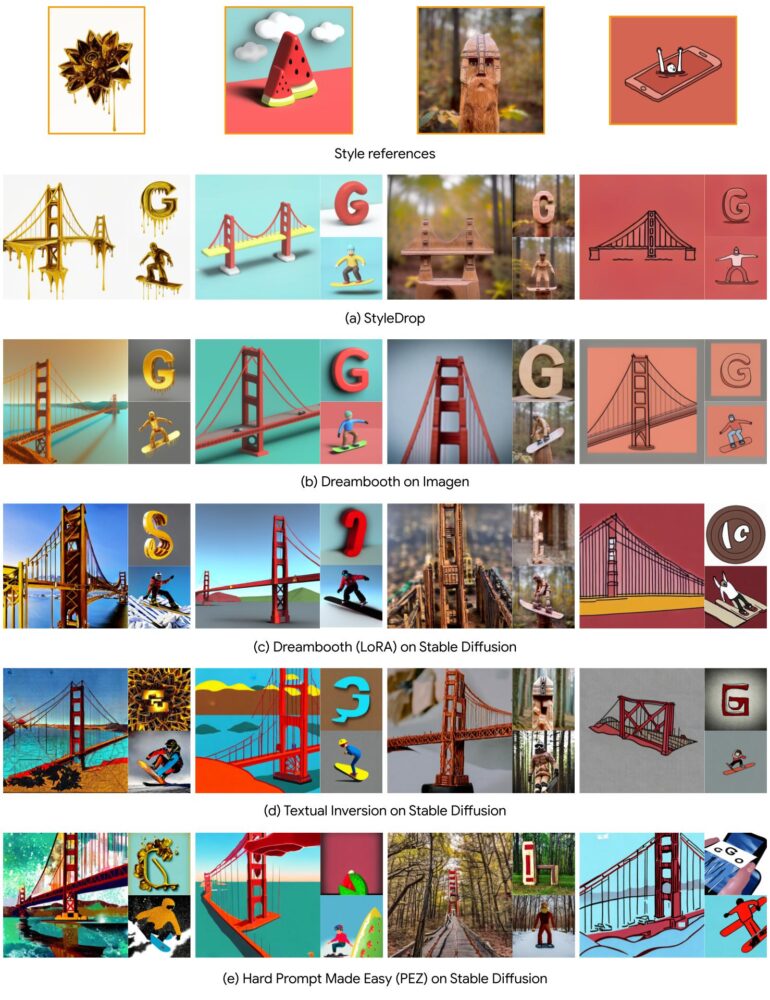

StyleDrop learns the style of any image and helps a generative AI model recreate it. According to Google, the method outperforms alternatives such as Dreambooth, LoRA, and Textual Inversion.

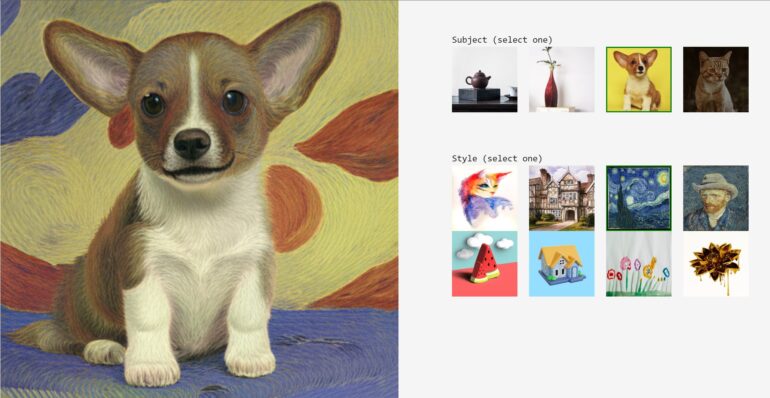

Google's new method enables the synthesis of images in a specific style using the Muse text-to-image model. StyleDrop captures the intricacies of a custom style, including color schemes, shading, design patterns, and local and global effects. According to Google, all that's needed as input is a single image.

StyleDrop learns the new style by fine-tuning a small number of trainable network parameters and then improves the quality of the model through iterative training with human or automatic feedback.
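
The article does not say which parameters are trained. One common parameter-efficient approach is a small bottleneck adapter added to each layer of a frozen model, and the sketch below only illustrates why such a setup trains a tiny share of the weights. All sizes and function names here are hypothetical and are not taken from Google's description.

```python
def adapter_params(hidden_dim: int, bottleneck_dim: int) -> int:
    """Weights and biases in one bottleneck adapter (down-projection, then up-projection)."""
    down = hidden_dim * bottleneck_dim + bottleneck_dim
    up = bottleneck_dim * hidden_dim + hidden_dim
    return down + up


def trainable_fraction(base_params: int, num_layers: int,
                       hidden_dim: int, bottleneck_dim: int) -> float:
    """Share of all parameters that are trained when only the adapters are updated."""
    added = num_layers * adapter_params(hidden_dim, bottleneck_dim)
    return added / (base_params + added)


# Hypothetical sizes, chosen only for illustration.
print(adapter_params(1024, 64))  # 132160
print(f"{trainable_fraction(3_000_000_000, 48, 1024, 64):.4%}")
```

With numbers in this range, the trained parameters make up well under one percent of the model, which is consistent with the fast training the team reports.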

StyleDrop learns fast and with few examples

Specifically, StyleDrop is first trained on the input image and then generates a set of images in that style. The best of these are selected, either by CLIP score or by human feedback, and used for further training. An image counts as high quality if it reproduces the style of the original image but not its content.
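
The selection step can be sketched roughly as follows. This is a minimal illustration, not Google's implementation: the `Candidate` type, the two scores, and the `max_content` threshold are assumptions, and the scores stand in for whatever a CLIP-based metric or a human rater would provide.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    style_score: float    # higher = closer to the reference style (hypothetical score)
    content_score: float  # higher = more of the reference image's content copied


def select_for_next_round(candidates: List[Candidate], keep: int,
                          max_content: float = 0.5) -> List[Candidate]:
    """Drop images that copy the reference content, then keep the most on-style ones."""
    eligible = [c for c in candidates if c.content_score <= max_content]
    ranked = sorted(eligible, key=lambda c: c.style_score, reverse=True)
    return ranked[:keep]


# Made-up scores for four generated images.
samples = [
    Candidate("cat", 0.91, 0.10),
    Candidate("copy_of_reference", 0.97, 0.95),
    Candidate("bridge", 0.62, 0.05),
    Candidate("teapot", 0.84, 0.20),
]
chosen = select_for_next_round(samples, keep=2)
print([c.name for c in chosen])  # ['cat', 'teapot']
```

The near-copy of the reference has the highest style score but is rejected because it reproduces the content, which mirrors the quality criterion described above. The images that survive would then be used as training data for the next round.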

The whole process takes less than three minutes, even with human feedback, because StyleDrop needs fewer than a dozen images for iterative training, the team said.

According to the team, StyleDrop outperforms other methods for tuning text-to-image models to a style, including Dreambooth, LoRA, and Textual Inversion applied to Imagen and Stable Diffusion.

StyleDrop for style, Dreambooth for objects

"We see that StyleDrop is able to capture nuances of texture, shading, and structure across a wide range of styles, significantly better than previous approaches, enabling significantly more control over style than previously possible," the team said.

The team also combines StyleDrop with Dreambooth: Dreambooth learns a new object, StyleDrop learns a style, and used together with Muse they generate a custom object in a custom style.

Google sees StyleDrop as a versatile tool. One use case is for designers or companies to train it on their brand assets and quickly prototype new ideas in their own style. More information is available on the StyleDrop project page.