Google's complex path to the future of search

Google plans to launch a new chatbot search later this year. How will it work?

Google's biggest challenge with chatbot search is a shift in responsibility: right now, Google links to answers written by website publishers. With chatbot search, it would give direct and authoritative answers itself.

Today, Google is already partly responsible for the answers to search queries: by ranking search results, Google influences what information people find. With zero-click search, Google confidently displays snippets of web pages, some more appropriate than others, directly in response to queries.

But with chatbot search, which according to the New York Times is planned for 2023, Google will have to take full responsibility for every answer it provides, at least in theory. More on this later.

The pitfalls of chatbot search

Beyond monetization, there are at least four key challenges for Google:

- The chatbot's information cannot be wrong. Otherwise, the chatbot is untrustworthy for a search query, and thus redundant or even harmful.

- Even if Google were able to create a reliable search chatbot, taking responsibility for the answers ("Google said that ...") would be a major challenge at the scale at which Google operates. Even correct answers could be misinterpreted.

- Google needs a system that integrates site operators and content creators. On an ongoing basis, they provide the information the chatbot needs to generate answers. They are also Google's advertising customers for search and display ads.

- As Google integrates site owners and content creators into chatbot search, it must do so in a way that preserves copyrights and allows publishers to profit. The years-long debate over an EU-wide ancillary copyright for press publishers shows how difficult this task is. A chatbot could give that debate further momentum.

Like OpenAI with ChatGPT, Google could launch an experimental, standalone chatbot product that offers many capabilities besides answering questions, is not explicitly designed as a search engine, and therefore does not have to fully meet all of the above requirements.

This chatbot could be DeepMind's Sparrow, taking the wind out of Microsoft's and OpenAI's sails and reassuring shareholders. But it would only be a short-term fix.

Google will optimize zero-click search with AI

In the long term, I think it's more likely that Google will use AI to incrementally optimize existing search, always planning for higher levels of monetization. Search has been Google's growth engine and cash cow for years, generating billions in revenue quarter after quarter. Google is not going to take a financial risk and reset its search engine.

Instead, Google will first identify areas where it has a high probability of providing competent answers. Language models such as Med-PaLM, which are tuned to specific sets of questions, can answer questions in their domain at a level approaching that of human experts.

Google could train such models for different categories and fine-tune them through user feedback. In categories that AI can reliably serve, Google will experiment with new advertising formats, such as advertising links directly in AI-generated answers.

In addition, Google will use AI methods to reliably summarize multiple sources. It will weave these summaries and citations into its AI responses, attempting to provide a human source alongside each AI claim. In this way, Google could shift at least some responsibility to the cited sources, but would still benefit from drawing more attention to its platform.

At the same time, Google would become less dependent on the content that website operators and publishers provide for search. Google's AI responses would also likely increase the number of interactions and time spent on its platform, boosting its advertising value.

Meta's scientific AI model, Galactica, has shown that there are still technical problems to solve with citations. However, Google can set stricter guidelines.

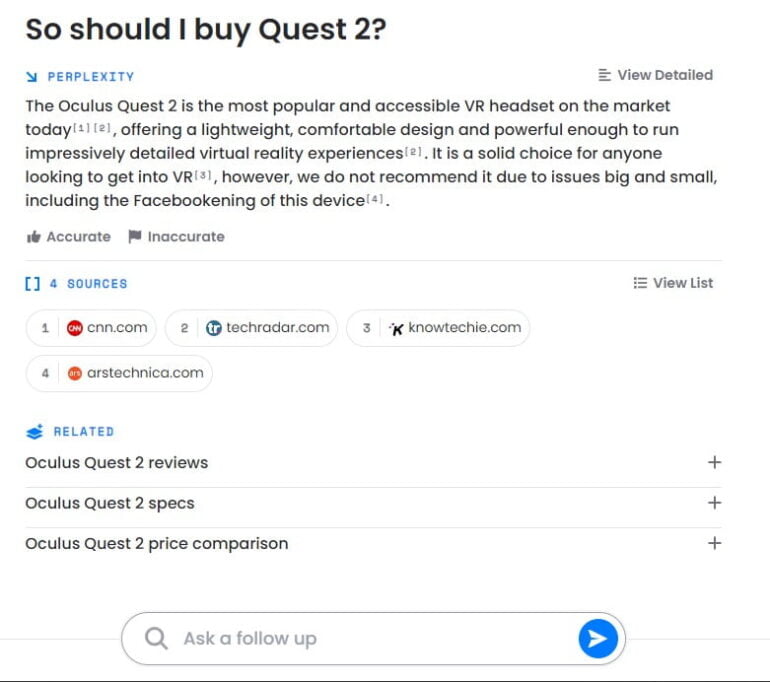

Perplexity.ai gives you a sneak peek at the future of Google search

If you are wondering how such a combined chatbot-and-citation search could work in practice, head over to Perplexity.ai's website: the experimental chat search engine generates an answer to a question based on content from website operators and lists further sources.

For example, if I ask about the best VR headset in 2022, it tells me Meta Quest 2, gives a reason for its recommendation, and points to further sources. This works well on the surface, but falls apart as soon as I ask a more in-depth question: Should I really buy the Quest 2?

Quest 2 is the best VR headset, Perplexity.ai answers, but it does not recommend buying it because it is made by Facebook, and it bases this on the opinion of a single tech editor.

Moreover, Perplexity's recommendation changes with each new query, even if the tenor remains similar. The experimental search engine is therefore only suitable as an interface demo, a proof of concept. This is how it might work. And Google? It could do better.

With a step-by-step approach, using specialized models trained on immense amounts of data and combined with user feedback data that only Google has at this scale, the search company could gradually address content issues in more and more categories and answer more and more reliably with AI.

This process will be gradual and could take years, and publishers will suffer. I expect big discussions about copyright and regulation.
