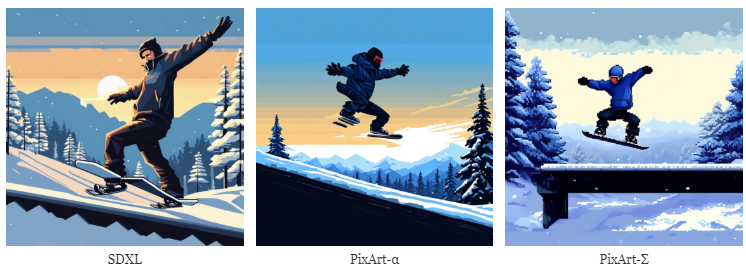

PixArt-Σ outperforms SDXL with significantly fewer parameters, and even outperforms commercial models.

Researchers from the Huawei Noah's Ark Lab and several Chinese universities recently introduced PixArt-Σ (Sigma), a text-to-image model based on the earlier results of PixArt-α (Alpha) and PixArt-δ (Delta), which offers improved image quality, prompt accuracy and efficiency in handling training data. Its unique feature is the superior resolution of the images generated by the model.

Images with higher resolution and closer to the prompt

PixArt-Σ can directly generate images up to 3,840 x 2,560 pixels without an intermediate upscaler, even in unusual aspect ratios. Previous PixArt models were limited to 1,024 x 1,024 pixels.

Higher resolution also leads to higher computational requirements, which the researchers try to compensate for with a "weak-to-strong" training strategy. This strategy involves specific fine-tuning techniques that enable a fast and efficient transition from weaker to stronger models, the researchers write.

The techniques they used include using a more powerful variable autoencoder (VAE) that "understands" images better, scaling from low to high resolution, and evolving from a model without key-value compression (KV) to a model with KV compression that focuses on the most important aspects of an image. Overall, efficient token compression reduced training and inference time by 34 percent.

According to the paper, the training material collected from the Internet consists of 33 million images with a resolution of at least 1K and 2.3 million images with a resolution of 4K. This is more than double the 14 million images of PixArt-α training material. However, it is still a far cry from the 100 million images processed by SDXL 1.0.

In addition to the resolution of the images in the training material, the accuracy of the descriptions also plays an important role. While the researchers observed hallucinations when using LLaVA in PixArt-α, this problem is largely eliminated by the GPT-4V-based share-captioner. The open-source tool writes detailed and accurate captions for the images collected to train the PixArt-Σ model.

In addition, the token length has been increased to approximately 300 words, which also results in a better content match between the text prompt and image generation.

Compared to other models, PixArt-Σ showed better performance in terms of image quality and prompt matching than existing open-source text-image diffusion models such as SDXL (2.6 billion) and SD Cascade (5.1 billion), despite its relatively low parameter count of 600 million. In addition, a 1K model comparable to PixArt-α required only 9 percent of the GPU training time required for the original PixArt-α.

PixArt-Σ can keep up with commercial alternatives such as Adobe Firefly 2, Google Imagen 2, OpenAI DALL-E 3 and Midjourney v6, the researchers claim.

The researchers do not show any textual content in their example images. While Stable Diffusion 3, Midjourney, and Ideogram in particular have recently made great strides in this area, PixArt is likely to perform less well due to its training focus on high-resolution photographs.

"We believe that the innovations presented in PixArt-Σ will not only contribute to advancements in the AIGC community but also pave the way for entities to access more efficient, and high-quality generative models," the scientists conclude in their paper.

Other research could benefit from their insights on how to handle training data more efficiently. PixArt-α was eventually released as open source, but we don't know yet if this will be the case for PixArt-Σ.