'Inscrutable Wizards': How Chinese AI startup Deepseek is making Silicon Valley look slow

Chinese AI startup Deepseek is turning heads in Silicon Valley by matching or beating industry leaders like OpenAI o1, GPT-4o and Claude 3.5 - all while spending far less money. Who's behind the team of academic researchers outmaneuvering tech's biggest names?

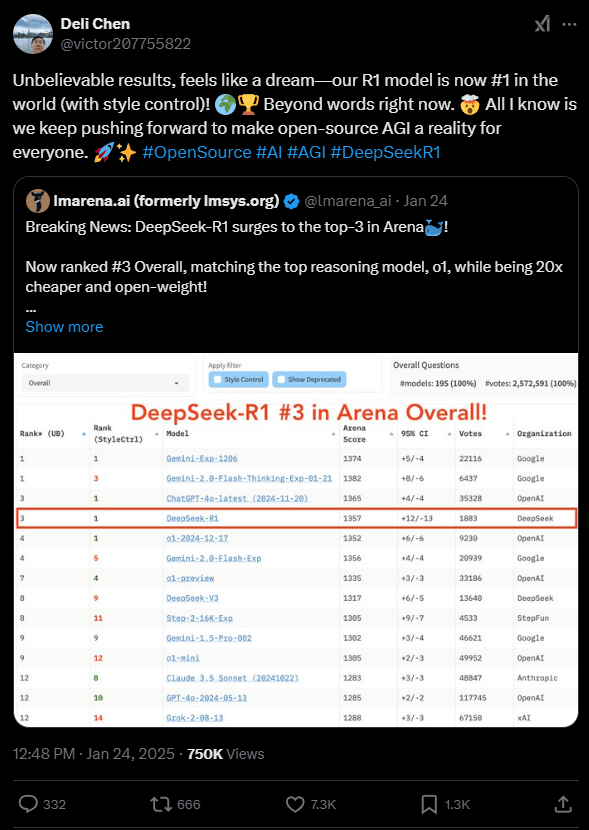

Deepseek out-accelerates Silicon Valley accelerators: The company's latest model, Deepseek-V3, performs better than leading commercial AI systems in benchmark tests, according to independent evaluations. Just months earlier, their R1-Lite model had nearly matched OpenAI's o1-preview, with the final R1 version now performing at the same level.

While Deepseek builds on Western open-source work, it's also introducing fresh ideas. The company's rapid progress has caught the attention of tech leaders, including Meta CEO Mark Zuckerberg, who's reportedly concerned about their efficiency and speed.

From finance to AI breakthrough

The story begins with Liang Wenfeng, born in 1985 to a primary school teacher in Zhanjiang. After graduating from Zhejiang University in 2006, he explored machine learning in finance during his master's studies.

Unlike tech CEOs such as Sam Altman or Elon Musk, Wenfeng stays out of the spotlight. His IEEE profile shows he remains deeply involved in research, publishing papers in 2024 about AI in manufacturing and novel materials.

By 2015, Wenfeng and two classmates had founded the quant hedge fund High-Flyer, which grew to manage about 13 billion euros within six years, becoming one of China's "Four Kings of Quant Investing." This success led to the creation of High-Flyer AI in 2019.

In 2021, what seemed like an expensive hobby turned into something more significant. Wenfeng began buying thousands of Nvidia GPUs for what he called an AI "side project." One business partner remembers meeting a "very nerdy guy with terrible hair" who struggled to explain his vision, but simply wanted to create something meaningful.

That "hobby" proved prescient - High-Flyer acquired over 10,000 Nvidia GPUs before U.S. export restrictions kicked in, and used them to enhance its Fire-Flyer supercomputer focused on deep learning, laying the groundwork for its eventual success.

Building a research-first culture

When Deepseek officially launched in May 2023, it looked different from typical startups. The offices in Beijing and Hangzhou feel more like a "university campus for serious researchers" (via FT) than a tech company.

Deepseek quickly released its first product, Deepseek Coder, then the broader Deepseek LLM, and within a year followed up with the much-improved Coder-V2 and Deepseek-V2.

Between 100 and 140 people work on model development among the 200–300 employees. What sets Deepseek apart is its laser focus on fundamental research rather than commercial applications. The company is fully funded by High-Flyer and commits to open-sourcing its work - even its pursuit of artificial general intelligence (AGI), according to Deepseek researcher Deli Chen.

According to Wenfeng, they hire mainly top university graduates and late-stage PhD students who've published in leading journals but have little industry experience. While the team prioritizes research over profit, Deepseek matches ByteDance in offering China's highest AI engineer salaries, the Financial Times reports.

A focus on curiosity over commerce

Deepseek's approach stands apart from most Western AI companies. Their X profile simply states: "Unravel the mystery of AGI with curiosity. Answer the essential question with long-termism." You won't find the usual corporate promises about safety or competition.

Wenfeng is candid about putting research first: "If we have to find a commercial reason, we probably can't, because it's not profitable. From a commercial point of view, basic research has a very low return-on-investment ratio, and when OpenAI's early investors put in their money, they didn't think about the returns. They did it because they wanted it."

Wenfeng himself is focused on a bigger picture: changing China's tech culture. He hopes Deepseek will inspire more "hardcore innovation" throughout China's economy. "The real difference isn't a year or two, it's between originality and imitation," he says of catching up with the U.S. He believes that once society rewards true innovation, the mindset will follow.

AI industry leaders praise Deepseek's approach

The AI community has taken notice. Jack Clark, former OpenAI policy head and Anthropic co-founder, said Deepseek hired a group of "inscrutable wizards." Andrej Karpathy praised their efficiency: "DeepSeek making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget."

Senior Nvidia researcher Jim Fan sees their limited resources as an advantage: "Resource constraints are a beautiful thing. Survival instinct in a cut-throat AI competitive land is a prime drive for breakthroughs."

"Superior OSS models put huge pressure on commercial, frontier LLM companies to move faster," Fan wrote.

Meta's AI chief scientist Yann LeCun called their V3 model "excellent" and praised their open-source commitment, saying they've followed the true spirit of open research by improving existing technology and sharing their process.

Questions about government oversight and training methods

Despite the impressive benchmarks and industry praise, several questions cloud Deepseek's rise. Like all Chinese AI companies, Deepseek's models must comply with state censorship, and their relationship with the government remains unclear.

There's also uncertainty about their training methods - their models sometimes identify themselves as ChatGPT, suggesting they might train on Western AI outputs.

But while most Western AI companies prohibit this practice, they face copyright lawsuits of their own: they trained on copyrighted data to build systems that may compete with the very people who created that data.

This raises questions about who gets to set the rules for AI development and training, and shines a light on the industry's blatant double standards. In a way, it seems like poetic justice for Deepseek to ignore these rules to catch up.
