Meta AI reconstructs typed sentences from brain activity with up to 80% character accuracy

Meta's AI research team has demonstrated a breakthrough in decoding brain activity, successfully reconstructing typed sentences from brain recordings.

Working with scientists at the Basque Center on Cognition, Brain and Language in Spain, Meta's Fundamental AI Research Lab (FAIR) has published two studies that advance our understanding of how the human brain processes language. The research builds on previous work from French neuroscientist Jean-Rémi King, which focused on decoding visual perceptions and language from brain signals.

Tracking thoughts to full sentences

In their first study, researchers used MEG (magnetoencephalography) and EEG (electroencephalography) to capture brain activity from 35 participants as they typed sentences. An AI system then learned to reconstruct what they had typed based solely on these brain signals.

The system achieved up to 80 percent accuracy at the character level, often managing to reconstruct complete sentences from brain activity alone. While impressive, the technology still has limitations: MEG requires participants to remain still in a magnetically shielded room, and additional studies with brain injury patients are needed to prove clinical usefulness.
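To make "accuracy at the character level" concrete, here is a minimal sketch of one common way to score a decoded sentence against what was actually typed. The article does not say how the researchers computed the figure, so this is an assumption: accuracy is taken as one minus the character error rate, where the error rate is the edit distance between the two strings divided by the length of the typed sentence. The function names and the example sentences are illustrative, not taken from the study.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    or substitutions needed to turn string a into string b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning a[:i] into the empty string costs i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute, or keep if equal
            ))
        prev = curr
    return prev[-1]


def character_accuracy(typed: str, decoded: str) -> float:
    """Assumed metric: 1 - character error rate, floored at 0."""
    if not typed:
        raise ValueError("typed sentence must not be empty")
    error_rate = levenshtein(typed, decoded) / len(typed)
    return max(0.0, 1.0 - error_rate)


# Hypothetical example: one wrong character in an 11-character sentence.
typed = "the cat sat"
decoded = "the cet sat"
print(f"{character_accuracy(typed, decoded):.1%}")
```

Under this definition, 80 percent accuracy means roughly one character in five still has to be corrected, which is why whole sentences are reconstructed often rather than always.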

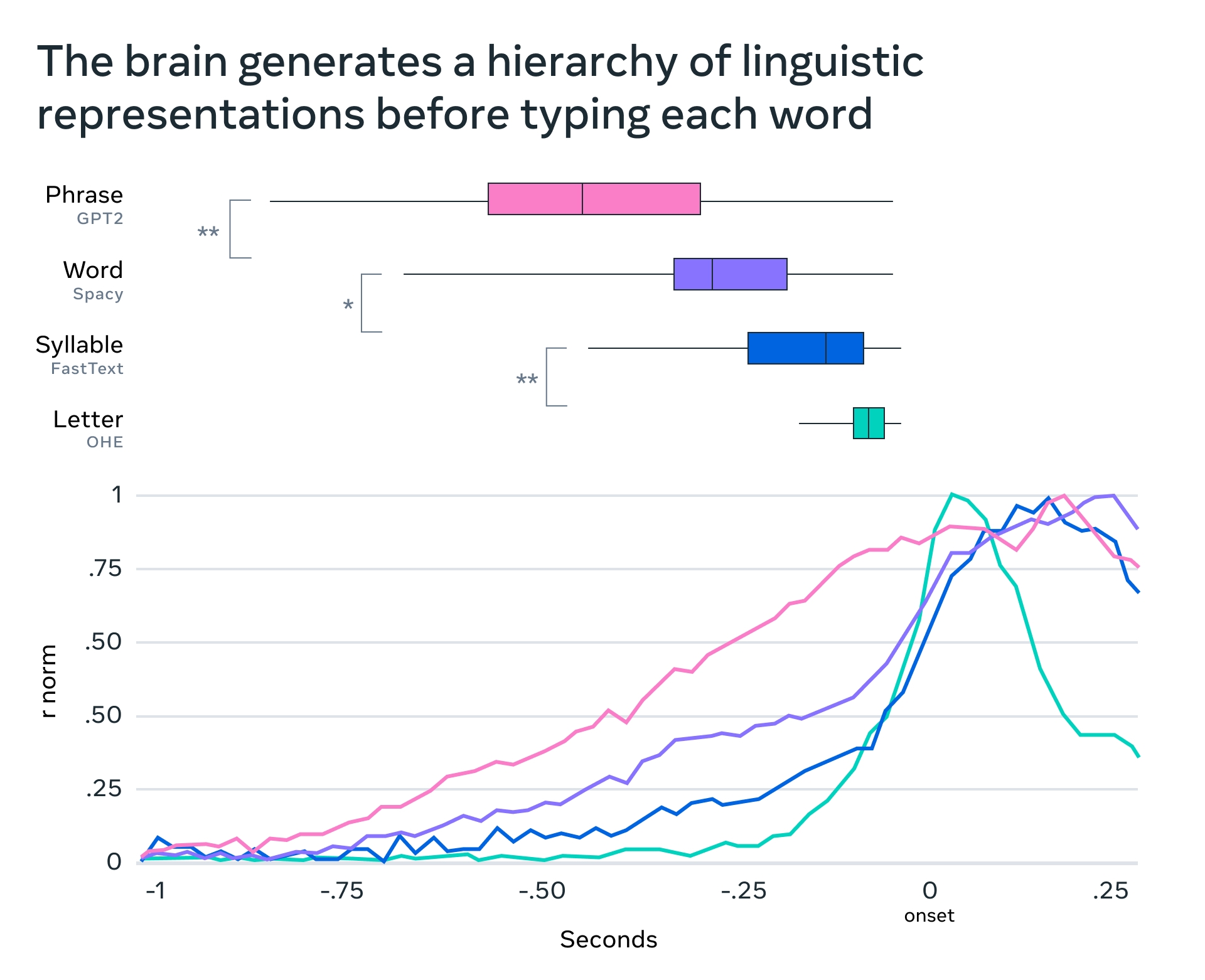

The second study explored how our brains transform thoughts into complex movement sequences. Since mouth and tongue movements typically interfere with brain signal measurements, researchers analyzed MEG recordings as participants typed instead. By sampling brain activity 1,000 times per second, they tracked the precise moment thoughts become words, syllables, and letters.

The findings show that the brain starts with abstract representations of meaning before gradually converting them into specific finger movements. A specialized "dynamic neural code" allows the brain to represent multiple words and actions simultaneously and coherently.

Deciphering neural code remains a challenge

Millions of people experience communication difficulties each year due to brain lesions. Potential solutions, such as neuroprostheses paired with AI decoders, face challenges because current non-invasive methods are limited by noisy signals. Meta describes unraveling the neural code of language as a core challenge for both AI and neuroscience, and argues that insights into how the brain structures language could in turn advance AI.

Meta's open-source AI research is already seeing practical applications in healthcare. The French company BrightHeart uses Meta's open-source model DINOv2 to detect congenital heart defects in ultrasound images. Similarly, the US company Virgo uses the same model to assess endoscopy videos.
