OpenAI brings native image generation to ChatGPT

OpenAI has integrated image generation capabilities directly into ChatGPT, replacing its previous DALL-E integration. The new system aims to provide more consistent results and fewer content restrictions.

OpenAI has started rolling out the native image generation capability introduced alongside GPT-4o in May 2024. According to the company, this feature will become the standard image generator for all ChatGPT users, from the free tier to Enterprise customers. API access for developers is planned for the coming weeks. DALL-E will still be available as a separate option through a dedicated GPT.

Better precision through multimodal processing

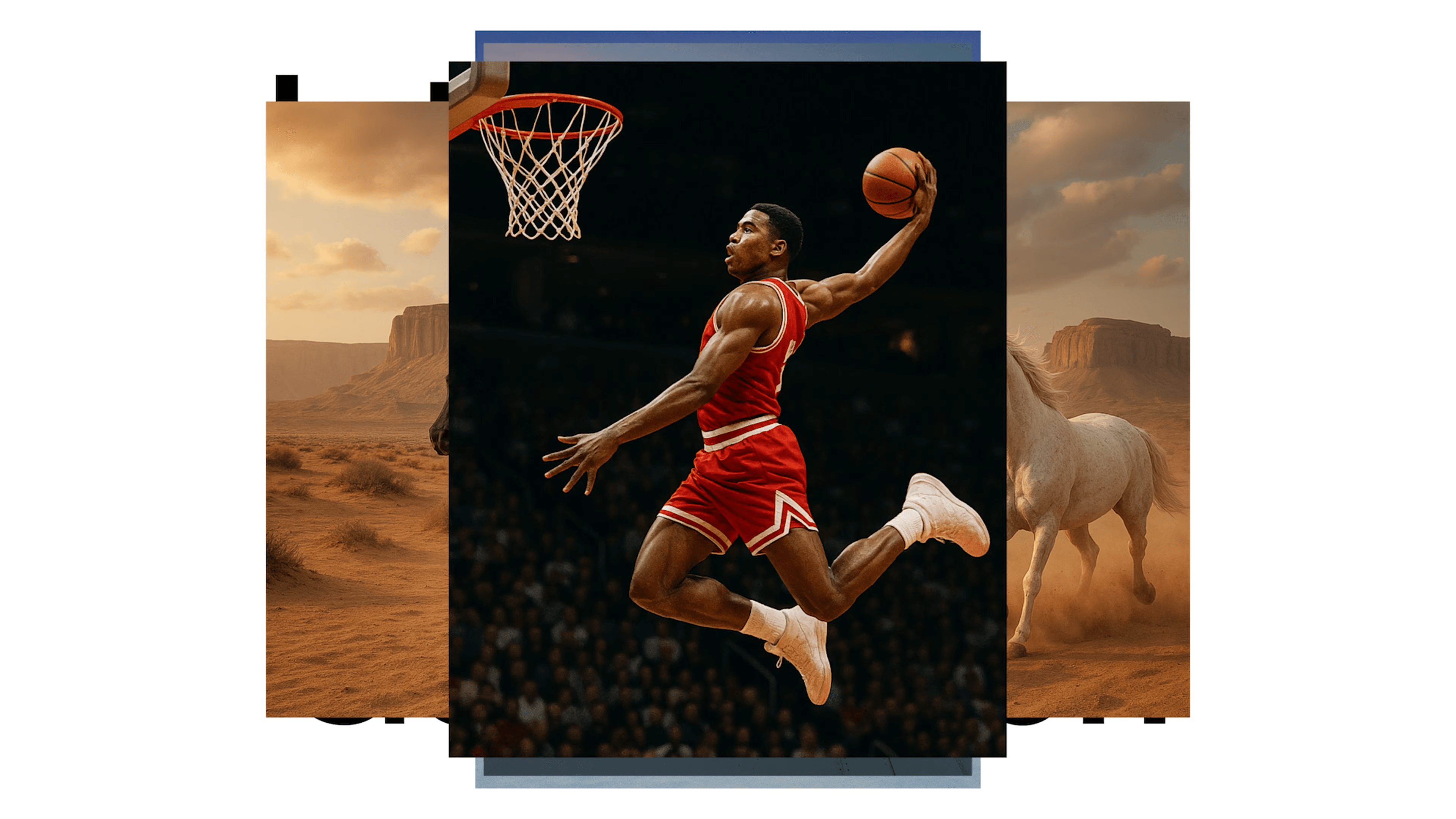

The new system processes text and images together, leading to more accurate results. According to OpenAI, it can handle up to 20 different objects simultaneously while maintaining correct relationships between them. This capability makes it particularly effective at generating text within images, such as creating infographics or logos.

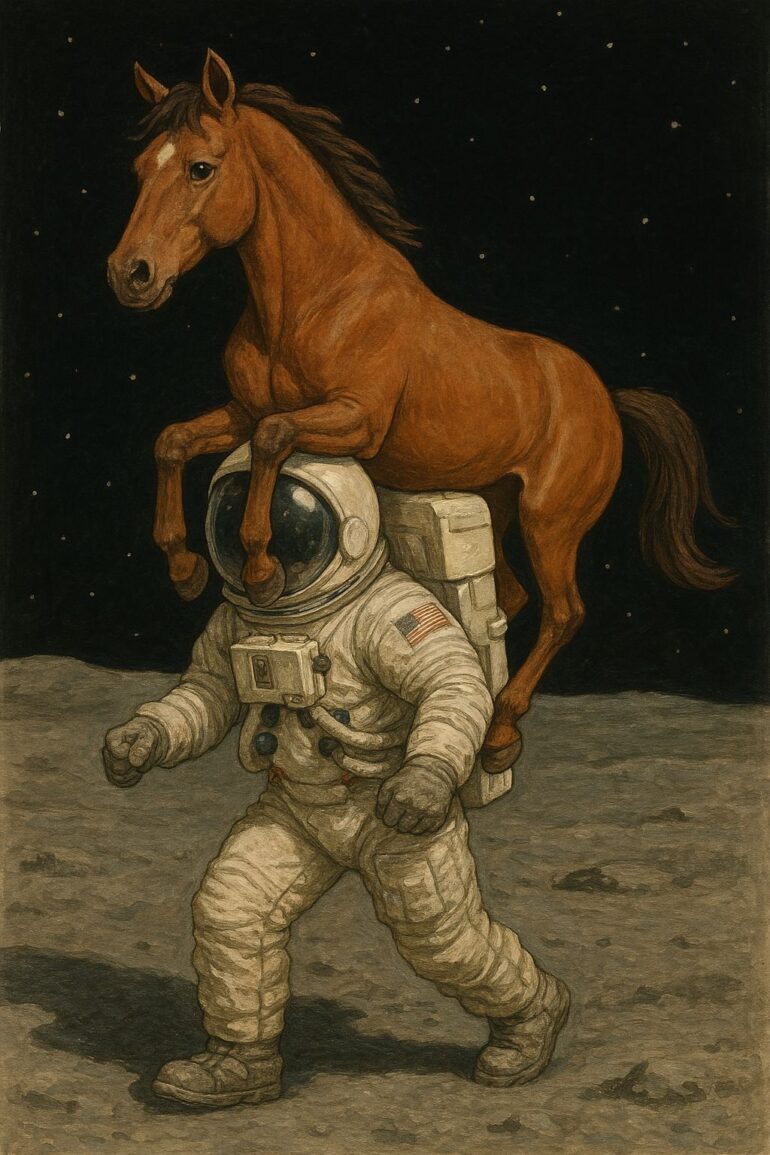

The system shows particular strength with unconventional concepts. When asked to generate "a horse riding an astronaut," previous models would typically default to the more common scenario of an astronaut riding a horse.

GPT-4o, however, accurately creates the unusual arrangement, suggesting it has a deeper understanding of spatial relationships rather than simply reproducing common patterns from its training data. This capability could significantly expand the creative possibilities for AI image generation.

The model is capable of "in-context learning", allowing it to analyze uploaded images and incorporate their details into new generations. Users can refine their results through natural conversation, with the AI maintaining context across multiple exchanges - making it easier to iteratively perfect an image through dialogue.

Early testing shows the system produces more consistent images than DALL-E 3, though it's not perfect yet. Users might notice small inconsistencies between generations, like slight changes in a character's hairstyle or clothing details.

OpenAI is upfront about the system's current limitations. The model sometimes crops images incorrectly, generates hallucinations similar to those seen in text models, and struggles with scenes containing many distinct concepts.

It also has trouble accurately rendering non-Latin text. The company says it's working to improve how users can edit specific parts of generated images.

OpenAI adds C2PA metadata to all generated images, clearly identifying them as AI-created. The company has also built an internal search system to track and identify images created through the new system.

Shifting toward less restrictive policies

In a shift from DALL-E 3's strict moderation, OpenAI CEO Sam Altman announced the new system allows more creative freedom, including potentially offensive content "within reason." However, the platform still blocks requests for deepfakes, violence, and unauthorized depictions of real people.

This launch follows Google's recent release of a similar function for its Gemini model, which also emphasized benefits like cross-image consistency, conversational editing, and accurate text rendering.

While dedicated image generators like Midjourney or Ideogram still offer user interfaces specifically designed for image creation, they may not match the accuracy provided by natively integrated multimodal models like ChatGPT's new feature—an aspect often critical for image creation tasks.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.