Tencent releases "Hunyuan World Model 1.0-Lite" for faster, resource-efficient 3D scene generation

Update as of August 15, 2025:

Tencent has released a streamlined version of its Hunyuan World Model 1.0 called "1.0-Lite." According to the company, the Lite variant is designed to run on consumer GPUs and needs about 35 percent less VRAM than the original model, so it works with less than 17 gigabytes of graphics memory. Tencent says it also runs faster and uses fewer resources overall.
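The two figures Tencent gives also imply a rough ceiling for the original model's memory needs, which the announcement does not state directly. The short Python sketch below backs that number out. It assumes the "35 percent" and "17 gigabytes" figures refer to the same workload and treats 17 GB as an upper bound; the function and variable names are illustrative and do not come from Tencent.

```python
def implied_original_vram_gb(lite_vram_gb: float, reduction: float) -> float:
    """Back out the original requirement from the reduced one.

    lite = original * (1 - reduction)  =>  original = lite / (1 - reduction)
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return lite_vram_gb / (1.0 - reduction)


# Figures reported in the article: under 17 GB after a cut of about 35 percent.
# Both are approximate, so the result is an estimate, not an official number.
LITE_VRAM_GB = 17.0
REDUCTION = 0.35

original_upper_bound = implied_original_vram_gb(LITE_VRAM_GB, REDUCTION)
print(f"Implied original requirement: under about {original_upper_bound:.1f} GB")
```

With these inputs the estimate comes out at roughly 26 GB for the original model.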

Like the original, 1.0-Lite is open source and available via the interactive sceneTo3D demo, GitHub, and Hugging Face. A technical report is available on arXiv.

Original article from July 28, 2025:

Tencent releases Hunyuan World Model 1.0 as an open-source AI for 3D scene generation

Tencent has released Hunyuan World Model 1.0, an open-source generative AI model that creates 3D virtual scenes from text or image prompts.

The company says it's the first open-source model designed for standard graphics pipelines, making it compatible with game engines, VR platforms, and simulation tools. The goal is to help creators move quickly from concept to 3D content without running into proprietary barriers.

A key feature is the model's ability to separate objects within a scene, letting users move or edit elements like cars, trees, or furniture individually. The sky is also isolated and can be used as a dynamic lighting source to help with realistic rendering and interactive experiences.

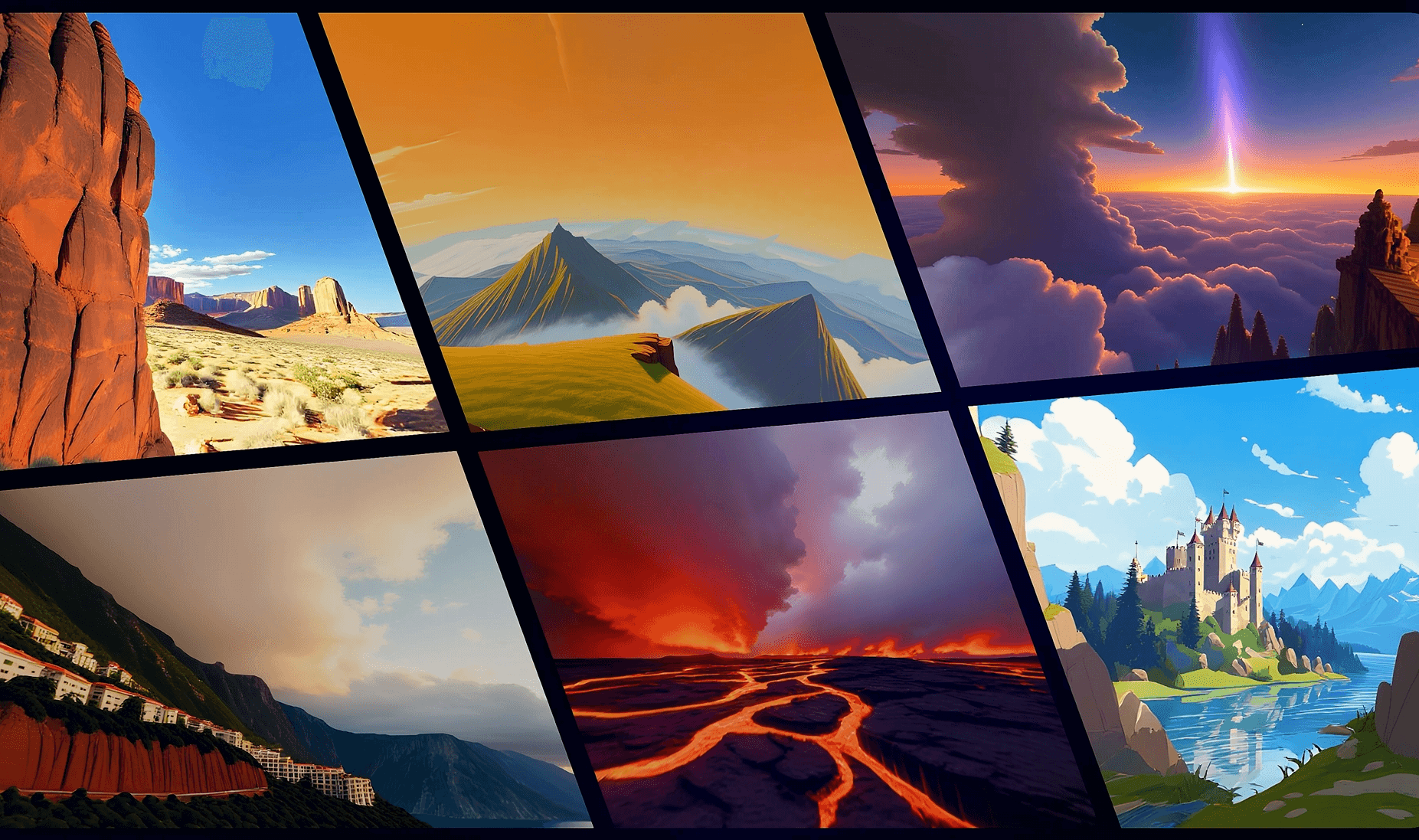

360-degree panoramas with limited exploration

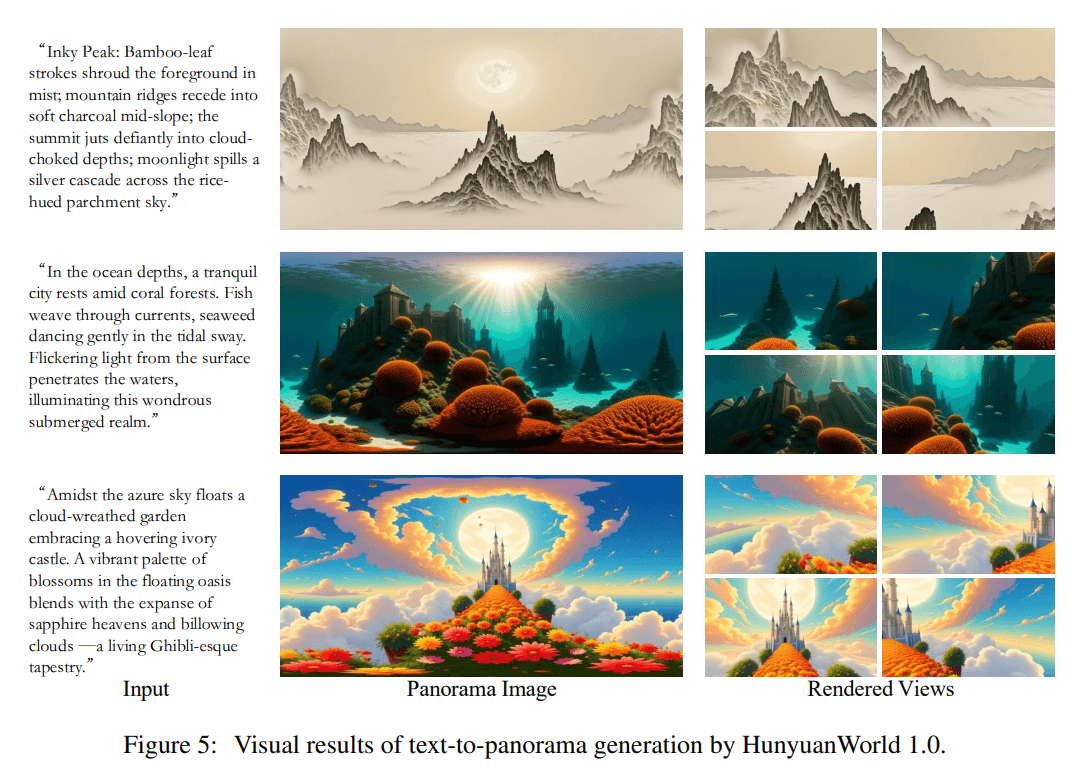

Hunyuan World Model 1.0 combines panoramic image generation with hierarchical 3D reconstruction. It supports two main input types: text-to-world and image-to-world. The generated scenes can be exported as mesh files and, according to Tencent, integrate smoothly into standard 3D workflows.

In practice, the model doesn't produce fully explorable 3D worlds like those in modern video games. Instead, users get interactive 360-degree panoramas. They can look around and navigate to a limited extent, but free movement is restricted. For more advanced camera movement or longer, consistent 3D video sequences, the Voyager add-on is required, as detailed in a recent research paper.


Tencent sees these visualizations as a starting point for VR, but the model is also suitable for a wide range of interactive and creative applications. Its text interpretation is designed to map complex scene descriptions into virtual spaces accurately, and it supports various compression and acceleration techniques for web and VR environments. The architecture uses a generative, semantically layered approach, producing scenes in a range of styles for creative and design uses.

Hunyuan World Model 1.0 is available as open source on GitHub and Hugging Face. An interactive demo is also available at sceneTo3D, but access requires a China-compatible login.

The release is part of Tencent's broader open-source push in AI. Alongside Hunyuan World Model 1.0, the company has released Hunyuan3D 2.0 for textured 3D model generation, HunyuanVideo for AI-powered video, and the Hunyuan-A13B language model with dynamic reasoning.
