What is AI hardware? Approaches, advantages and examples

Artificial intelligence algorithms are becoming more widespread in various industries and applications, but their performance is often limited by the processing power of traditional CPUs.

To improve the capabilities of AI algorithms, companies are turning to specialized hardware such as graphics processing units (GPUs), originally built for computer graphics, and dedicated AI accelerators like Google's Tensor Processing Units (TPUs). These processors can give AI algorithms a significant speed boost, which in turn can lead to better results.

AI-optimized alternatives to the standard CPU

The central processing unit (CPU) of a computer receives and executes instructions. It is the heart of the computer, and its clock rate is one of the factors that determine how quickly it can perform calculations.

For tasks that require frequent or intensive computation, such as AI algorithms, specialized hardware can improve efficiency. Such hardware does not typically change the algorithms or the input data; instead, it is designed to handle large amounts of data and deliver more computing power.

AI algorithms often rely on processors that can perform many calculations in parallel, which speeds up the training of new AI models. This is especially true of graphics processing units (GPUs): they were originally designed for graphics calculations, but they have proven effective for many AI tasks because image processing and neural networks rely on similar operations, above all large matrix and vector calculations. To optimize them for AI, these processors can also be adapted to handle large amounts of data efficiently.
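The independence that makes this parallelism possible can be sketched with a single dense neural-network layer. The minimal Python example below is an illustration under simplifying assumptions (a tiny hypothetical weight matrix, no bias, no activation function), and the helper names are hypothetical: each output is a weighted sum that does not depend on any other output, so all outputs can be computed at the same time. Python threads do not actually speed up this arithmetic because of CPython's global interpreter lock; the thread pool only shows that the work splits cleanly, which is what a GPU exploits with thousands of hardware threads.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights_row, inputs):
    """Weighted sum for one output neuron (one row of the weight matrix)."""
    return sum(w * x for w, x in zip(weights_row, inputs))


def dense_layer_sequential(weights, inputs):
    """Compute the outputs one after another."""
    return [neuron_output(row, inputs) for row in weights]


def dense_layer_parallel(weights, inputs, workers=4):
    """Hand each output to a separate worker; no output depends on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map keeps the results in the same order as the rows.
        return list(pool.map(neuron_output, weights, [inputs] * len(weights)))


# Hypothetical 3x3 layer and input vector.
weights = [
    [0.5, -1.0, 2.0],
    [1.5, 0.0, -0.5],
    [-2.0, 1.0, 1.0],
]
inputs = [1.0, 2.0, 3.0]

print(dense_layer_sequential(weights, inputs))  # [4.5, 0.0, 3.0]
print(dense_layer_parallel(weights, inputs))    # [4.5, 0.0, 3.0]
```

Both functions return the same result; only the scheduling of the independent weighted sums differs.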

AI hardware for different requirements

The hardware requirements for training AI models and for using them can differ significantly. Training, in which a model learns patterns from large amounts of data, typically requires more processing power and benefits from parallelization. Once the model has been trained, running it (inference) usually requires less computing power.
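A rough calculation shows why training is so much more demanding than inference. The sketch below relies on two common rules of thumb rather than figures from this article: a dense layer needs about two floating-point operations (one multiply, one add) per weight and example, and the backward pass used in training costs roughly twice as much as the forward pass. The function names, layer size, dataset size and number of epochs are all hypothetical.

```python
def forward_flops(n_in, n_out):
    """Approximate FLOPs for one example through a dense layer:
    one multiply and one add per weight (rule of thumb)."""
    return 2 * n_in * n_out


def training_flops(n_in, n_out, examples, epochs):
    """Approximate training cost: a forward pass plus a backward pass that
    costs roughly twice as much, repeated for every example and epoch."""
    return 3 * forward_flops(n_in, n_out) * examples * epochs


# Hypothetical layer and dataset sizes.
inference = forward_flops(4096, 4096)
training = training_flops(4096, 4096, examples=1_000_000, epochs=10)

print(f"inference, one example: {inference:.2e} FLOPs")
print(f"training, 1M examples x 10 epochs: {training:.2e} FLOPs")
print(f"training / inference: {training / inference:.1e}")
```

Under these assumptions, training this one layer costs tens of millions of times more than a single inference call, which is why the two workloads can justify different hardware.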

To meet these varying needs, some CPU manufacturers are developing AI inference chips specifically for running trained models, although fully trained models can also benefit from parallel architectures.

Traditionally, PCs have kept memory and the CPU separate. GPU manufacturers, however, have placed high-speed memory directly on the graphics card to improve the performance of AI algorithms. This allows compute-intensive AI models to be loaded into GPU memory and executed there, which saves the time otherwise spent transferring data and speeds up the arithmetic.
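A back-of-the-envelope sketch illustrates the effect. The bandwidth figures below are assumed round numbers for illustration, not specifications of any particular product: a host-to-GPU link of 32 GB/s, on-board GPU memory of 2,000 GB/s, and a hypothetical 10 GB model whose weights are read once per pass.

```python
GB = 10**9  # bytes


def transfer_seconds(num_bytes, bandwidth_bytes_per_s):
    """Time to move a block of data once at a given sustained bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


model_bytes = 10 * GB     # hypothetical model size
host_link_bw = 32 * GB    # assumed host-to-GPU link bandwidth, bytes per second
onboard_bw = 2000 * GB    # assumed on-board GPU memory bandwidth, bytes per second

# Reading all weights once per pass: across the link vs. from on-board memory.
over_link = transfer_seconds(model_bytes, host_link_bw)
on_board = transfer_seconds(model_bytes, onboard_bw)

print(f"across the host link: {over_link:.4f} s per pass")
print(f"from on-board memory: {on_board:.4f} s per pass")
print(f"speed-up: {over_link / on_board:.1f}x")
```

With these assumed numbers, keeping the model in on-board memory makes each pass over the weights about 60 times faster; real ratios depend on the hardware involved.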

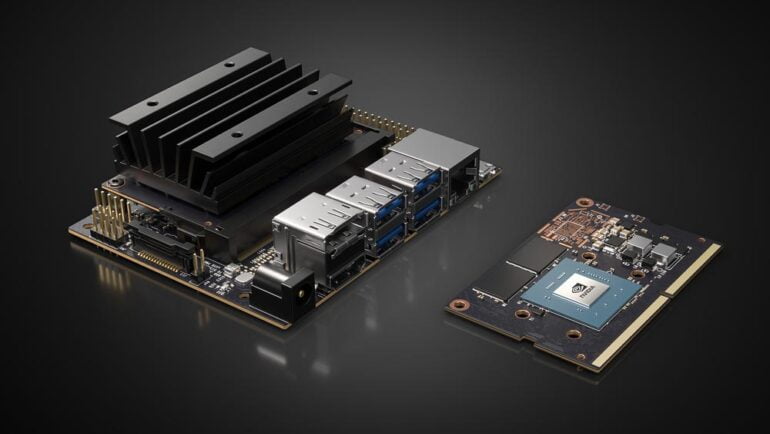

In addition to traditional CPUs and GPUs, there are also compact AI chips designed for devices such as smartphones and automation systems. These chips can perform tasks such as speech recognition more efficiently and with lower power consumption. Some researchers are also exploring analog electrical circuits for AI computation, which promise faster and more power-efficient computing in a smaller space, though usually at lower numerical precision than digital chips.
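One widely studied analog design is the resistive crossbar array, sketched here in idealized form. Each weight is stored as the conductance of a device at a row/column crossing, inputs are applied as voltages on the rows, each device passes a current equal to conductance times voltage (Ohm's law), and the currents on a shared column add up (Kirchhoff's current law). The column currents therefore form a matrix-vector product, the core operation of neural networks. The function name, the values and the Gaussian noise model are hypothetical simplifications; real circuits have further non-idealities that this sketch ignores.

```python
import random


def crossbar_currents(conductances, voltages, noise_std=0.0, seed=None):
    """Idealized analog crossbar.

    conductances[i][j]: conductance (siemens) of the device joining input
    row i and output column j; it plays the role of a weight.
    voltages[i]: voltage (volts) applied to row i; it plays the role of an input.
    Returns one output current (amperes) per column.
    """
    rng = random.Random(seed)
    currents = []
    for j in range(len(conductances[0])):
        # Ohm's law gives each device's current (I = G * V);
        # Kirchhoff's current law adds the currents on a shared column.
        total = sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        if noise_std > 0:
            # Crude stand-in for analog imprecision (hypothetical noise model).
            total += rng.gauss(0.0, noise_std)
        currents.append(total)
    return currents


# Hypothetical 2x2 array: conductances in siemens, input voltages in volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]

ideal = crossbar_currents(G, V)
noisy = crossbar_currents(G, V, noise_std=1e-8, seed=0)
print(ideal)  # close to [7e-07, 1e-06]
print(noisy)  # slightly perturbed values
```

The physics performs the multiply-and-add in a single step, which is where the speed and efficiency come from; the noise term hints at why the results are less precise than digital arithmetic.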

Examples of AI hardware

Graphics processors (GPUs) remain the most common hardware for AI workloads, especially machine learning. Thanks to their extensive parallelization, described above, GPUs can in some cases perform these computations several hundred times faster than CPUs.

As demand for machine learning continues to grow, tech companies are developing specialized hardware architectures that prioritize accelerating machine learning algorithms over traditional graphics capabilities.

Nvidia, the market leader in this field, offers products such as its A100 and H100 data center GPUs, which are used in supercomputers around the world. Other companies, such as Google, are also building their own hardware specifically for AI applications; Google's Tensor Processing Units (TPUs) are now in their fourth generation.

Beyond these general-purpose AI chips, there are specialized chips designed for specific parts of the ML process, for example chips that handle large amounts of data, or chips for devices with limited space or battery life, such as smartphones.

In addition, traditional CPUs are being adapted to handle ML tasks better, for example by supporting lower-precision calculations that run faster at the cost of some accuracy. Finally, many cloud service providers let customers run compute-intensive operations in parallel across multiple machines and processors.
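One common way to trade accuracy for speed is to compute with lower-precision numbers. The minimal sketch below assumes a simple symmetric 8-bit integer scheme, one illustrative technique rather than any particular vendor's implementation: each 32-bit float is replaced by an 8-bit integer plus one shared scale factor, which cuts memory use to a quarter and enables fast integer arithmetic on hardware that supports it, at the cost of a rounding error of up to half a scale step per value. The function names and sample values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale factor; fall back to 1.0 if every value is zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map the 8-bit integers back to approximate floats."""
    return [x * scale for x in q]


weights = [0.42, -1.37, 0.05, 2.9, -0.61]  # hypothetical values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale = {scale:.5f}, largest rounding error = {max_error:.5f}")
```

The largest value maps to 127 and every other value is rounded to the nearest multiple of the scale, so the error stays bounded while each number needs only a quarter of the storage.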

Key AI hardware manufacturing companies

Most GPUs for machine learning are produced by Nvidia and AMD. Nvidia, for example, speeds up the matrix calculations used to train ML models with specialized units called "tensor cores".

With the Transformer Engine, the H100 cards also support mixing different numerical precisions. AMD takes its own approach to adapting GPUs to the requirements of machine learning with its CDNA-2 architecture and the upcoming CDNA-3, which is due for release in 2023.
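The general idea behind mixing precisions can be illustrated with Python's built-in half-precision support in the `struct` module (format code `e`). This is a generic sketch, not how Nvidia's Transformer Engine works internally: the input values are stored in 16 bits in both cases, but one version also rounds the running sum to 16 bits after every step, while the other accumulates in 64 bits. With a 16-bit accumulator, small additions are eventually rounded away entirely. The function names and values are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float (64-bit) to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16_accumulator(values):
    """Keep the running sum in 16 bits: round after every addition."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + v)
    return acc


def sum_mixed_precision(values):
    """Inputs are 16-bit values, but the running sum stays in 64 bits."""
    acc = 0.0
    for v in values:
        acc += v
    return acc


values = [to_fp16(0.0001)] * 10_000  # exact sum is about 1.0
print(sum_fp16_accumulator(values))  # 0.25: the 16-bit sum stops growing
print(sum_mixed_precision(values))   # about 1.0002
```

Storing values in low precision saves memory and bandwidth, while keeping sensitive intermediate results such as sums in higher precision preserves accuracy; balancing the two is the point of mixed-precision hardware.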

Google continues to lead the way in pure ML acceleration with its TPUs, which can be rented through the Google Cloud platform and come with a suite of tools and libraries.

Google relies on TPUs for its machine learning-powered services, including features of its Pixel smartphones, where TPU-based chips handle tasks such as speech recognition, live translation, and image processing locally.

Meanwhile, other major cloud providers such as Amazon, Oracle, IBM, and Microsoft rely on GPUs or other AI hardware. Amazon has even developed its own Graviton chips, which prioritize speed over accuracy. Front-end applications such as Google Colab, Microsoft's Machine Learning Studio, IBM's Watson Studio, and Amazon's SageMaker let users take advantage of specialized hardware without necessarily being aware of it.

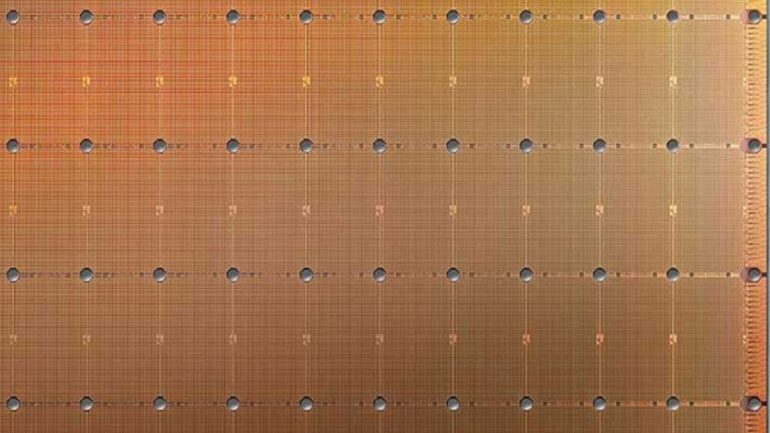

Startups are also entering the AI chip market, among them California-based D-Matrix, which produces chips for in-memory computing (IMC) that bring arithmetic calculations closer to the data stored in memory.

The startup Untether uses an approach it calls "at-memory computing" to reach a computing power of two petaops (2 × 10^15 operations per second) on a single accelerator card. Instead of moving data to a distant processor, this approach places the processing elements directly next to the memory cells.

Other companies, such as Graphcore, Cerebras, and Celestial, are also exploring in-memory computing, alternative chip designs, and light-based logic systems for faster AI calculations.

- Get the latest AI news from The Decoder.