Early users report real-world performance of Apple Intelligence in iOS 18.1

Apple is introducing its AI features, called "Apple Intelligence," with the first developer beta of iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1.

This is an unusual move, since iOS 18 itself has not been officially released yet. Given the significant changes that generative AI brings to the operating system, Apple likely wants a longer testing period for itself and for developers.

The developer beta already includes some of the features announced at this year's WWDC, but not all of them. According to recent leaks, the complete rollout is expected in the first half of 2025.

Users can now test writing aids for rephrasing, proofreading, and summarizing texts system-wide in Apple's apps and most third-party apps.

Siri has also been updated with a new look and now allows seamless switching between voice and text input while maintaining context across different requests.

Other new features include automatic call recording and transcription, email prioritization and summarization, and natural language photo search.

Some announced features, such as ChatGPT integration, Genmoji, and image generation, are still missing from this early beta. Apple Intelligence is also not yet available in the EU due to regulatory concerns.

Even those who install the early, unstable developer beta cannot try the AI features right away. Parts of Apple Intelligence run in a "private cloud," and because of high server demand, access to the beta is granted through a waitlist. It requires an iPhone 15 Pro or iPhone 15 Pro Max, an iPad with an M1 chip or later, or a Mac with an M1 chip or later.

Early performance reports

Early reports suggest that Apple Intelligence is relatively slow but energy-efficient. Rephrasing a short email takes about ten seconds on-device, as shown in the following video by indie developer Zaid Mukaddam.

Video: X/@zaidmukaddan

Tech journalist Max Weinbach reports that the system draws at most 6 watts when rephrasing text and 1–2 watts when summarizing emails. It handles longer texts of around 1,000 words without issues.

Video: X/@MaxWinebach

The redesigned Siri shows a futuristic glow around the edges of the screen when activated, but its responses have not fundamentally changed yet.

Video: X/@VadimYuryev

By developing its own AI system with deep OS integration, Apple may be able to reduce users' reliance on third-party AI tools. Apple is focusing on tasks suited to its smaller model and leaving more complex queries to services like ChatGPT. This move could pose a particular challenge to existing grammar and writing tools such as Grammarly and DeepL Write.

Technical paper provides insight into Apple Intelligence

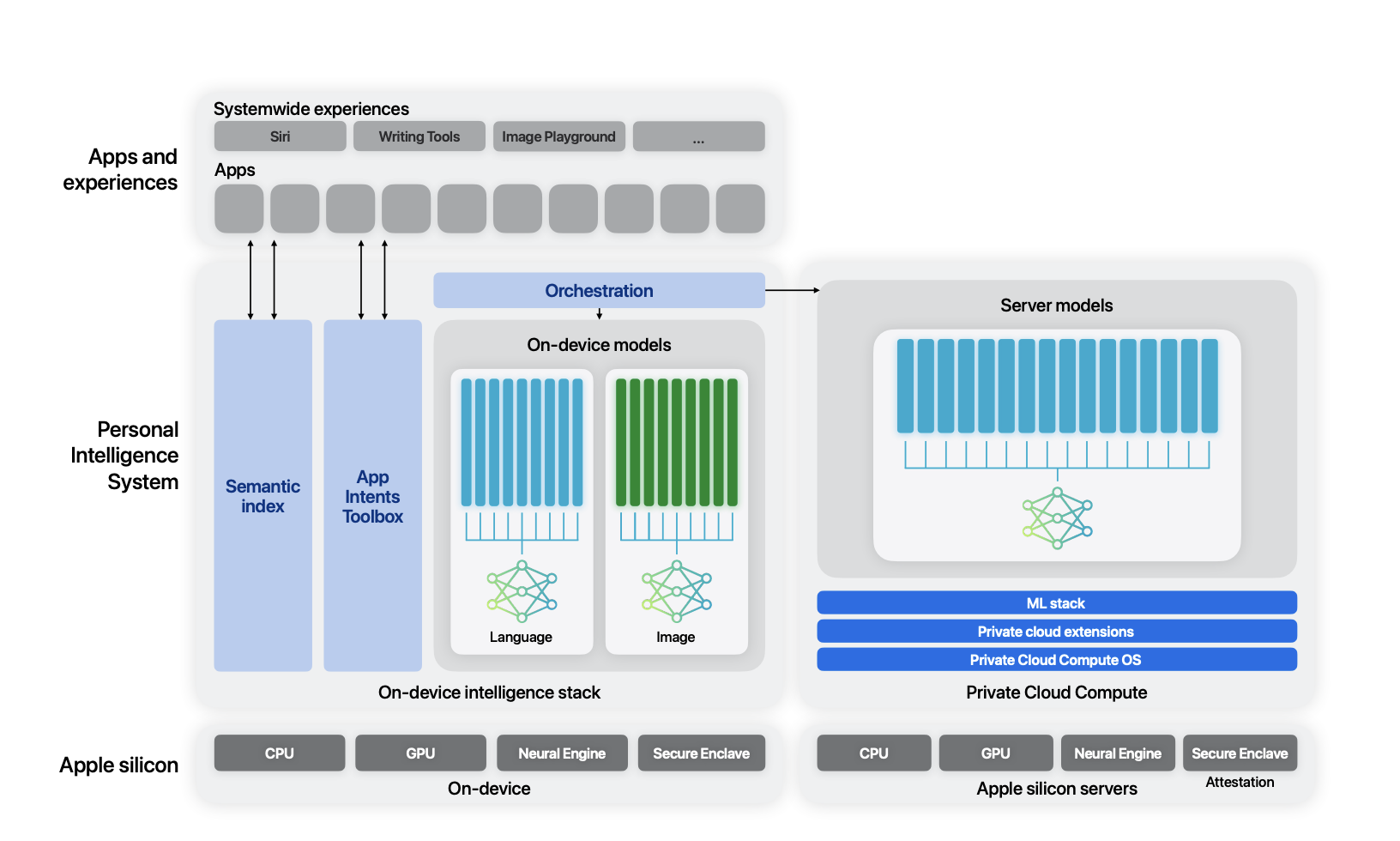

Apple has published a technical report on Apple Intelligence. The system consists of two Apple Foundation Models (AFM): AFM-on-device, a model with about 3 billion parameters designed to run efficiently on the device, and AFM-server, a larger server-based language model running on Apple's private cloud infrastructure.

Training data comes from licensed datasets, curated open-source data, and publicly available web crawl data. Apple says it did not use any private user data.
