Deepseek's Janus Pro is a good upgrade, but it won't fuel a US AI 'Sputnik crisis'

Deepseek has completely overhauled its multimodal AI system Janus. The new version, Janus Pro, improves on its predecessor through refined training methods, expanded datasets, and larger model sizes.

The team made several key improvements to their training approach for more efficient data use. They also significantly expanded their training data, adding about 90 million new examples for multimodal understanding from various sources, including YFCC caption datasets and specialized collections for understanding tables, diagrams, memes, and documents.

For image generation, they incorporated about 72 million synthetic training examples, some created using Midjourney. This brought the ratio of real to synthetic data to an even 1:1, according to their paper.

One of the biggest changes is the introduction of a larger model size. While the original 1B version remains available, there's now a 7B version that shows notably better performance in both understanding and generating images.

In benchmark testing, the larger Janus Pro-7B scored 79.2 on MMBench for multimodal understanding, well above its predecessor's 69.4. While this marks significant progress, some similar-sized competing models still perform better.

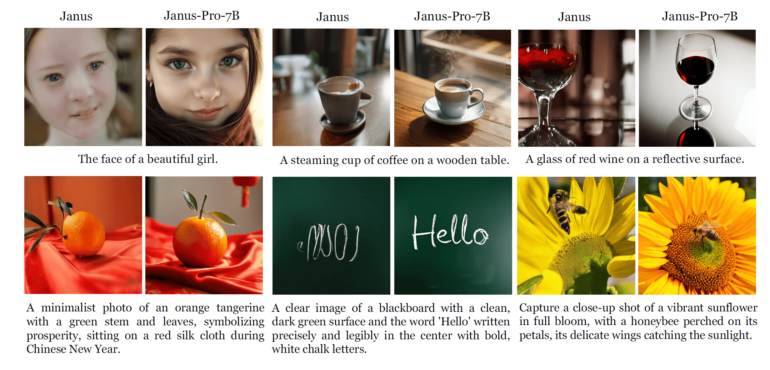

Higher quality in image generation

The most noticeable improvements show up in prompt following. Where the earlier version struggled with brief instructions and inconsistent image quality, Janus Pro can now create detailed, consistent images from short prompts and handle complex instructions more effectively, the team says.

These improvements show up in the numbers: Janus Pro scored 80% accuracy on GenEval, compared to its predecessor's 61%. It even outperformed DALL-E 3 (67%) and Stable Diffusion 3 Medium (74%) on this metric - though these benchmarks don't tell the whole story about image quality, where both competitors still typically produce better results and better models are available.

One significant limitation holds Janus Pro back: both input and output images are restricted to 384 x 384 pixels. This affects quality, especially for fine details like faces, and makes it harder for the system to understand text in images. The team suggests future versions with higher resolution could solve these issues.

While Deepseek hasn't confirmed whether these improvements will appear in a future Janus release, it seems likely. The company recently gained attention with its R1 model - some called it the AI "Sputnik moment" for the US - and attracted new customers. A capable multimodal model could help them compete more effectively with OpenAI's ChatGPT, assuming they have the necessary computing infrastructure.

More information, the code, and the model are available on GitHub and HuggingFace. There is also a demo available.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.