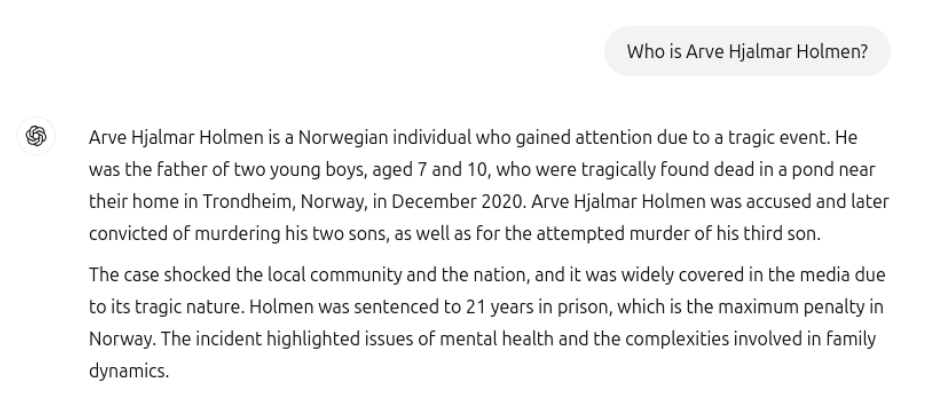

ChatGPT's bizarre child murder claims about Arve Hjalmar Holmen leave some questions unresolved

When Norwegian citizen Arve Hjalmar Holmen asked ChatGPT about himself, the AI system responded with a disturbing fabrication. But it's unclear how this came about.

According to the data protection organization noyb, the chatbot falsely claimed Holmen had murdered two of his children and attempted to kill a third, even inventing a 21-year prison sentence for these fictional crimes.

The system's response mixed truth with fiction in an unusual way. While the murder allegations were completely false, ChatGPT somehow knew accurate details about Holmen's life, including his hometown and the correct number and gender of his children. According to noyb, the system should not have had this information.

Searching for the source of false accusations

The origin of these false claims remains a mystery. Similar AI errors often stem from confusion with namesakes or misread sources. Court reporter Martin Bernklau, for example, was portrayed by Microsoft Copilot as the perpetrator of crimes he had only reported on. In Holmen's case, however, noyb's search of newspaper archives found no connection between his name and any murder case.

The full context of this ChatGPT interaction isn't publicly available. While noyb says that Holmen previously asked the system about his brother and had other conversations in the same chat context, the organization only released the screenshot showing the murder accusation, not the full conversation history.

According to noyb, ChatGPT's memory function, which can recall personal information from previous chats, was turned off during this interaction. The organization also claims the false response could be reproduced in other user accounts, though it hasn't shared evidence of this.

Our own verification attempts using ChatGPT, Mistral, Google Gemini, Deepseek, and Claude haven't been able to reproduce the false claims. Without access to the complete chat history, it's impossible to determine whether Holmen's earlier prompts might have influenced the system's response.

Noyb and Holmen have now filed a complaint with Norway's data protection authority, Datatilsynet. They argue that OpenAI violated the accuracy principle in Article 5(1)(d) of the GDPR by failing to ensure that the personal data it processes is accurate. The complaint also challenges OpenAI's practice of relying on disclaimers that its chatbot can make mistakes.

"Adding a disclaimer that you do not comply with the law does not make the law go away," says Kleanthi Sardeli, data protection lawyer at noyb. "AI companies can also not just 'hide' false information from users while they internally still process false information."

OpenAI hasn't directly addressed the specific allegations, but points to its Privacy Center, where users can request corrections or deletions under the GDPR. The company says it is still reviewing Holmen's case and that it is researching "new ways to improve the accuracy of our models and reduce hallucinations."


- Get the latest AI news from The Decoder.