Google's "Robotics Transformer 1" to usher in the era of large robot models

Key Points

- Advances in large AI models for language and images come from huge data sets and efficient model architectures.

- According to Google, robotics lacked both of these prerequisites. The company is introducing a large real-world dataset for robot training and the Robotics Transformer 1 (RT-1) robot model.

- RT-1 outperforms other approaches such as DeepMind's Gato in real-world tests and can be combined with Google's SayCan.

Google shows a new AI model with a large dataset for real-time robot control.

Recent successes in the development of AI systems that process images or natural language are based on a common approach: large and diverse datasets processed by powerful and efficient models.

Generative AI models for text or images, such as GPT-3 and DALL-E, obtain their training data from the Internet and rely on specialized datasets only for fine-tuning. OpenAI, for example, uses human feedback datasets to better adapt a large AI model to human needs.

Robotics lacks gigantic data sets like those that exist for texts and images. Masses of robot data would have to be collected from autonomous operation or through human teleoperation, which makes them expensive and difficult to create. Furthermore, there is not yet an AI model that could learn from such data and generalize in real time.

Some researchers are instead relying on training AI robots in simulations. Others are trying to have AI learn from Internet videos.

Google's Robotics Transformer 1 learns from different modalities

Google now introduces the Robotics Transformer 1 (RT-1), an AI model for robot control. The model is accompanied by a large real-world dataset for robot training.

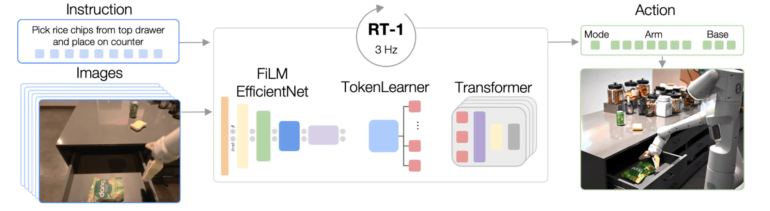

The model takes text instructions and images as input. A FiLM EfficientNet model transforms them into tokens, which an additional method (TokenLearner) then compresses. The compressed tokens are forwarded to the Transformer, which outputs the commands to the robot. According to Google, this makes the model fast enough to control robots in real time.
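To make the two preprocessing steps more concrete, here is a minimal sketch in plain Python. It is not Google's implementation: the function names `film` and `compress_tokens`, the tiny vectors, and the hand-picked values for `gamma`, `beta`, and `score_rows` are all assumptions for illustration. In a trained model, those values would come from learned layers rather than being written by hand.

```python
import math

def film(features, gamma, beta):
    """Feature-wise linear modulation: scale and shift every channel of every token.

    gamma and beta stand in for values a network would derive from the text instruction.
    """
    return [[g * x + b for x, g, b in zip(token, gamma, beta)] for token in features]

def softmax(logits):
    """Numerically stable softmax over a list of numbers."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def compress_tokens(tokens, score_rows):
    """Pool N tokens into K tokens, one weighted average per row of scores.

    This mirrors the general idea of attention-style token pooling; the scores
    here are hypothetical and would normally be predicted by a learned module.
    """
    dim = len(tokens[0])
    compressed = []
    for row in score_rows:
        weights = softmax(row)
        pooled = [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        compressed.append(pooled)
    return compressed

# Four made-up image tokens with two channels each.
image_tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
gamma = [2.0, 0.5]   # hypothetical per-channel scale from the instruction
beta = [0.0, 1.0]    # hypothetical per-channel shift from the instruction

conditioned = film(image_tokens, gamma, beta)

# Two score rows: the first averages all tokens, the second focuses on token 0.
score_rows = [[0.0, 0.0, 0.0, 0.0], [10.0, 0.0, 0.0, 0.0]]
compact = compress_tokens(conditioned, score_rows)

print(len(conditioned), "tokens in,", len(compact), "tokens out")
```

The point of the compression step is that the Transformer has to attend over fewer tokens per image, which is one plausible reason the pipeline can run quickly enough for robot control.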

Google's RT-1 learns from more than 100,000 examples

To train RT-1, Google used a large dataset of 130,000 examples covering more than 700 robotic tasks, such as picking up, depositing, and opening objects. The company collected the data over 17 months with 13 robots from Everyday Robots, a robotics company under Alphabet. The data includes the movements of the robots' joints and bases, camera footage, and text descriptions of the tasks.
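One way to picture a single training example is as a record that pairs a text description with a sequence of time steps. The sketch below is an assumed layout, not the actual dataset schema: the class names `Step` and `Episode` and all field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Step:
    """One time step of a demonstration (hypothetical field names)."""
    camera_frame: bytes           # encoded camera image
    joint_motion: List[float]     # movement of the robot's joints
    base_motion: List[float]      # movement of the robot's base

@dataclass
class Episode:
    """One demonstration of a task, described in text."""
    instruction: str
    steps: List[Step] = field(default_factory=list)

    def add_step(self, step: Step) -> None:
        self.steps.append(step)

# A toy episode with placeholder values.
episode = Episode(instruction="pick up the can")
episode.add_step(Step(camera_frame=b"\x00", joint_motion=[0.1, -0.2], base_motion=[0.0, 0.0]))
episode.add_step(Step(camera_frame=b"\x01", joint_motion=[0.0, 0.3], base_motion=[0.05, 0.0]))

print(episode.instruction, "with", len(episode.steps), "steps")
```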

After training, Google's team compared RT-1 with other methods on a range of seen and unseen tasks and tested how robustly each model coped with different environments.

RT-1 clearly outperformed the other methods, including DeepMind's Gato, in all scenarios. Google also experimented with additional data collected from a different robot model. The results of these experiments suggest that RT-1 can learn new skills from the training data of other robots, Google writes.

Google's RT-1 improves SayCan

The team also checked whether RT-1 could improve the performance of Google's SayCan. Indeed, the combined system performed nearly 20 percent better and was able to maintain that success rate even in a more complicated kitchen environment.

The RT-1 Robotics Transformer is a simple and scalable action-generation model for real-world robotics tasks. It tokenizes all inputs and outputs, and uses a pre-trained EfficientNet model with early language fusion, and a token learner for compression. RT-1 shows strong performance across hundreds of tasks, and extensive generalization abilities and robustness in real-world settings.
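Tokenizing outputs means that a continuous robot command has to be mapped to a discrete symbol and back again. A common way to do this is uniform binning, sketched below. The function names, the value range of -1.0 to 1.0, and the bin count of 256 are assumptions for illustration and are not taken from this article.

```python
def action_to_token(value, low, high, num_bins=256):
    """Map a continuous action value to an integer bin index in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)          # keep the value inside the range
    fraction = (clipped - low) / (high - low)     # position within the range, 0.0 to 1.0
    return min(int(fraction * num_bins), num_bins - 1)

def token_to_action(token, low, high, num_bins=256):
    """Map a bin index back to the center of its bin."""
    width = (high - low) / num_bins
    return low + (token + 0.5) * width

# Round trip for one hypothetical action dimension.
token = action_to_token(0.25, -1.0, 1.0)
recovered = token_to_action(token, -1.0, 1.0)

print("token:", token, "recovered value:", recovered)
```

The round trip loses at most half a bin width of precision, which is the price paid for letting the Transformer predict actions the same way a language model predicts words.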

The team hopes to scale up the number of robot skills more quickly in the future. To do this, it plans to let people with no experience in robotic teleoperation contribute to the training data set. It also aims to further improve the model's reaction time and its ability to retain context over time.

The code for RT-1 is available on GitHub.
