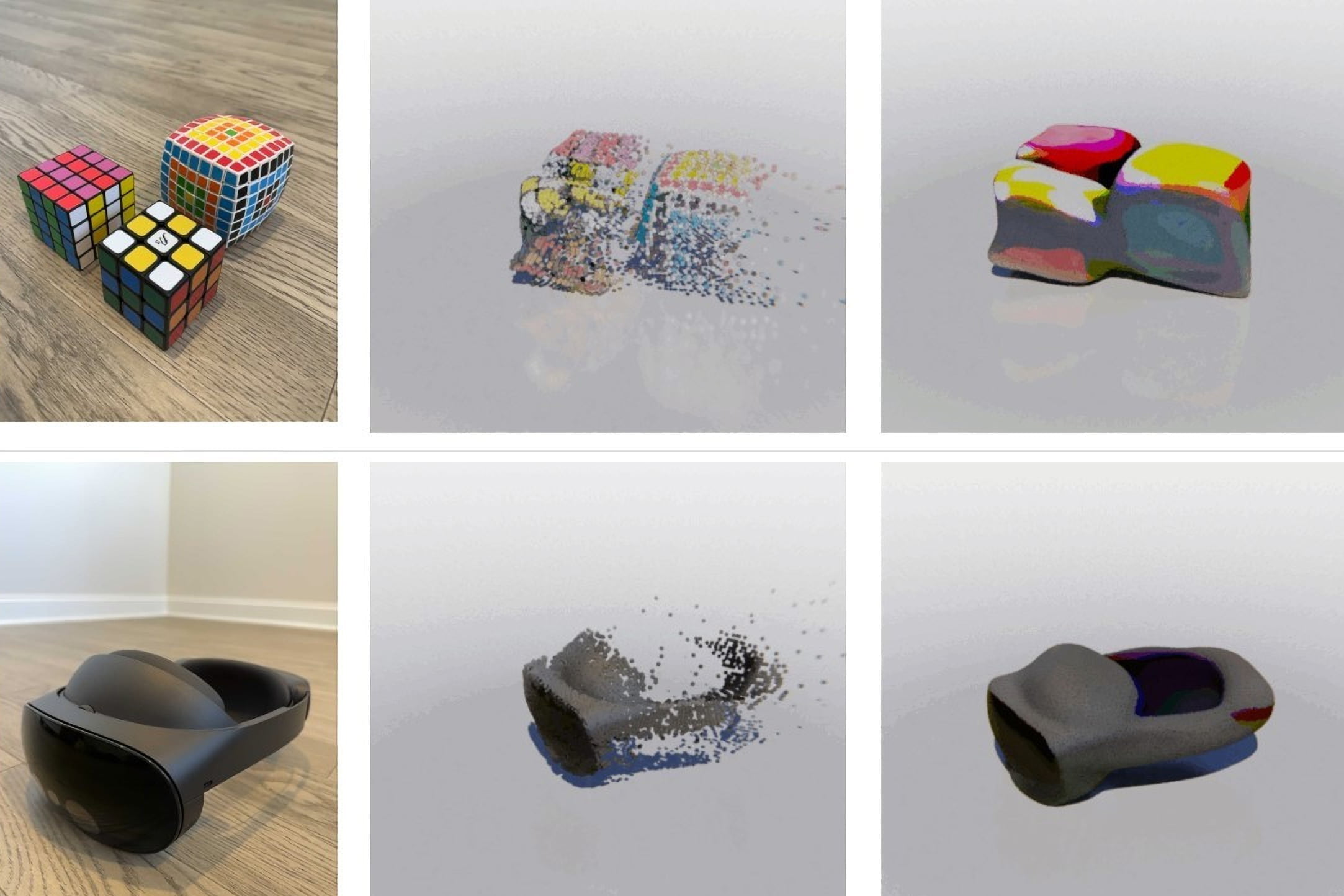

Meta MCC creates a 3D model from a single 2D image

Researchers at Meta present MCC, a method that can reconstruct a 3D model from a single image. The company sees applications in VR/AR and robotics.

AI models that rely on architectures like Transformers and massive amounts of training data have produced impressive language models like OpenAI's GPT-3 or, most recently, ChatGPT.

The breakthroughs in natural language processing brought a key insight: scaling often enables foundation models that leave previous approaches behind.

The prerequisites are domain-independent architectures such as transformers that can process different modalities, as well as self-supervised training with a large corpus of unlabeled data.

Those architectures, in combination with large-scale, category-independent learning, have been applied in fields outside language processing, such as image synthesis or image recognition.

Metas MCC brings scale to 3D reconstruction

Metas FAIR Lab now demonstrates Multiview Compressive Coding (MCC), a transformer-based encoder-decoder model that can reconstruct 3D objects from a single RGB-D image.

The researchers see MCC as an important step towards a generalist AI model for 3D reconstruction with applications in robotics or AR/VR, where a better understanding of 3D spaces and objects or their visual reconstruction opens up numerous possibilities.

While other approaches like NeRFs require multiple images, or train their models with 3D CAD models or other hard-to-obtain and therefore non-scalable data, Meta relies on reconstructing 3D points from RGB-D images.

Such images with depth information are now readily available due to the proliferation of iPhones with depth sensors and simple AI networks that derive depth information from RGB images. According to Meta, the approach is therefore readily scalable, and large data sets can be easily produced in the future.

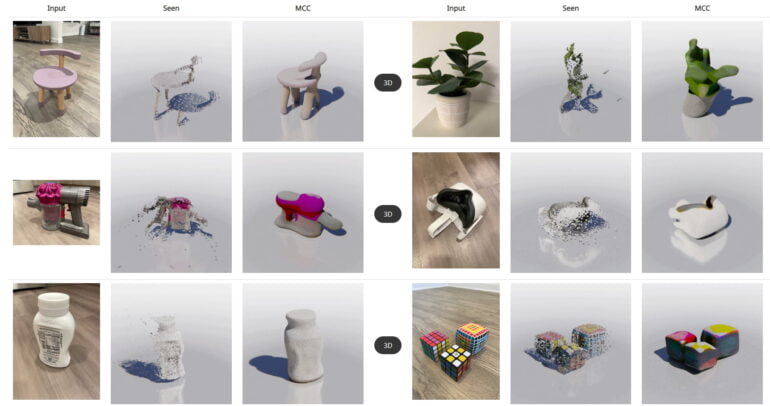

To demonstrate the advantages of the approach, the researchers are training MCC with images and videos with depth information from different datasets, showing objects or entire scenes from numerous angles.

During training, the model is deprived of some available views of each scene or object which are used as a learning signal. The approach is similar to the training of language or image models, where parts of the data are often masked as well.

Meta's 3D reconstruction shows strong generalizability

Meta's AI model shows in tests that it works and outperforms other approaches. The team also says MCC can handle categories of objects or entire scenes it hasn't seen before.

Video: Meta

In addition, MCC shows the expected scaling characteristics: Performance increases significantly with more training data and more diverse object categories. IPhone footage, ImageNet, and DALL-E 2 images can also be reconstructed into 3D point clouds with appropriate depth information.

We present MCC, a general-purpose 3D reconstruction model that works for both objects and scenes. We show generalization to challenging settings, including in the-wild captures and AI-generated images of imagined objects.

Our results show that a simple point-based method coupled with category-agnostic large-scale training is effective. We hope this is a step towards building a general vision system for 3D understanding.

From the paper

The quality of the reconstructions is still far from human understanding. However, with the relatively easy possible scaling of MCC, the approach could quickly improve.

A multimodal variant that enables text-driven synthesis of 3D objects, for example, could be only a matter of time. OpenAI is pursuing similar approaches with Point-E.

Numerous examples including 3D models are available on the MCC project page. The code is available on Github.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.