OpenAI suddenly remembers that copyright law exists after a few days of wild Sora videos

Key Points

- OpenAI has announced that Sora will enforce stricter copyright rules and give rights holders more control over how their characters are used. Some users are already seeing prompts containing terms like "South Park" blocked.

- The company plans to introduce an expanded control system allowing authors to specify in detail how their works may be used, along with a revenue-sharing model for rights holders when their content is generated by Sora users.

- OpenAI's rapid reversal suggests the company may have been testing how the public would react to normalizing AI-driven copyright infringement, but it remains unclear whether markets and courts will accept the monetization of such content.

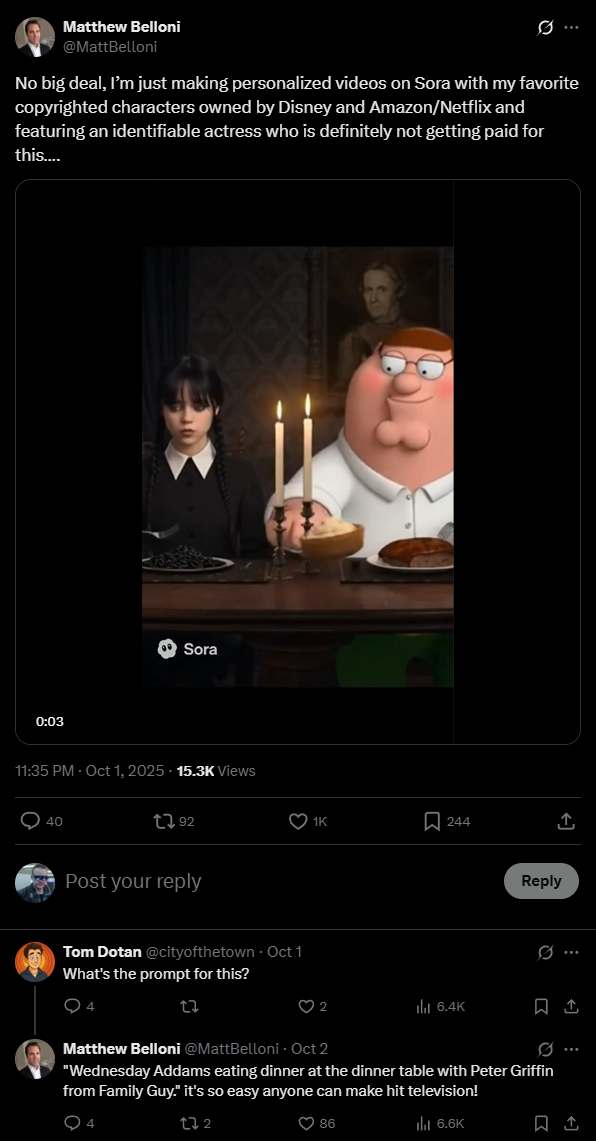

When OpenAI rolled out Sora just a few days ago, copyright wasn't much of a concern. That was likely by design.

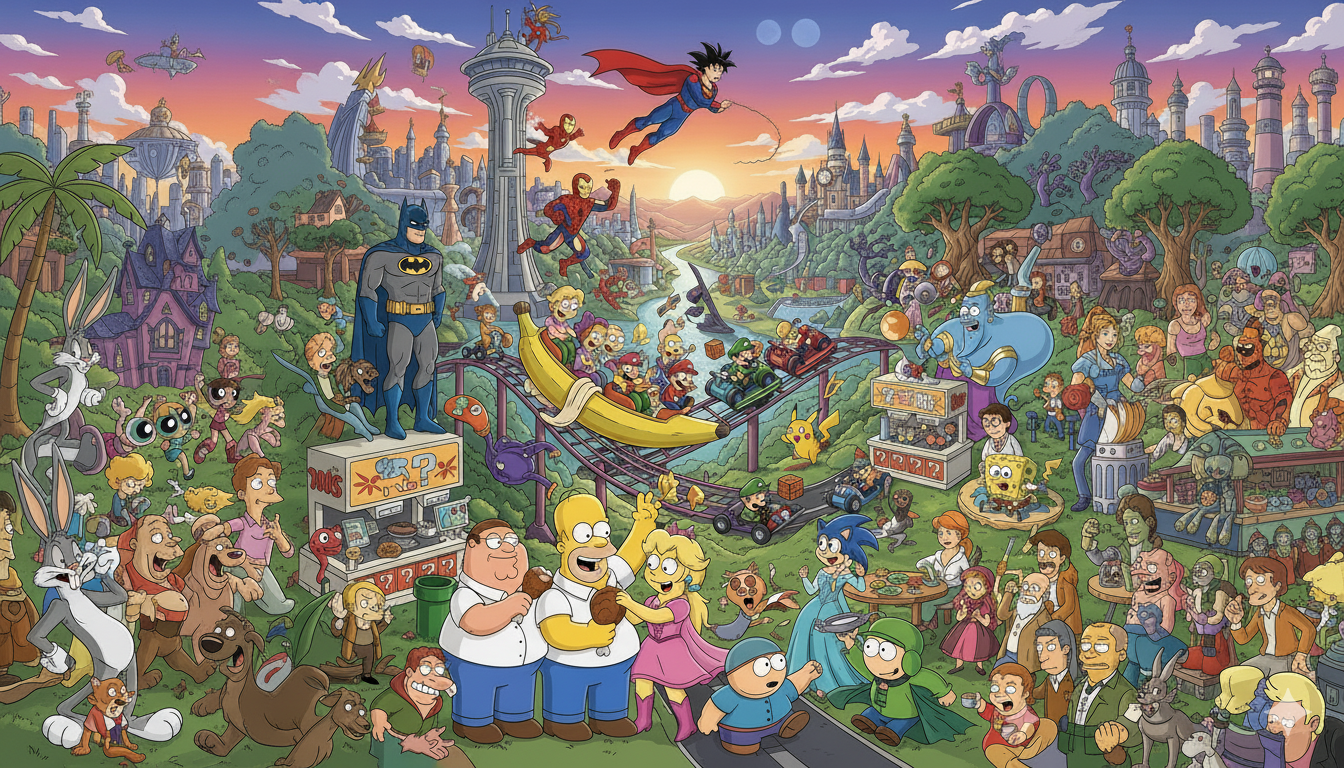

For example, the model could generate full episodes of "South Park" and plenty of other questionable content at scale. Compared to that, the earlier debate over Studio Ghibli-style images seems minor.

OpenAI's quick reversal suggests copyright holders responded fast and forcefully. The company isn't alone: Midjourney, Google, and many others are also facing legal pressure as lawsuits pile up across the AI sector. But Sora's audiovisual output and level of detail pushed copyright concerns to a new level, and its reach was immediate: the app shot to the top of Apple's App Store charts almost instantly.

OpenAI tightens restrictions

Over the past 24 hours, some users have noticed Sora tightening up: prompts tied to copyrighted material, such as "South Park," now appear to be blocked.

At the same time, OpenAI CEO Sam Altman posted that rights holders will soon have more control over the use of their characters in Sora. The company is working on an expanded control system, letting creators set detailed rules for how (or if) their characters can be generated.

"We are hearing from a lot of rightsholders who are very excited for this new kind of 'interactive fan fiction' and think this new kind of engagement will accrue a lot of value to them, but want the ability to specify how their characters can be used (including not at all)," Altman writes.

He also announced plans for a revenue model tied to video generation. The company says it wants to share revenue with rights holders when their content is generated in Sora, but hasn't shared any concrete details or timelines. "The exact model will take some trial and error to figure out, but we plan to start very soon," Altman writes.

Testing the limits of copyright

It's unlikely that OpenAI was caught off guard by Sora's copyright problems. The company had already weathered the Studio Ghibli-style images controversy and faces ongoing lawsuits over AI-generated content. OpenAI knows the legal landscape.

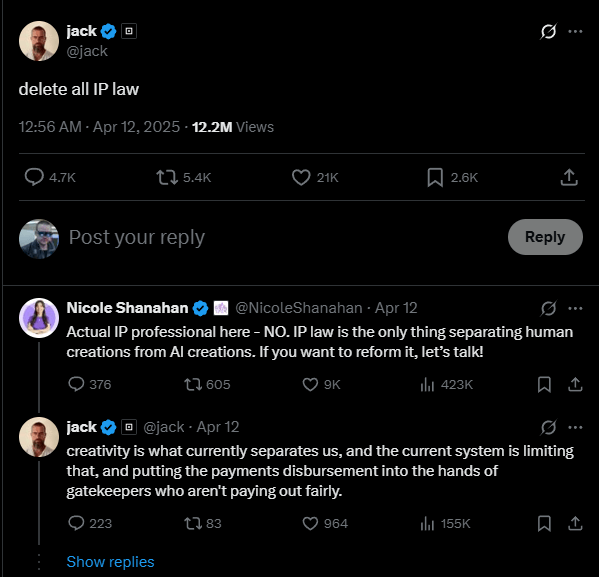

So why launch Sora with so few restrictions, only to reverse course just days later? One theory: OpenAI wanted to see how far it could go after the Ghibli incident. Some voices in the AI world openly push for abolishing or overhauling copyright law.

An app like Sora can help normalize copyright infringement: if everyone does it, enforcement becomes nearly impossible. The sheer number of potential lawsuits would overwhelm the courts. The big question before launch was how strong the backlash would be. Apparently, it was loud enough that OpenAI had to tighten the rules just days after Sora's debut.

Copyright and AI are complicated

There's another layer to all this: even if OpenAI wants to respect copyright and pay creators, actually making that work is a technical and legal challenge.

Back in April, Sam Altman said in a TED interview that it would be "cool" if artists could get paid when AI models use their style, picturing a voluntary system where creators opt in and earn a share of revenue. But Altman didn't offer any specifics, pointing instead to the technical problem of tracking influences, especially when several styles are blended in a single prompt. "How do you divvy up how much money goes to each one? These are like big questions," he said.

Altman also argued that all creativity builds on existing work, which undercuts the push for direct compensation. Meanwhile, a "Media Manager" tool promised in May 2024 to let artists opt out of AI training still hasn't launched.

It's clear that OpenAI could never have launched Sora if it had accepted full copyright restrictions on training data and generation up front. Reaching any real agreement on these issues could have taken years, and current copyright law simply can't keep up with the pace of AI. Whether models like Sora, and the ways they're monetized, get a green light will be decided by the courts and the market.

Sora is also facing criticism for the quality of its output. Critics argue that most of what it generates is "AI slop": generic, shallow videos aimed at driving clicks, with little real creativity or value. Even Sam Altman has acknowledged the issue, saying OpenAI would shut Sora down if it fails to actually improve users' lives, though he hasn't explained how that would be determined.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
