AlphaZero learns human concepts

DeepMind's AlphaZero is considered an AI milestone. A new paper examines exactly how the AI system learned chess and how closely that learning process resembles the way humans learn the game.

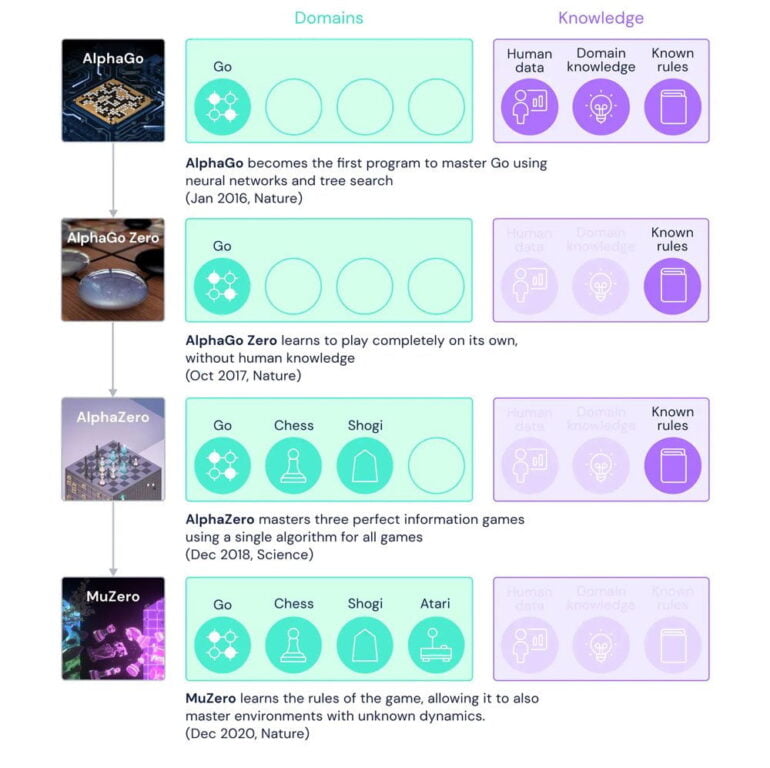

In 2017, DeepMind demonstrated AlphaZero, an AI system that can play world-class chess, shogi, and Go. To build it, the company combined several methods, including self-play, reinforcement learning, and search. World chess champion Magnus Carlsen called AlphaZero an inspiration for his transformation as a player.

In a new paper by DeepMind, Google, and former world chess champion Vladimir Kramnik, the authors analyze exactly how AlphaZero learns to play chess.

AlphaZero's representations resemble human concepts

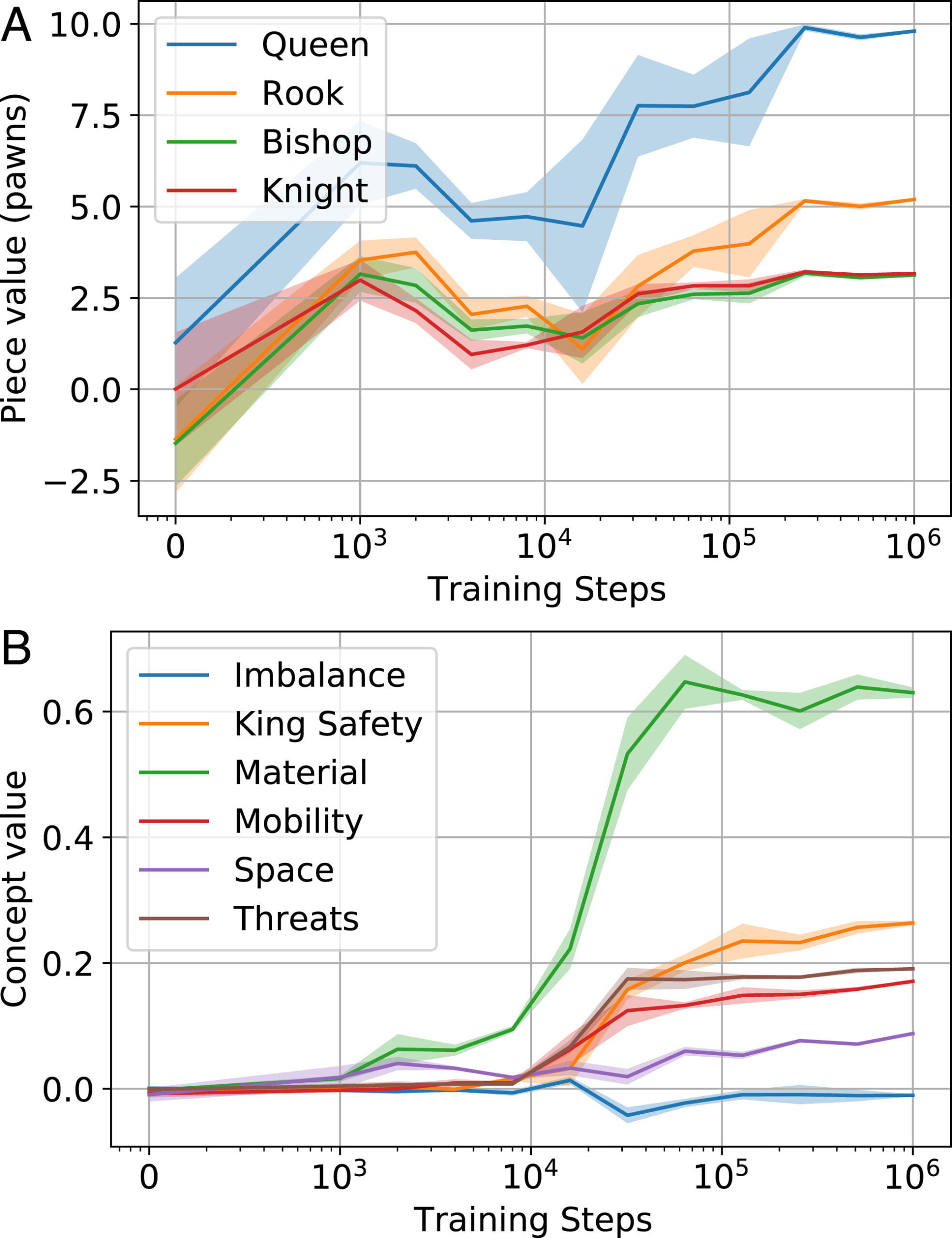

In their research, the team found "many strong correspondences between human concepts and AlphaZero’s representations that emerge during training, even though none of these concepts were initially present in the network."

So even though the AI system had no access to human games and received no human guidance, it appears to learn concepts similar to those of human chess players.

In a quantitative analysis, the team applies linear probes to assess whether the network represents concepts such as "King Safety", "Material Advantage", or "Positional Advantage", all of which are familiar to human chess players.
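To make the idea of a linear probe concrete, here is a minimal sketch in Python. It is not the paper's setup: the activations are synthetic random vectors rather than AlphaZero's, the two "concepts" are invented for illustration, and the function names `fit_linear_probe` and `probe_r2` as well as the ridge penalty are assumptions. The point is only the principle: if a simple linear model can predict a concept value from a layer's activations on held-out positions, the concept is likely encoded in that layer.

```python
import numpy as np

def fit_linear_probe(activations, concept_values, l2=1e-3):
    """Fit a ridge-regularised linear probe: concept ~ activations @ w + b."""
    # Append a constant column so the last weight acts as the bias term.
    X = np.hstack([activations, np.ones((activations.shape[0], 1))])
    reg = l2 * np.eye(X.shape[1])
    reg[-1, -1] = 0.0  # leave the bias unpenalised
    return np.linalg.solve(X.T @ X + reg, X.T @ concept_values)

def probe_r2(weights, activations, concept_values):
    """Coefficient of determination of the probe on the given positions."""
    X = np.hstack([activations, np.ones((activations.shape[0], 1))])
    residual = concept_values - X @ weights
    total = concept_values - concept_values.mean()
    return 1.0 - np.sum(residual ** 2) / np.sum(total ** 2)

# Synthetic stand-in data (assumption): one row of "activations" per position.
rng = np.random.default_rng(0)
n_positions, n_features = 2000, 64
activations = rng.normal(size=(n_positions, n_features))

# A concept that is linearly encoded in the activations, plus a little noise.
direction = rng.normal(size=n_features)
encoded_concept = activations @ direction + 0.1 * rng.normal(size=n_positions)
# A concept that has nothing to do with the activations.
unrelated_concept = rng.normal(size=n_positions)

# Fit on one part of the positions, evaluate on the held-out rest.
train, test = slice(0, 1500), slice(1500, None)
w_encoded = fit_linear_probe(activations[train], encoded_concept[train])
w_unrelated = fit_linear_probe(activations[train], unrelated_concept[train])

r2_encoded = probe_r2(w_encoded, activations[test], encoded_concept[test])
r2_unrelated = probe_r2(w_unrelated, activations[test], unrelated_concept[test])
print(f"encoded concept:   R^2 = {r2_encoded:.3f}")
print(f"unrelated concept: R^2 = {r2_unrelated:.3f}")
```

In this toy setting the probe scores highly on the encoded concept and close to zero on the unrelated one. Repeating such a measurement across layers and training checkpoints would show where and when a concept becomes linearly readable.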

In a qualitative analysis, the team draws on a behavioral analysis by Kramnik to examine how AlphaZero's opening play develops during training and compares it with human play.

Despite all the similarities, AlphaZero is a little different

The researchers used about 100,000 human games from the ChessBase archive for their study. For each position in the set, the team computed concept values and AlphaZero's activations and found commonalities in the learning process: "First, piece value is discovered; then an explosion of basic opening knowledge follows in a short time window. Finally, the opening theory of the net is refined in hundreds of thousands of training steps."

This rapid development of specific elements of AlphaZero's behavior mirrors observations of emergent abilities or phase transitions in large language models, the paper says.

Further research could uncover more concepts, possibly including previously unknown ones. The research also shows that human concepts can be found even in an AI system trained purely through self-play. This, the authors write, "broadens the range of systems in which we should expect to find existing or new human-understandable concepts."
