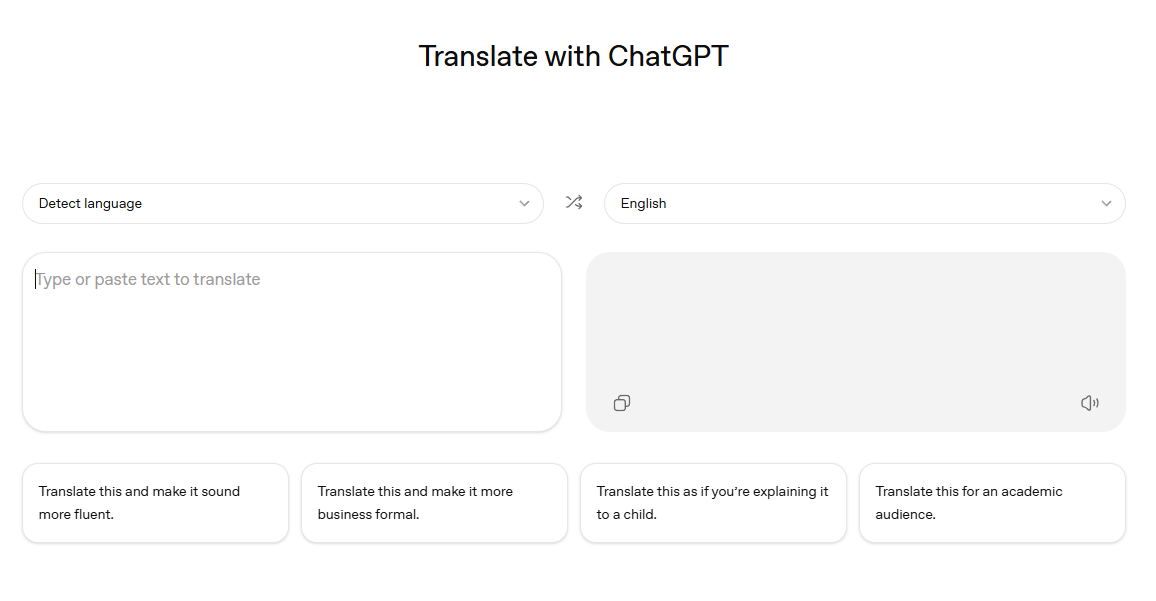

Wikipedia has landed major AI companies as partners: Amazon, Meta, Microsoft, Mistral AI, and Perplexity have joined the Wikimedia Enterprise partner program, with Google, Ecosia, and others already on board. These companies use the Wikimedia Enterprise APIs to integrate Wikipedia content into their products.

Wikipedia is considered one of the highest-quality datasets for training large language models, and its content powers chatbots, search engines, and voice assistants. The Wikimedia Foundation argues that human-curated knowledge is more valuable than ever in the AI era, but without financial contributions from companies profiting from this data, the open knowledge model could be at risk.

In late October, Wikipedia raised concerns that its traffic was declining because AI systems display its content without sending users to the website. It later called for fair licensing through its API.

This tension is likely to grow. Chatbots are extracting value from the web at scale, and the legal landscape remains murky. Not every site can follow the path of Wikipedia or Tailwind and offset lost revenue through partnerships and paid APIs.