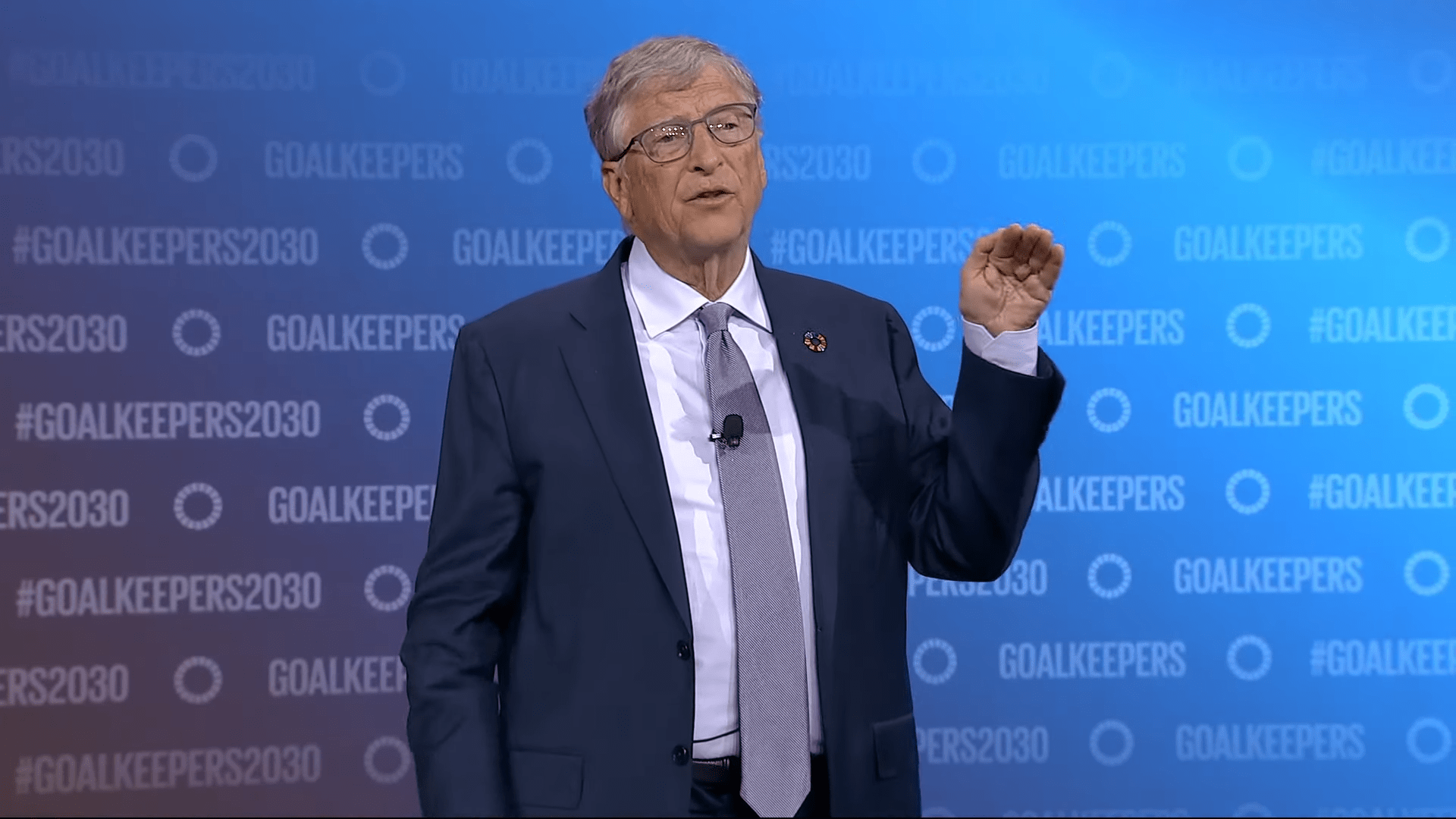

Bill Gates downplays AI's potential for spreading disinformation

In a recent interview with The Verge, Microsoft co-founder Bill Gates commented on AI's potential to spread false information in a way that misses the core of the problem.

Gates said: "People can type misinformation into a word processor. They don’t need AI, you know, to type out crazy things. And so I’m not sure that, other than creating deepfakes, AI really changes the balance there."

While Gates is technically correct, he fails to address the main concern. The primary risk of AI-generated text isn't just that false information can be created; it's that it can be produced at massive scale.

Two studies on AI's persuasive abilities have shown that AI models can be more convincing than humans. In one study, participants who debated GPT-4 with access to their personal information had 81.7 percent higher odds of increased agreement with their opponent than participants who debated humans.

OpenAI CEO Sam Altman has warned about the "superhuman persuasion" of AI models "which may lead to some very strange outcomes." Security researchers, for example, worry about far more convincing phishing emails.

AI makes disinformation more effective and scalable

With AI, disinformation can be produced much faster and on a much larger scale, at a level comparable to skilled humans today and possibly beyond human level in the future. This is certainly not the same as humans simply typing "misinformation into a word processor."

Gates' argument misses the point in the same way as attempts to downplay the risks of deepfakes by noting that images and videos have always been manipulable, with Photoshop as the usual example.

Again, the issue is not the technical ability to create fake content but how simple, accessible, and scalable it has become. There's a big difference between having to buy and learn professional software to create a politically motivated fake image and being able to do it with a single sentence on X.

Given that figures like Donald Trump and Elon Musk are fulfilling the deepfake scenarios that some researchers predicted years ago, it would be helpful if influential people like Gates made more nuanced statements about the dangers of AI-generated disinformation.

There may be arguments that the disinformation potential of AI is overstated. But Gates' argument isn't one of them. It's an inaccurate comparison that distracts from and trivializes the real problem.

- Get the latest AI news from The Decoder.