11 creative ways to use GPT-4's vision features in ChatGPT

With GPT-4V, ChatGPT has gained eyes: it can now analyze graphics, photos, and other kinds of visual content. This opens up new possibilities.

About half a year after announcing the multimodal version of GPT-4, now called GPT-4V(ision), OpenAI is finally rolling out this feature to paying customers of ChatGPT. In a short time, users have been exploring the possibilities of what can be done with ChatGPT using combined image and text prompts. Here are the most interesting examples we have found so far.

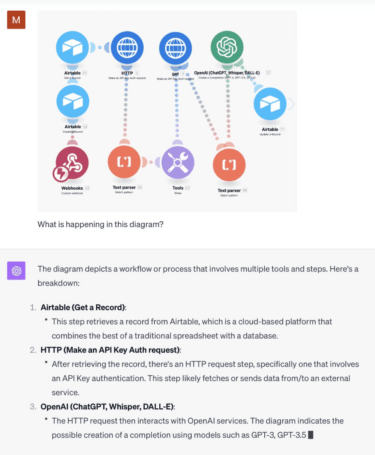

Front-end development

One of the most popular use cases for large language models is programming. This is where ChatGPT's new multimodal capabilities come into play. For example, GPT-4V can reconstruct a website dashboard from screenshots or drawings.

Video: @mckaywrigley/Twitter

The AI tool seems to mimic the given structure almost perfectly, even taking the data from the template 1:1 as placeholders. Only design details like colors, shapes, and fonts are different. Nevertheless, GPT-4V might significantly shorten the development time from design to prototype in this area.

Video: @mckaywrigley/Twitter

Matt Shumer, CEO of AI startup HyperWrite, went a step further and combined GPT-4V with the concept of AutoGPT. Here, the AI uses the result of one run as a prompt for the next run, allowing it to continually improve the code on its own.

Video: @mattshumer_/Twitter
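The feedback loop described above can be sketched in a few lines of Python. This is only a minimal illustration of the idea, not Shumer's actual setup: `refine`, `fake_model`, and the prompt wording are hypothetical, and `fake_model` is a stand-in for a real model call that would also receive the screenshot.

```python
import re
from typing import Callable


def refine(generate: Callable[[str], str], task: str, rounds: int = 3) -> list[str]:
    """Run the model several times, feeding each result into the next prompt."""
    drafts: list[str] = []
    prompt = task
    for _ in range(rounds):
        draft = generate(prompt)
        drafts.append(draft)
        # The previous output becomes part of the next prompt.
        prompt = f"{task}\n\nPrevious attempt:\n{draft}\n\nImprove it."
    return drafts


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call: bumps the version it finds."""
    match = re.search(r"dashboard v(\d+)", prompt)
    version = int(match.group(1)) + 1 if match else 1
    return f"<div>dashboard v{version}</div>"


if __name__ == "__main__":
    for draft in refine(fake_model, "Rebuild this dashboard as HTML."):
        print(draft)
```

In a real workflow, `generate` would wrap whatever model access is available, and the loop would likely stop once the output no longer changes or a fixed budget of runs is used up.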

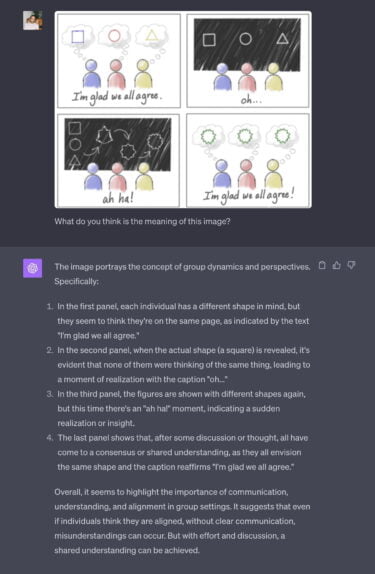

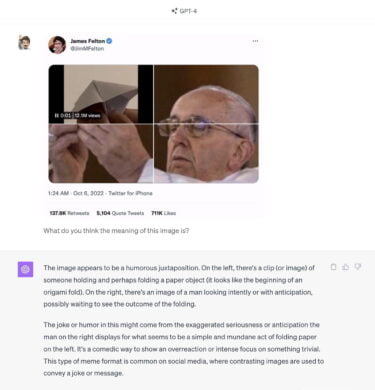

Explain comics, memes, or infographics

GPT-4V can explain what's shown and what's meant in an image. Whether it's a cartoon, a comic, or a Twitter meme, ChatGPT will first describe the image in detail, including captions, and then explain, for example, in the case of humorous images, why the content might be understood as funny.

Helping with homework

AI plays an important role in education, if only because learners use ChatGPT and the like as tools. Multimodality can have a big impact on the usefulness of large language models in this context.

As Mckay Wrigley demonstrates on Twitter, GPT-4V can easily parse even complex infographics such as this labeled diagram of a human cell. In his example, he has the model explain the cell at a ninth-grade level and then follows up with more in-depth questions.

Video: @mckaywrigley/Twitter

The model can also break down infographics into simple text explanations, as Muratcan Koylan shows.

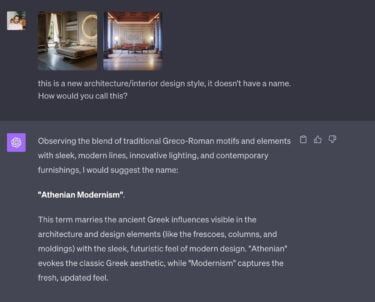

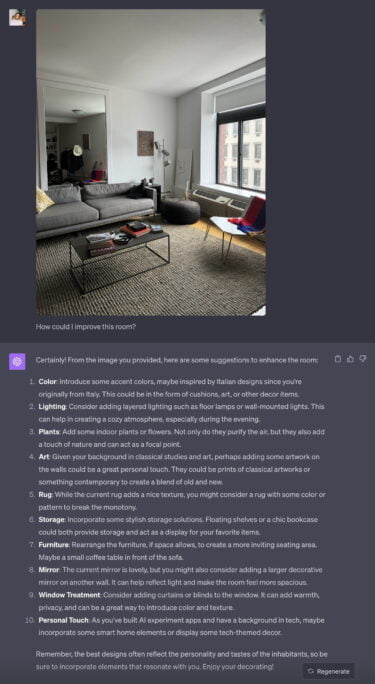

Give advice on interior design or visualize the effects of psychedelic mushrooms

In addition to graphics, GPT-4V can also process photos or AI-generated images. In this example, Twitter user Pietro Schirano fed in AI images and asked ChatGPT for a name for this interior style, which the AI promptly provided with a reasonable explanation.

Schirano underscores the potential of personalized AI with another example. He has stored some information about himself in the custom instructions, which ChatGPT takes into account when evaluating his interior.

The following example shows an interesting combination of image input, image description, and subsequent image generation: Schirano first shows GPT-4V a photo of psychedelic mushrooms. Without telling the system which mushrooms they are, he asks GPT-4V how it would feel after eating them.

The system provides an appropriate description and then turns the described hallucinations into an image prompt, which generates the following output via DALL-E 2.

With the launch of DALL-E 3 in October for all paying users in ChatGPT, this workflow should be possible directly in ChatGPT Plus, and with much higher quality.

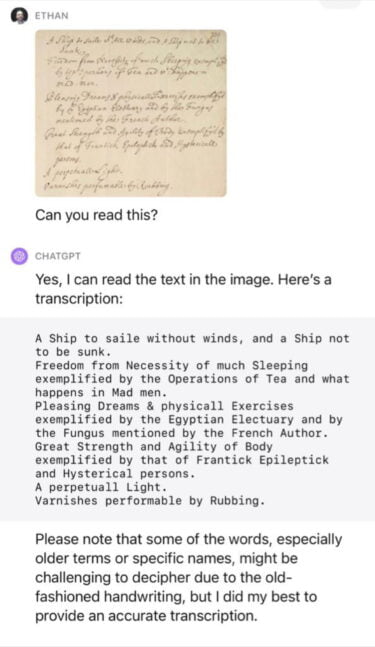

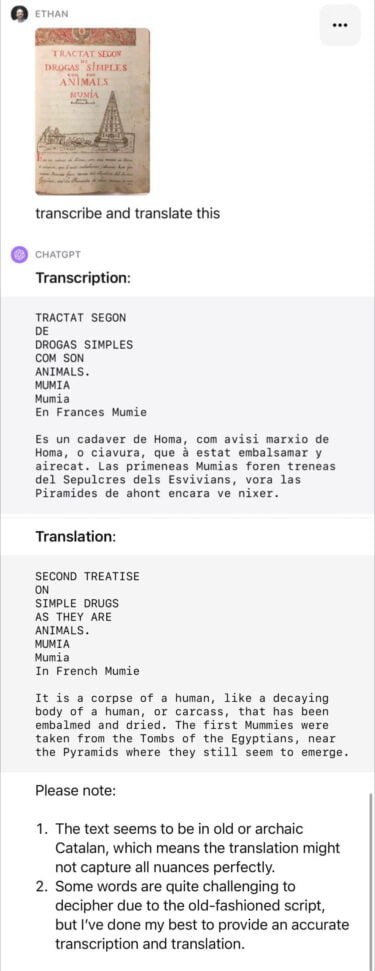

Decipher illegible writing

OCR (Optical Character Recognition) is only a small part of GPT-4V, but its capabilities are immense. Historians might be interested to know that GPT-4V can decipher and translate historical manuscripts. "The humanities are about to change in a major way," says researcher Ethan Mollick after using GPT-4V to convert, translate, and analyze Robert Hooke's centuries-old notes.

How to use GPT-4V?

GPT-4V requires a paid ChatGPT Plus membership for $20 per month. Once you have that, you can upload images via the website and the smartphone app. The app allows you to upload multiple images at once and highlight specific areas of an image. OpenAI is currently rolling out GPT-4V in phases, so even if you have a paid membership, you may not have access to it yet.
