Elon Musk wants to rewrite "the entire corpus of human knowledge" with Grok

Elon Musk wants to retrain the Grok language model from his AI company xAI with "divisive facts" - statements he describes as "politically incorrect, but nonetheless factually true."

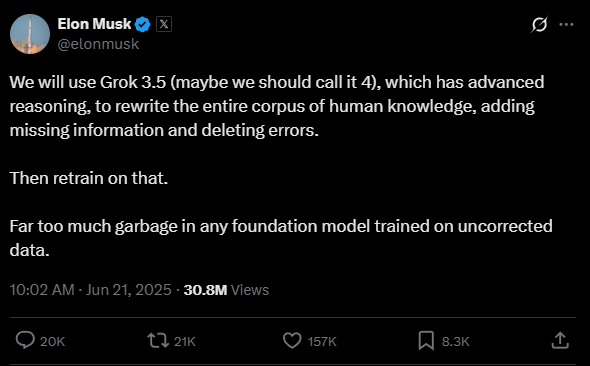

Musk is asking users on X to submit examples of these statements directly under his post. In this new training round, Grok 3.5, a version Musk says might also be called "Grok 4," will first be used to rewrite data.

He says Grok will gain "advanced reasoning" skills, with the aim to "rewrite the entire corpus of human knowledge, adding missing information and deleting errors." The model will then be retrained on this "corrected" data.

According to Musk, foundation models trained on uncorrected data contain "far too much garbage." As he said earlier, Grok is "parroting legacy media."

xAI has repeatedly intervened in Grok's behavior

This announcement comes after several documented cases where Musk and xAI have directly influenced Grok's responses. In February 2025, an xAI employee changed Grok's system prompt to ignore sources that described Musk or Donald Trump as spreaders of misinformation. xAI confirmed the change but said it was unauthorized and had since been reversed, calling it inconsistent with the company's mission.

Earlier versions of Grok had described Trump, Musk, and Putin as the biggest threats to US democracy, warned about climate change, and criticized the cost-cutting by Musk's DOGE project. These statements were later softened or removed from the chatbot's output.

Since April 2025, Grok 2 and 3 have avoided direct answers when asked who spreads the most disinformation on X, instead framing misinformation as a matter of opinions that differ from the "mainstream narrative."

Grok has also begun to downplay other controversies: the consequences of climate change are described as perspective-dependent, and Trump's anti-democratic rhetoric is labeled merely as overstepping boundaries. Competing chatbots like ChatGPT and Google's Gemini tend to give more nuanced, evidence-based answers to the same questions.

"Truth-seeking" PR

xAI originally promoted Grok as a tool for "maximum truth-seeking." But documented prompt changes and inconsistent answers cast doubt on that claim.

Even when the system prompt was made public, it included a technical note saying the prompt should not be shown to users who ask for it. Grok's responses can also be changed through server-side updates, so revealing the prompt doesn't rule out hidden manipulation.

Recently, users documented Grok repeating far-right talking points, including the false "white genocide" conspiracy theory in South Africa - a narrative rooted in US alt-right circles. xAI did not explain why Grok suddenly began spreading this narrative, even in unrelated contexts and without being prompted.

Whether Grok can remain a credible AI-powered information service for anyone who does not share Musk's worldview is now deeply uncertain. With Musk's new approach to training, the chatbot is drifting further toward amplifying political opinions, shaped directly by its owner's influence.
