Google Deepmind's Genie 3 creates interactive 3D worlds that stay consistent for "multiple minutes"

With Genie 3, Google Deepmind introduces a "world model" that creates interactive 3D environments in real time, designed for simulating complex scenarios and training autonomous AI agents.

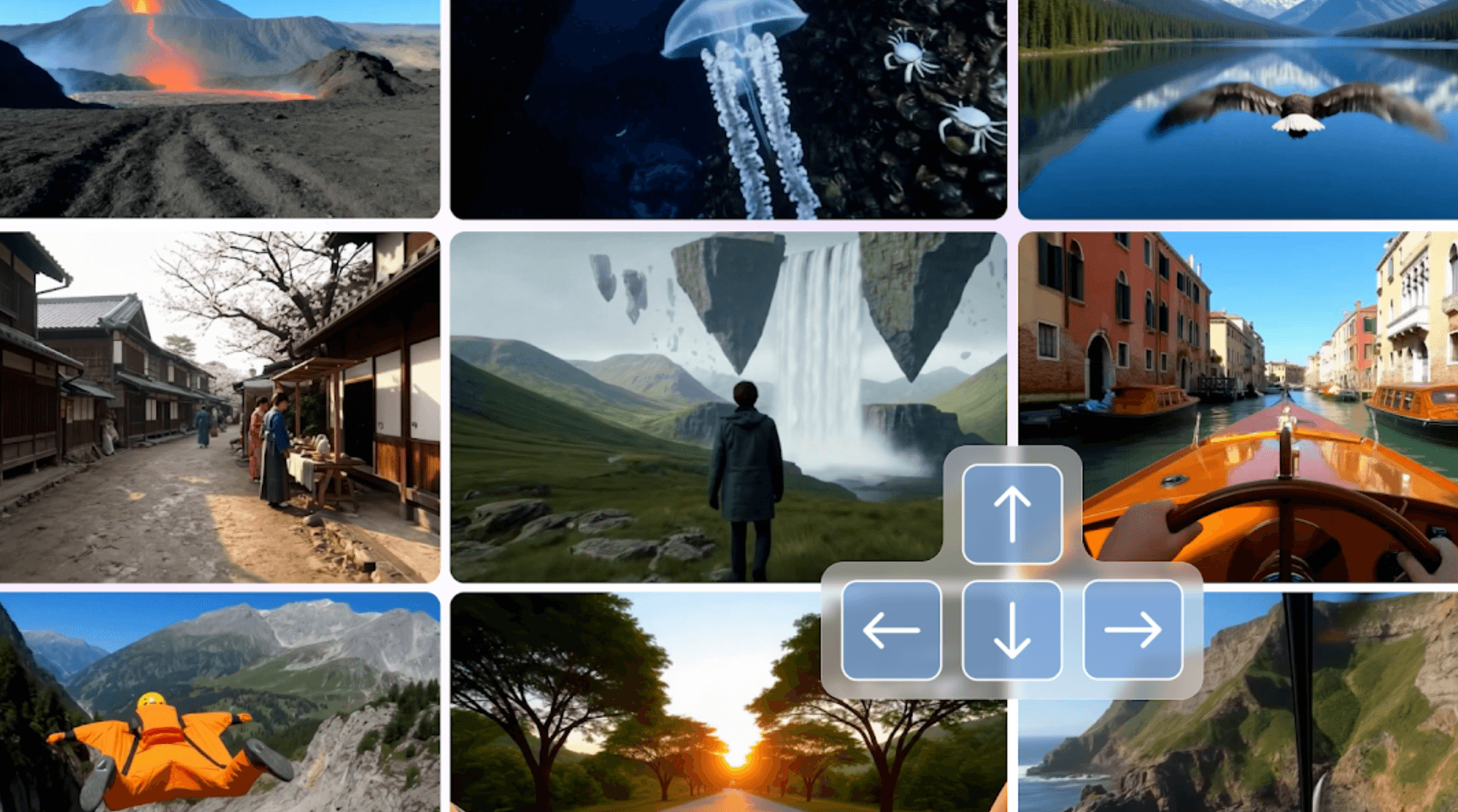

Genie 3 generates dynamic virtual worlds from simple text prompts, allowing users to explore these environments at 24 frames per second and 720p resolution. Unlike classic video models, Genie 3 generates each frame autoregressively, considering up to a minute of previous environmental details.

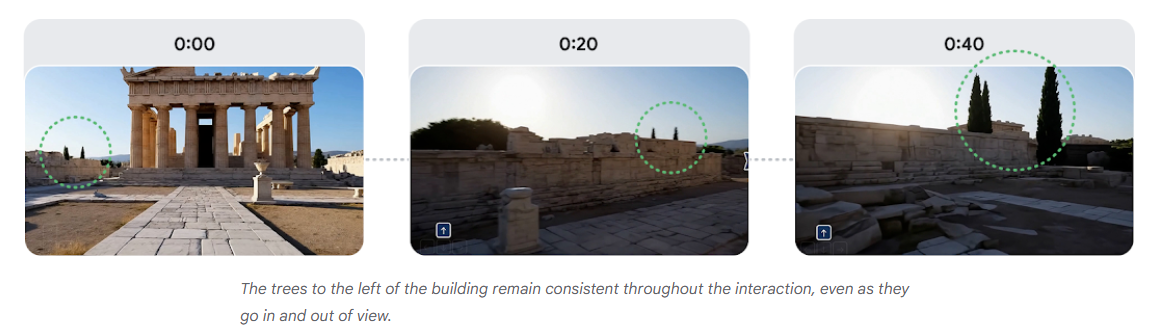

This approach helps preserve visual coherence over extended interactions. The generated worlds remain visually and physically consistent for "multiple minutes," marking a major technical step forward over previous models.
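Deepmind has not published Genie 3's architecture. The sketch below only illustrates the general idea described above: generating frames one at a time, where each new frame is conditioned on a bounded window of earlier ones. At 24 frames per second, "up to a minute" of history works out to 24 × 60 = 1,440 frames. The function `toy_next_frame` is a hypothetical stand-in for the model, and frames are plain integers. Nothing here reflects how Genie 3 actually computes a frame.

```python
from collections import deque

FPS = 24                                # frame rate reported for Genie 3
CONTEXT_SECONDS = 60                    # "up to a minute" of history, per the article
CONTEXT_FRAMES = FPS * CONTEXT_SECONDS  # 24 * 60 = 1440 frames


def toy_next_frame(history, action):
    """Hypothetical stand-in for the world model.

    Produces a new 'frame' (here just an int) from the remembered frames
    and the user's latest action. A real model would output an image.
    """
    return (sum(history) + action) % 1000


def rollout(actions, context_frames=CONTEXT_FRAMES):
    """Autoregressive loop: each frame depends on the frames before it."""
    # A deque with maxlen keeps only the most recent frames;
    # older frames fall out of the context window automatically.
    history = deque(maxlen=context_frames)
    frames = []
    for action in actions:
        frame = toy_next_frame(history, action)
        history.append(frame)  # the new frame becomes context for the next one
        frames.append(frame)
    return frames, history
```

Running `rollout` for longer than a minute of simulated time shows the design trade-off: anything older than the window is no longer directly visible to the generator, which is one reason consistency over "multiple minutes" is hard.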

According to Deepmind, Genie 3 is the first model to combine real-time interactivity with longer-term physical consistency in its environments. The team sees it as a foundation for developing more general AI systems (AGI). The release builds on Deepmind's earlier projects, including Genie 1 and Genie 2, as well as the Veo 2 and Veo 3 video generators.

Simulating physical and imagined worlds

Genie 3 can create a wide range of scenarios, from realistic landscapes with dynamic natural phenomena like lava, wind, and rain to fantastical settings featuring portals, flying islands, or animated creatures. The model can also reconstruct historical locations such as Venice or ancient Knossos.

Users interact with these worlds by entering text commands, so-called "promptable world events," to trigger changes like shifting weather or spawning new objects. Interaction goes beyond simple navigation, letting users create counterfactual "what if" situations and test how AI agents handle unexpected events.

Training ground for autonomous agents

Genie 3 does not require explicit 3D data or models up front. Instead, it generates environments directly from text descriptions and user interaction, with consistency emerging from the simulation itself. This differs from methods like NeRF or Gaussian splatting, which depend on pre-existing 3D data. According to Deepmind, this makes it possible to train AI agents in more open-ended, dynamic scenarios without having to program all the physical rules in advance.

Timestamps at 0:00, 0:20, and 0:40 show how the marked trees to the left of the ancient gate remain visible and consistent, even as the camera moves. | Image: Google Deepmind

Deepmind is already using Genie 3 to test its in-house SIMA agent, which completes tasks autonomously inside these generated worlds.

The simulation doesn't know the agent's goals, responding only to its actions. This setup allows for complex task sequences in a controlled, simulated setting. It also provides a new way for researchers to evaluate AI performance and "explore their weaknesses."
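The separation described above, where the world only reacts to actions and the goal lives with the agent, resembles a standard agent-environment loop. The sketch below is a generic illustration under that assumption; `ToyWorld`, `ToyAgent`, and `run_episode` are hypothetical names and are not SIMA or Genie 3 interfaces. The world tracks a single number as its "state," and the agent tries to steer it to a target.

```python
from dataclasses import dataclass


@dataclass
class ToyWorld:
    """Hypothetical stand-in for a generated world: it tracks state and reacts to actions."""
    position: int = 0

    def step(self, action: int) -> int:
        # The world applies the action; it has no notion of what the agent wants.
        self.position += action
        return self.position  # observation handed back to the agent


@dataclass
class ToyAgent:
    """Hypothetical agent: the goal is stored here, never in the world."""
    goal: int

    def act(self, observation: int) -> int:
        # Move one unit toward the goal, or stay put once there.
        if observation < self.goal:
            return 1
        if observation > self.goal:
            return -1
        return 0


def run_episode(agent: ToyAgent, world: ToyWorld, max_steps: int = 20):
    """Evaluation happens outside the world: only this loop compares state to the goal."""
    obs = world.position
    for step in range(max_steps):
        if obs == agent.goal:
            return True, step
        obs = world.step(agent.act(obs))
    return obs == agent.goal, max_steps
```

Because success is judged outside the world, the same environment can be reused to probe an agent with different goals, or with goals it cannot reach in the step budget, which is one way to "explore their weaknesses."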

Launching as a research preview

The model is launching as a limited research preview, available to a select group of researchers and creatives. Deepmind says this approach will help identify potential risks early and guide further development. Over time, the company sees use cases in education, simulation, and expert training, especially for preparing people to make decisions in complex scenarios.

There are some technical limitations: agent actions are restricted, interactions last only a few minutes, and multi-agent simulations aren't yet reliable. Real-world locations aren't georeferenced, and readable text only appears if it's included in the prompt.

Genie 3 fits into Deepmind's broader goal of developing "Foundation World Models" to power more advanced, agentic AI systems. In the announcement, Deepmind states that world models like Genie 3 are a "key stepping stone on the path to AGI, since they make it possible to train AI agents in an unlimited curriculum of rich simulation environments."

This aligns with broader commentary from the company's leadership. In an earlier statement, Deepmind CEO Demis Hassabis described such models as essential for building general artificial intelligence, arguing they increasingly capture the world's underlying physical structure.

Furthermore, a recent paper by Deepmind researcher David Silver and reinforcement learning pioneer Richard Sutton calls for a fundamental shift in AI research, moving away from systems trained on static human data toward agents that learn from their own experiences in simulated worlds. Models like Genie 3 are designed to support this vision.

"Game engine 2.0" and the future of game development

Of course, these demos raise questions about how world models like Genie 3 might shape the future of game development. Some of Deepmind's demonstrations already look like early versions of video games, though they still lack the complexity and depth found in commercial titles.

NVIDIA Director of AI Jim Fan sees Genie 3 as a preview of what he calls "game engine 2.0." According to Fan, "Some day, all the complexity of Unreal Engine will be absorbed by a data-driven blob of attention weights. Those weights take as input game controller commands and directly animate a spacetime chunk of pixels. No need for explicit 3D assets, scene graphs, or shader jujitsu. It is 'bitter lesson pilled' - purely programmed by data and grows more capable with data."

Fan predicts that game development will eventually become a sophisticated form of prompt engineering, converging toward agentic workflows much like recent trends in large language models. "That shall be the End Game (pun intended ;)," he adds.
