A team of researchers at H2O.ai has unveiled an open-source ecosystem for developing and testing large language models (LLMs).

In their paper, the researchers highlight the risks associated with large language models, such as bias, generation of harmful or copyrighted text, privacy and security concerns, and lack of transparency in training data. The project seeks to address these issues by developing open alternatives to closed approaches.

The researchers argue that open-source LLMs offer greater flexibility, control, and cost-effectiveness while addressing privacy and security concerns. Open models allow users to securely train LLMs on private data, customize them for specific needs and applications, and deploy them on their own infrastructure. They also provide greater insight and transparency, which is essential for understanding the behavior of LLMs.

H2O's LLM Framework for Open Source Models

Training, optimizing, and deploying such models remain challenging tasks, however. The h2oGPT and H2O LLM Studio open-source libraries were developed to facilitate these tasks. The framework supports all open-source language models, including GPT-NeoX, Falcon, Llama 2, Vicuna, Mistral, WizardLM, h2oGPT, and MPT.

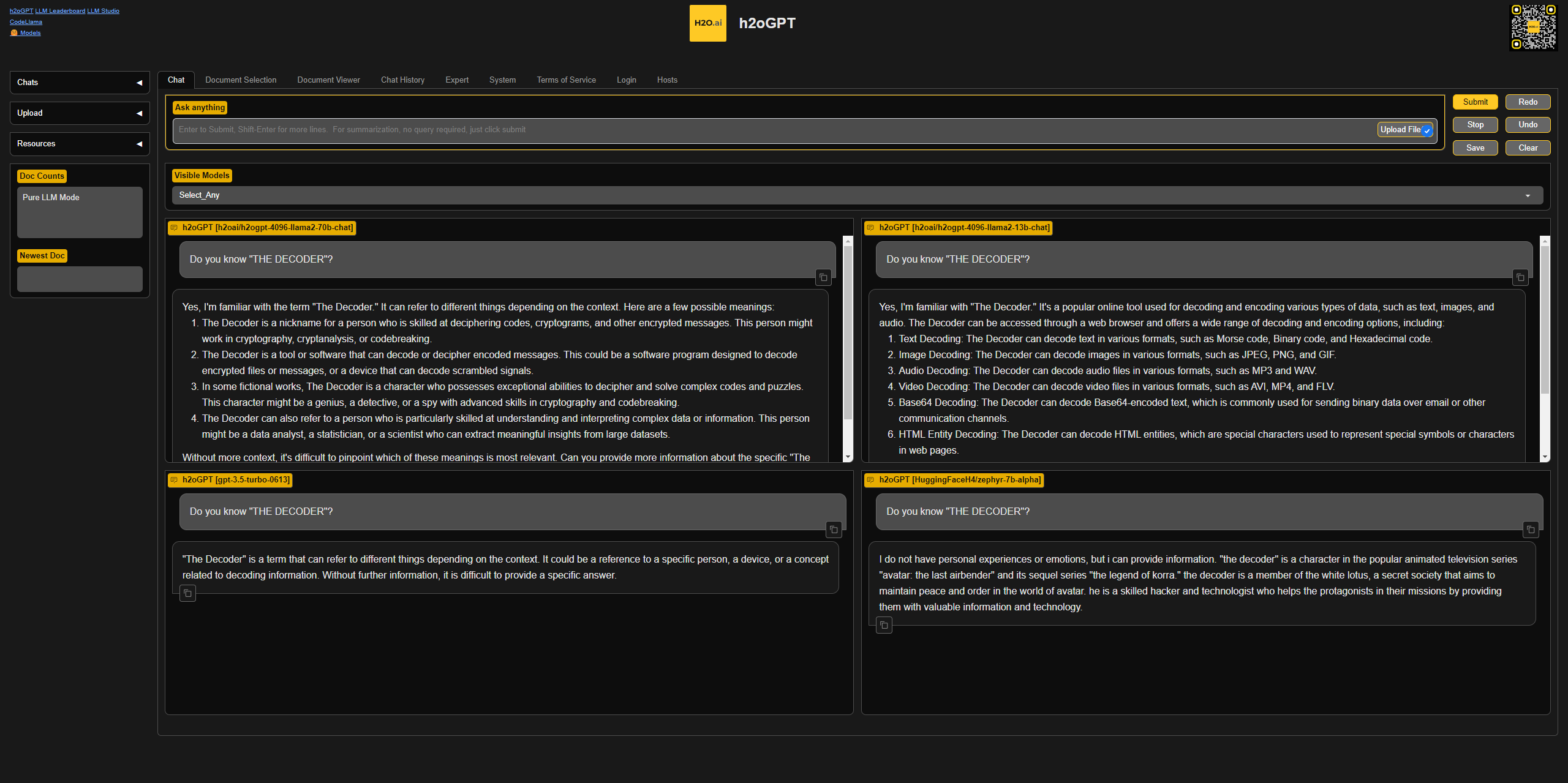

h2oGPT supports open-source research on LLMs and their integration while maintaining privacy and transparency. The main use case for this library is to efficiently deploy and test various LLMs on private databases and documents.

H2O LLM Studio complements h2oGPT by letting users fine-tune any LLM with techniques such as LoRA adapters, reinforcement learning, and 4-bit training. The library provides a graphical user interface designed specifically for working with open-source language models.
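To make the idea behind LoRA adapters more concrete, here is a minimal sketch in plain Python. It is not H2O LLM Studio's code; the function names (`matvec`, `lora_forward`, `lora_param_counts`) and the toy matrices are assumptions chosen for illustration. The sketch shows the general technique: the original weight matrix `W` stays frozen, and only two small matrices `A` and `B` of rank `r` are trained, with their product added to the frozen output.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x).

    W is the frozen weight matrix (d_out x d_in).
    A (rank x d_in) and B (d_out x rank) are the trainable adapter matrices.
    """
    base = matvec(W, x)             # frozen path
    low = matvec(B, matvec(A, x))   # low-rank adapter path
    scale = alpha / rank
    return [b + scale * l for b, l in zip(base, low)]


def lora_param_counts(d_out, d_in, rank):
    """Return (parameters in full W, parameters in the adapter A and B)."""
    return d_out * d_in, rank * (d_in + d_out)


# Toy example (illustrative values only).
W = [[1, 0, 2], [0, 1, 0]]   # frozen 2 x 3 weight matrix
A = [[1, 1, 1]]              # rank-1 adapter, 1 x 3
B_zero = [[0], [0]]          # B initialized to zero: the adapter is a no-op
B_trained = [[1], [2]]       # hypothetical values after some training
x = [1, 2, 3]

y_start = lora_forward(x, W, A, B_zero, alpha=2, rank=1)
y_tuned = lora_forward(x, W, A, B_trained, alpha=2, rank=1)
full_params, adapter_params = lora_param_counts(4096, 4096, 8)
```

With `B` set to zero, the adapted layer reproduces the frozen layer exactly, which is why fine-tuning can begin from the unchanged base model. The parameter count illustrates the saving: for a 4096 x 4096 layer and rank 8, the adapter trains 65,536 values instead of roughly 16.8 million.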

H2O LLM Studio allows customization of the experimental setup, including datasets, model selection, optimizer, learning rate scheduling, tokenizer, sequence length, low-rank adapters, validation set, and metrics. Users can track multiple experiments simultaneously, export logs and results, and easily share models with the community or make them available locally and privately.
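The kinds of settings listed above can be pictured as a single experiment configuration. The sketch below is purely illustrative: the class `ExperimentConfig`, its field names, and all default values are hypothetical and do not reflect H2O LLM Studio's actual configuration schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ExperimentConfig:
    """Hypothetical container for fine-tuning settings (not the real schema)."""
    dataset_path: str
    base_model: str
    optimizer: str = "AdamW"
    learning_rate: float = 1e-4
    lr_schedule: str = "cosine"
    max_seq_length: int = 512
    lora_rank: int = 4
    validation_fraction: float = 0.1
    metrics: list = field(default_factory=lambda: ["perplexity"])

    def validate(self):
        """Reject obviously inconsistent settings before a run starts."""
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        if not 0.0 < self.validation_fraction < 1.0:
            raise ValueError("validation_fraction must be between 0 and 1")
        if self.lora_rank < 1 or self.max_seq_length < 1:
            raise ValueError("lora_rank and max_seq_length must be at least 1")

    def to_json(self):
        """Serialize the settings so a run can be logged or shared."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Placeholder paths and model names, for illustration only.
config = ExperimentConfig(dataset_path="data/train.csv", base_model="example-base-model")
config.validate()
exported = json.loads(config.to_json())
```

Keeping every setting in one serializable object is one simple way to compare several experiments side by side or export them with their results.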

The H2O.ai team plans to update h2oGPT and H2O LLM Studio in line with the latest research and user requirements. New techniques for model quantization, distillation, and long-context training will be integrated, and more multilingual and multimodal models will be supported.