Humans can barely distinguish AI-generated content from human-created content

AI-generated images, text, and audio files are now so convincing that humans can no longer reliably distinguish them from human-made content, according to a study by researchers at the CISPA Helmholtz Center for Information Security.

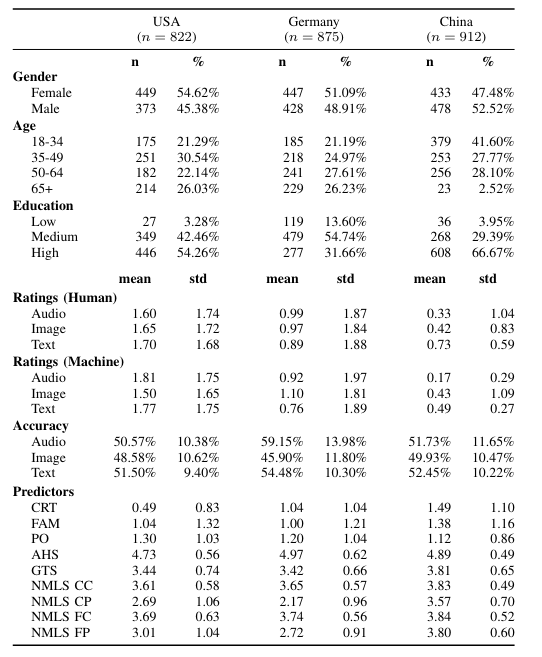

The online survey, conducted between June and September 2022 with 3,002 participants from Germany, China, and the U.S., covered three media types: audio, image, and text. The researchers generated photorealistic portraits, fake news articles, and literary audio files. A total of 2,609 data sets were analyzed (822 from the U.S., 875 from Germany, 922 from China).

Across all media types and countries, most participants rated the AI-generated media as human-made. Even in 2022, the technology had already reached a point "where it is difficult - though not yet impossible - for humans to tell if something is real or AI-generated," said Thorsten Holz, a professor at CISPA.

Holz sees risks in this trend. "People can misuse artificially generated content in many ways. [...] I see this as a major threat to our democracy," he said. Lea Schönherr, a CISPA faculty member, said developing ways to defend against such attack scenarios is an important task.

The study looked at several factors that may influence the ability to recognize AI-generated media. Overall confidence, cognitive reflection, and self-reported familiarity with deepfakes had a strong influence on participants' decisions across all media categories.

But other factors, such as age, education, political views, or media literacy, did not show consistent effects. Once the quality of the AI-generated media was high enough, demographic variables had much less influence.

The CISPA study highlights the need to better align technological progress with the societal impact of AI systems. Regulations and safeguards are lagging behind the rapid pace of AI development.

"Our results clearly show that machine-generative media are indistinguishable from real media. Since perfect technical detection seems unattainable, we argue that future research should not focus on how to avoid generative AI but rather, how to live with it," the study concludes.

Since the 2022 study, AI systems like ChatGPT and Midjourney have become even better at generating realistic content, and they are easy for many people to use. If the researchers repeated the study today, the results would probably be even clearer. They plan to conduct further studies to track these developments.
