Meta's Make-A-Video3D generates dynamic 3D scenes from text descriptions that also run in real-time in 3D engines.

After text and images, generative AI models will soon synthesize videos and 3D objects. Models like Make-A-Video, Imagen Video, Phenaki for video and 3DiM, Dreamfusion or MCC for 3D show possible methods and already generate some impressive results.

Meta now shows a method that combines video and 3D: Make-A-Video3D (MAV3D) is a generative AI model that generates three-dimensional dynamic scenes from text descriptions.

Meta's Make-A-Video3D relies on NeRFs.

In September 2022, Google showed Dreamfusion, an AI model that learns 3D representations from text descriptions in the form of Neural Radiance Fields (NeRFs). For this, Google combined NeRFs with the large image model Imagen: It generates images matching text, which serve as learning signals for the NeRF.

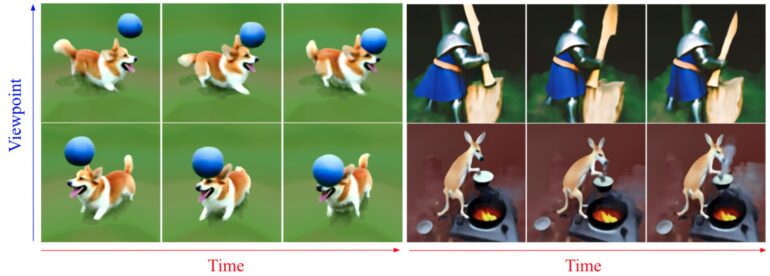

Meta takes a similar approach with MAV3D: A NeRF variant (HexPlane) suitable for dynamic scenes generates a sequence of images from a sequence of camera positions.

These are passed as video together with a text prompt to Meta's video model Make-A-Video (MAV), which scores the content provided by HexPlane based on the text prompt and other parameters.

The score is then used as a learning signal for the NeRF, which adjusts its parameters. In several passes, it learns a representation that corresponds to the text.

MAV3D content can be rendered in real-time

For example, MAV3D generates 3D representations of a singing cat, a baby panda eating ice cream, or a squirrel playing the saxophone. There is currently no qualitatively comparable model, but the results shown by Meta clearly match the text prompts.

Video: Meta

The learned HexPlane model can also be converted into animated meshes, the team says. The result could then be rendered in any standard 3D engine in real-time - and would thus be suitable for applications in virtual reality or in classic video games. However, the process is still inefficient, and the team is looking to improve it - as well as the resolution of the scenes.

More video examples and renderings are available on the MAV3D project page. The model and code are not available.