OpenAI positions ChatGPT as a search engine for work data with Company Knowledge

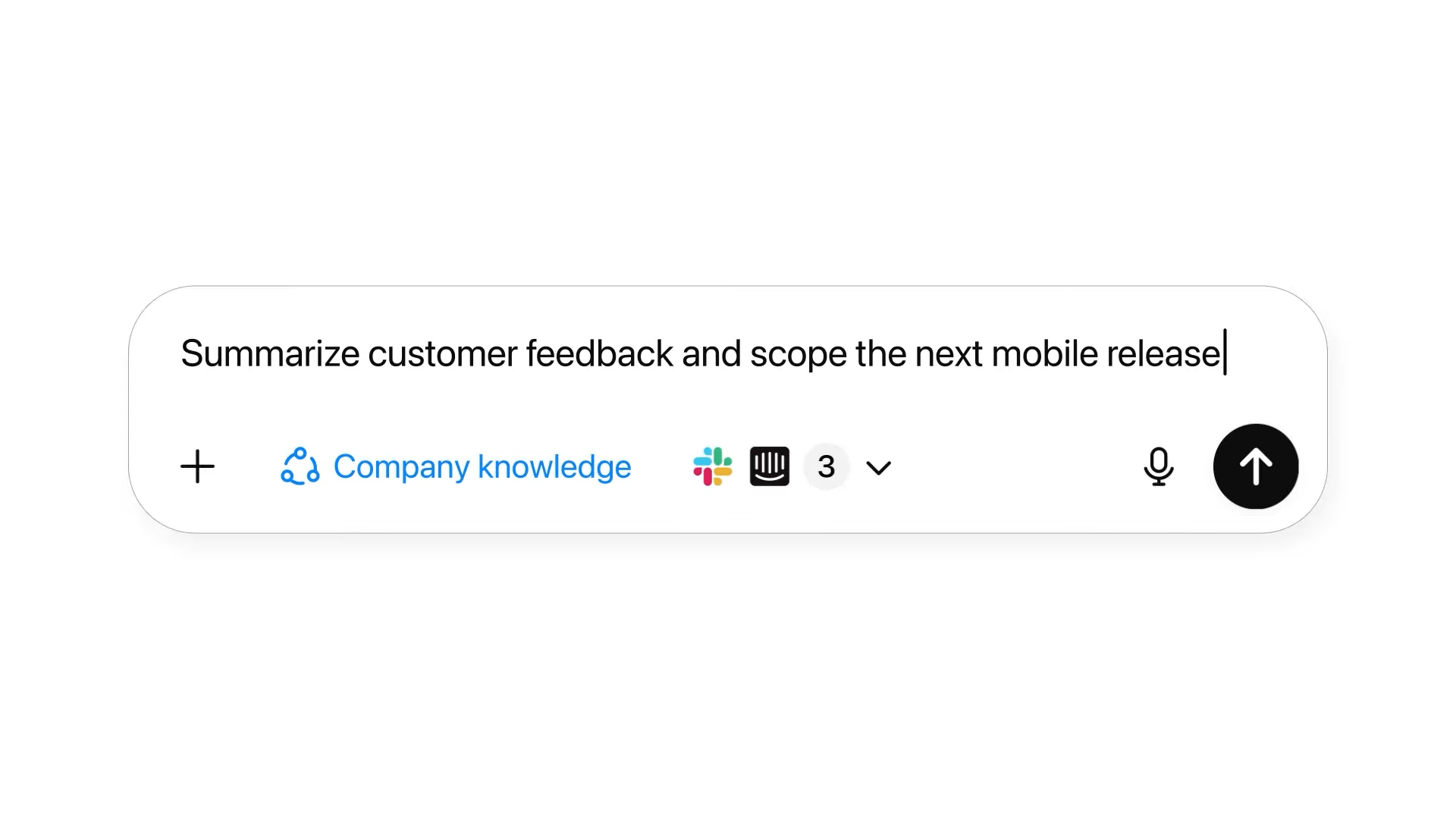

OpenAI has rolled out "Company Knowledge," a new feature for ChatGPT designed to help business, enterprise, and education users search work data from tools like Slack, SharePoint, Google Drive, and GitHub.

The feature is powered by GPT-5 and aims to position ChatGPT as a search engine for internal company information. According to OpenAI, answers include source references and can handle vaguely worded questions. The feature must be enabled manually and doesn't support web searches or generating images or diagrams.

LLMs are still a long way from being reliable citation engines

On paper, features like this could be a real productivity boost. But in practice, it's unclear whether ChatGPT or similar systems can handle such broad, open data sources reliably. Pulling citations from multiple sources at once is technically tough and often leads to unclear or incorrect answers. A recent study calls this problem "AI workslop" and says it is already costing companies millions and hurting morale.

So far, large language models work best with well-defined tasks in a fixed context, or for exploratory searches that help users surface relevant sources. Every LLM-based system struggles with citations at this scale, sometimes giving inaccurate details, leaving out important information, or misinterpreting context.

Research also shows that irrelevant information in long contexts can drag down model performance. That's why context engineering, the practice of carefully selecting and structuring the information fed into the model, is becoming increasingly important. If the context is too broad, too narrow, or not well organized, answer quality drops and costs can rise. Google DeepMind researcher Nikolay Savinov notes that picking the right context is essential, even though today's models can handle hundreds of thousands or even millions of tokens.
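
To make the idea of context engineering more concrete, here is a minimal Python sketch of one possible approach: filter retrieved snippets by relevance, keep only what fits a token budget, and label each with its source. This is an illustration under stated assumptions, not how Company Knowledge or any OpenAI product works. The `Snippet` type, the relevance scores, the word-count token estimate, and the threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str   # hypothetical label, e.g. "Slack" or "Google Drive"
    text: str
    score: float  # relevance score from some upstream retriever (assumed)


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def select_context(snippets, budget, min_score=0.5):
    """Keep the most relevant snippets that fit within the token budget."""
    chosen, used = [], 0
    for s in sorted(snippets, key=lambda s: s.score, reverse=True):
        if s.score < min_score:
            break  # everything after this point is even less relevant
        cost = estimate_tokens(s.text)
        if used + cost > budget:
            continue  # skip snippets that would overflow the budget
        chosen.append(s)
        used += cost
    return chosen


def build_prompt(question, snippets):
    # Label each snippet with an index and its source so an answer can cite it.
    blocks = [f"[{i}] ({s.source}) {s.text}" for i, s in enumerate(snippets, 1)]
    return "\n".join(blocks + ["", f"Question: {question}"])
```

A real system would swap in the model's own tokenizer and a proper retriever, but the trade-off is the same one described above: a budget that is too generous lets irrelevant material in, while one that is too tight drops information the answer needs.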

Companies rolling out these systems need to make sure employees understand their limitations. Human expertise and review remain critical, since it's easy to put too much trust in the machine. With current technology, relying entirely on the model will inevitably lead to mistakes. OpenAI doesn't mention these risks in its official announcement.
