Google for Education and the educational organization ISTE+ASCD are launching a joint initiative to provide free AI training to all six million teachers in the US. Google says it's the largest program of its kind. The courses cover how to use Google's AI products Gemini and NotebookLM, with the goal of helping teachers and their more than 74 million students use AI safely in the classroom. The modules are designed to be short and practical, with concrete examples teachers can apply directly to their lessons. The initiative is set to launch in the coming months. Those interested can sign up via a Google form.

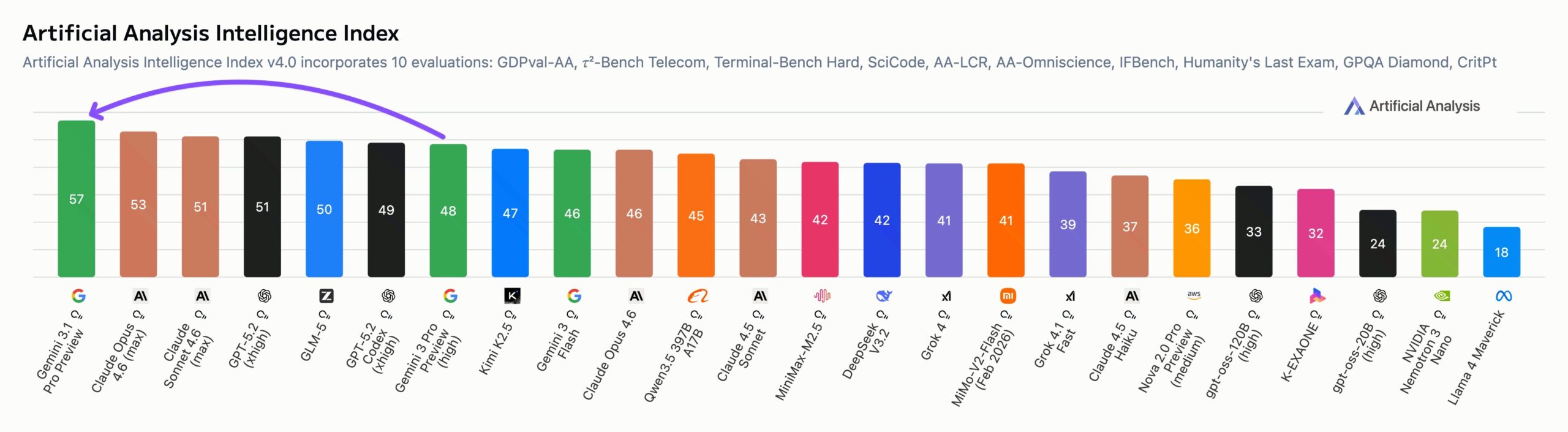

There's a clear strategic play behind the effort, of course. Embedding your products in the education system early gets young people comfortable with your ecosystem while they're still in school and keeps them there well into their professional lives. Competitors like OpenAI and Anthropic are running similar playbooks, though they tend to focus on university partnerships and enticing offers for students, such as free or discounted access to their AI models.