Researchers show how Stable Diffusion can read minds. The method reconstructs images from fMRI scans with amazing accuracy.

Researchers have been using AI models to decode information from the human brain for years. At their core, most methods involve using pre-recorded fMRI images as input to a generative AI model for text or images.

In early 2018, for example, a group of researchers from Japan demonstrated how a neural network reconstructed images from fMRI recordings. In 2019, another group reconstructed images from monkey neurons, and Meta's research group, led by Jean-Remi King, has published new work that derives text from fMRI data.

In October 2022, a team at the University of Texas at Austin showed that GPT models can use fMRI scans to infer text describing the semantic content of a video a person has watched.

In November 2022, researchers at the National University of Singapore, the Chinese University of Hong Kong, and Stanford University used MinD-Vis to show that diffusion models, which power current generative AI models such as Stable Diffusion, DALL-E, and Midjourney, can reconstruct images from fMRI scans with significantly higher accuracy than the approaches available at the time.

Stable Diffusion can reconstruct images from brain activity without fine-tuning

Researchers at the Graduate School of Frontier Biosciences, Osaka University, and CiNet, NICT, Japan, are now using a diffusion model - more specifically, Stable Diffusion - to reconstruct visual experiences from fMRI data.

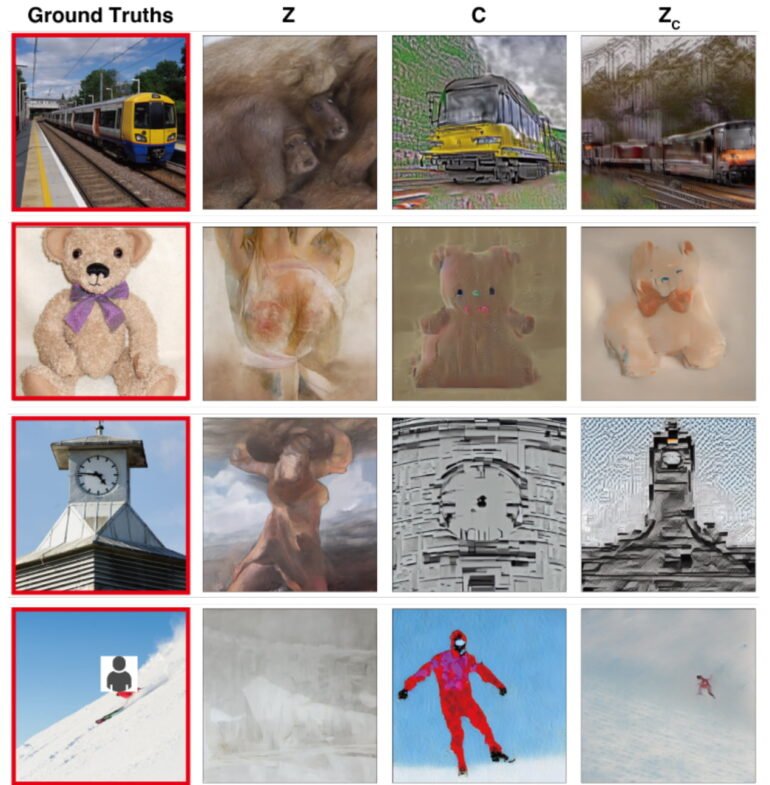

In doing so, the team eliminates the need to train and fine-tune complex AI models. Only simple linear models need to be trained; these map the fMRI signals of the lower and higher visual brain regions to individual Stable Diffusion components.

Specifically, the linear models predict, from brain activity, the representations that Stable Diffusion's image and text encoders would otherwise supply. Signals from the lower visual regions are mapped to the image representation, and signals from the higher visual regions are mapped to the text representation. This allows the system to use both image composition and semantic content for reconstruction, they say.
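To make the idea of "simple linear models" concrete, here is a minimal sketch on synthetic data. It fits one linear map from simulated lower-region voxels to a stand-in image representation and another from simulated higher-region voxels to a stand-in text representation. Everything in it is an assumption for illustration: the use of ridge regression, the toy array sizes, the random data, and all function and variable names. It is not the researchers' code, and it stops before the step where Stable Diffusion would turn the predicted representations into an image.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)


def mean_column_correlation(pred, target):
    """Average Pearson correlation between matching columns."""
    corrs = [np.corrcoef(pred[:, j], target[:, j])[0, 1]
             for j in range(pred.shape[1])]
    return float(np.mean(corrs))


rng = np.random.default_rng(0)

# Toy sizes (assumptions); real voxel counts and latent sizes are far larger.
n_train, n_test = 200, 50
n_voxels_lower, n_voxels_higher = 40, 30
dim_image_latent, dim_text_latent = 16, 8

# Hypothetical ground-truth linear relations used only to simulate data.
true_w_img = rng.normal(size=(n_voxels_lower, dim_image_latent))
true_w_txt = rng.normal(size=(n_voxels_higher, dim_text_latent))


def simulate(n_trials):
    """Simulate voxel responses and the representations they relate to."""
    lower = rng.normal(size=(n_trials, n_voxels_lower))
    higher = rng.normal(size=(n_trials, n_voxels_higher))
    z = lower @ true_w_img + 0.1 * rng.normal(size=(n_trials, dim_image_latent))
    c = higher @ true_w_txt + 0.1 * rng.normal(size=(n_trials, dim_text_latent))
    return lower, higher, z, c


lower_tr, higher_tr, z_tr, c_tr = simulate(n_train)
lower_te, higher_te, z_te, c_te = simulate(n_test)

# One linear model per pathway: lower regions -> image representation,
# higher regions -> text representation.
w_img = fit_ridge(lower_tr, z_tr, alpha=1.0)
w_txt = fit_ridge(higher_tr, c_tr, alpha=1.0)

# Predict both representations for held-out trials.
z_pred = lower_te @ w_img
c_pred = higher_te @ w_txt

print("image representation correlation:", mean_column_correlation(z_pred, z_te))
print("text representation correlation:", mean_column_correlation(c_pred, c_te))
```

In a real pipeline, the two predicted arrays would be handed to a pretrained, frozen diffusion model, which is why no generative model has to be trained in this setup.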

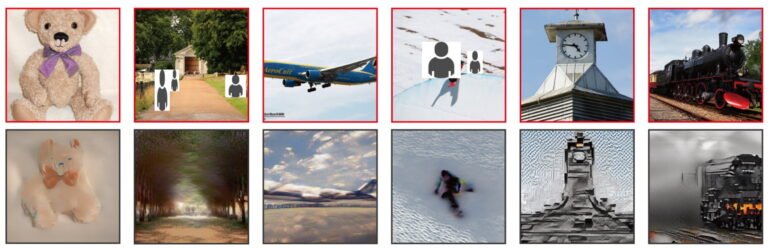

The researchers use fMRI images from the Natural Scenes Dataset (NSD) for their experiment and test whether they can use Stable Diffusion to reconstruct what subjects saw.

They show that the combination of image and text decoding provides the most accurate reconstruction. There are differences in accuracy between subjects - but these correlate with the quality of the fMRI images, the team says.

fMRI reconstruction leads to a better understanding of diffusion models

According to the team, the quality of the reconstructions is on par with the best current methods, but without the training of complex AI models that those methods require.

Conversely, the team also uses models derived from the fMRI data to investigate individual building blocks of Stable Diffusion, such as how semantic content is generated in the reverse diffusion process or what processes occur in the U-Net.

In addition, the team quantitatively interprets the image transformations at different stages of diffusion. In this way, the researchers aim to contribute, from a biological perspective, to a better understanding of diffusion models, which are widely used but still poorly understood.