Undressed by a prompt: expert fears new "DeepNude" scandal

While AI image generators are mainly used for creative purposes, they also open the doors for fake nude photos. AI artist Denis Shiryaev wants to educate the public about this.

With great power comes great responsibility - a phrase that also applies to AI image generators, because they are closely linked to deepfakes, which we define as AI-generated synthetic visual media created for ethically questionable or criminal purposes.

The history of deepfakes shows that public AI image generators have their origins in pornographic images and videos made without consent. Celebrities in particular, for whom there is plenty of AI training material on the Internet, were the targets of early deepfake creators.

But with the latest tools, anyone can become a victim - all it takes is a single image as a template, as AI artist Denis Shiryaev shows.

Undressed by AI

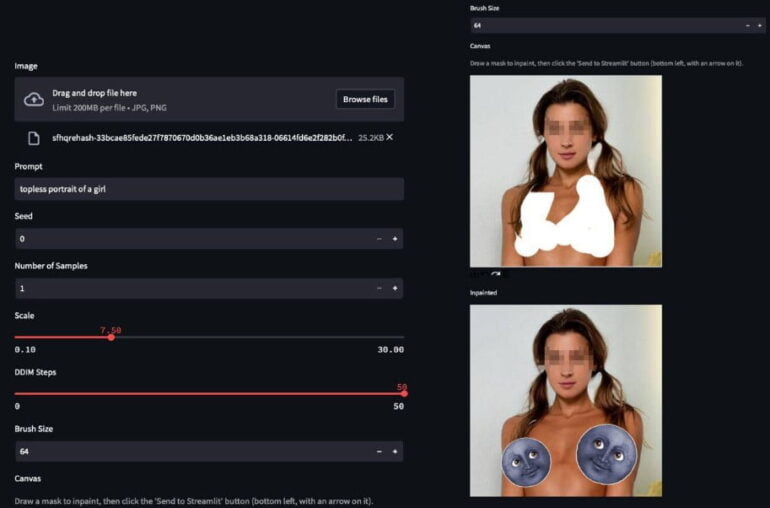

Techniques such as img2img ("picture to picture") or inpainting, i.e. replacing selected image content with generated visuals, make it even easier to create deepfakes. That's why we need to talk about what people with bad intentions can do with the technology that is currently available.

Anyone who hears about deepfakes probably first thinks of entertaining videos like that of the fake Margot Robbie or Tom Cruise on TikTok, or the possible spread of misinformation. Things get intimate when people are virtually stripped and their underwear is replaced with realistic-looking sexual characteristics.

A program called DeepNude was capable of doing this three years ago. The software made headlines and spread quickly, but was just as quickly discontinued due to heavy criticism. The reason given by the operators at the time: "The world is not ready yet."

Whether the world is ready in 2022 remains to be seen, but technology is advancing unabated.

Image AI providers ban NSFW content, but those bans have limited effect

The companies behind DALL-E 2, Midjourney, or Stable Diffusion know the risks and are taking precautions, at least on their platforms, such as word filters and permanent blocks for repeated violations of content guidelines.

Midjourney's terms of use, for example, state: "No adult content or gore. Please avoid making visually shocking or disturbing content. We will block some text inputs automatically."

Stability AI, the company behind Stable Diffusion, also prohibits the generation of "NSFW, lewd, or sexual material" for its DreamStudio web service. The restrictions of the open-source model, however, are somewhat different: only sexual content without the consent of the people "who might see it" is prohibited.

Furthermore, compliance with the rules of open-source models cannot be enforced. The same is true of other freely available generative image AIs, which do not need to be as powerful as the well-known models but could probably be fine-tuned with manageable effort for special use cases such as nudity. DeepNude already achieved this three years ago.

Shiryaev sees another DeepNude scandal brewing

Denis Shiryaev, CEO of AI toolbox "neural.love" and known as an AI artist, thinks a new DeepNude scandal is possible once the public learns how easy it is to use Stable Diffusion to turn a photo with clothes into a nude photo.

He demonstrates AI-generated nudity using Stable Diffusion as an example. In doing so, he violates the open-source model's guidelines.

Today's software is also much easier to use than the GAN-based DeepNude, Shiryaev says. Basically, any person who has even a single photo available on the Internet or on a hard drive is at risk of being defamed by fake nude photos.

"I believe it is better to prepare the general audience (outside of the AI industry bubble) that not all that they see in photos is real anymore, since almost anyone can reproduce those results quite easily and fast," Shiryaev writes on Twitter.
