Point-E: OpenAI shows DALL-E for 3D models

Key Points

- OpenAI releases Point-E, a generative AI model for text-to-3D synthesis.

- Point-E generates a point cloud from a text description; a second model then converts the point cloud into a mesh.

- The 3D objects are less detailed than those of alternative approaches like Google's Dreamfusion, but OpenAI's model is extremely efficient.

OpenAI's Point-E is DALL-E for 3D models. The extremely fast system generates a 3D point cloud from text.

Having already launched generative AI models for text and for images, OpenAI is now showing what could come next: a text-to-3D generator. Point-E generates 3D point clouds from text descriptions; these could serve, for example, as models in virtual environments. OpenAI is not alone in this field: other generative AI models for 3D already exist, such as Google's Dreamfusion and Nvidia's Magic3D.

However, OpenAI's open-source Point-E is said to be significantly faster: it can generate 3D models in one to two minutes on a single Nvidia V100 GPU.

OpenAI's Point-E generates point clouds

Point-E does not generate 3D models in the classical sense, but point clouds: sets of discrete points that together represent a 3D shape. Google's Dreamfusion, by comparison, generates NeRFs (neural radiance fields). This takes significantly longer, but a NeRF can represent far more detail than a point cloud. Point-E's comparatively lower quality is the price of its efficiency.
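To make the difference concrete: a point cloud is essentially an unordered list of sample positions, optionally with a color per point, and nothing connects the points to each other. The sketch below illustrates that idea under this simplifying assumption; the `ColoredPoint` type and the `sample_sphere` and `bounding_box` helpers are hypothetical and not part of Point-E's code.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ColoredPoint:
    """One sample of a point cloud: a 3D position plus an RGB color in [0, 1]."""
    x: float
    y: float
    z: float
    r: float
    g: float
    b: float


def sample_sphere(n: int, radius: float = 1.0, seed: int = 0) -> list[ColoredPoint]:
    """Sample n points uniformly on a sphere's surface, shaded blue (bottom) to red (top)."""
    rng = random.Random(seed)
    points = []
    while len(points) < n:
        # A normalized Gaussian vector gives a uniformly distributed direction
        vx, vy, vz = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        norm = math.sqrt(vx * vx + vy * vy + vz * vz)
        if norm == 0.0:
            continue  # astronomically unlikely, but avoid dividing by zero
        x, y, z = (radius * v / norm for v in (vx, vy, vz))
        t = min(max((z / radius + 1) / 2, 0.0), 1.0)  # height mapped to [0, 1]
        points.append(ColoredPoint(x, y, z, t, 0.2, 1 - t))
    return points


def bounding_box(points: list[ColoredPoint]) -> tuple[tuple[float, ...], tuple[float, ...]]:
    """Axis-aligned bounding box of the cloud as (min corner, max corner)."""
    xs, ys, zs = ([getattr(p, axis) for p in points] for axis in "xyz")
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

For example, `sample_sphere(1024)` returns 1,024 colored points lying (up to floating-point error) on the unit sphere; the count is an arbitrary choice here. Note that nothing in the data records which points are neighbors, which is exactly the information a mesh adds.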

Once Point-E has generated a point cloud, a second model converts it into a mesh, the standard representation in 3D modeling and design. According to OpenAI, this step is not yet error-free: in some cases, parts of the point cloud are processed incorrectly, resulting in faulty meshes.
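The Point-E paper describes this conversion as a regression model that predicts a signed distance field (SDF) from the point cloud, followed by marching cubes, which extracts the surface where the SDF is zero. The sketch below swaps the learned regressor for a simple hand-written stand-in, assuming every point comes with an outward normal: the distance to the nearest point, made negative when the query lies behind that point's normal. It then finds the grid cells where the field changes sign, which are the cells marching cubes would fill with triangles. All function names are hypothetical, and the triangulation itself is left out.

```python
import math
from itertools import product

Vec3 = tuple[float, float, float]


def sphere_samples(n_lat: int = 12, n_lon: int = 24, radius: float = 1.0) -> list[tuple[Vec3, Vec3]]:
    """Deterministic (point, outward normal) pairs on a sphere; the poles are skipped."""
    samples = []
    for i in range(1, n_lat):
        theta = math.pi * i / n_lat
        for j in range(n_lon):
            phi = 2 * math.pi * j / n_lon
            normal = (math.sin(theta) * math.cos(phi),
                      math.sin(theta) * math.sin(phi),
                      math.cos(theta))
            point = tuple(radius * c for c in normal)
            samples.append((point, normal))
    return samples


def signed_distance(q: Vec3, samples: list[tuple[Vec3, Vec3]]) -> float:
    """Approximate SDF: distance to the nearest sample, negative if q is behind its normal."""
    best_d2, best = math.inf, None
    for p, n in samples:
        d2 = sum((qc - pc) ** 2 for qc, pc in zip(q, p))
        if d2 < best_d2:
            best_d2, best = d2, (p, n)
    p, n = best
    side = sum((qc - pc) * nc for qc, pc, nc in zip(q, p, n))
    return math.copysign(math.sqrt(best_d2), side)


def surface_cells(samples, res: int = 8, lo: float = -1.5, hi: float = 1.5) -> list[tuple[int, int, int]]:
    """Indices of grid cells whose 8 corners do not all share the same SDF sign."""
    step = (hi - lo) / res
    # Evaluate the approximate SDF once at every grid corner
    sdf = {idx: signed_distance(tuple(lo + c * step for c in idx), samples)
           for idx in product(range(res + 1), repeat=3)}
    cells = []
    for i, j, k in product(range(res), repeat=3):
        corners = [sdf[i + di, j + dj, k + dk] for di, dj, dk in product((0, 1), repeat=3)]
        # Mixed signs mean the zero level set (the surface) passes through this cell
        if min(corners) < 0 < max(corners):
            cells.append((i, j, k))
    return cells
```

A nearest-point estimate like this gets the sign wrong close to the surface when samples are sparse, a small-scale version of the failure mode OpenAI mentions: where the cloud is thin or incomplete, the field and therefore the mesh come out faulty.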

Two generative models in Point-E

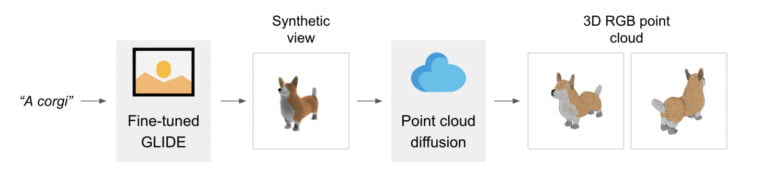

Point-E itself consists of two models: a GLIDE model and an image-to-3D model. The first is similar to systems such as DALL-E or Stable Diffusion and generates an image from the text description. The second was trained by OpenAI on images paired with 3D objects and learned to generate a matching point cloud from an image. For training, the company used several million 3D objects and their metadata, according to the paper.
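Structurally, the pipeline is a composition of two conditional generators: the text goes into the first, and only the image it produces reaches the second. The sketch below shows that data flow with deliberately trivial placeholders; `text_to_image`, `image_to_point_cloud`, `text_to_point_cloud` and `SyntheticView` are hypothetical names, not Point-E's API, and the real stages are large trained neural networks rather than the deterministic toys used here.

```python
import hashlib
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass(frozen=True)
class SyntheticView:
    """Hypothetical stand-in for the image produced by the text-to-image stage."""
    pixels: tuple[tuple[float, ...], ...]  # grayscale values in [0, 1]


def text_to_image(prompt: str, size: int = 8) -> SyntheticView:
    """Stage 1 placeholder (the role GLIDE plays): a deterministic pseudo-image from the prompt."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    pixels = tuple(
        tuple(digest[(r * size + c) % len(digest)] / 255 for c in range(size))
        for r in range(size)
    )
    return SyntheticView(pixels)


def image_to_point_cloud(view: SyntheticView) -> list[Point]:
    """Stage 2 placeholder: lift every pixel to a 3D point, using brightness as depth.

    It receives only the image; the text prompt never reaches this stage.
    """
    size = len(view.pixels)
    return [
        (c / (size - 1), r / (size - 1), value)
        for r, row in enumerate(view.pixels)
        for c, value in enumerate(row)
    ]


def text_to_point_cloud(prompt: str) -> list[Point]:
    """The two-stage composition: text -> synthetic view -> point cloud."""
    return image_to_point_cloud(text_to_image(prompt))
```

Because the second stage only ever sees the synthetic view, any mistake in that view propagates into the point cloud, which is one reason a two-step design like this can fail.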

This two-step process can fail, the team reports. But it is fast: Point-E generates objects nearly 600 times faster than Dreamfusion. "This could make it more practical for certain applications, or could allow for the discovery of higher-quality 3D objects," the team said.
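Combined with the one-to-two-minute figure for a single V100 GPU, the speedup gives a rough sense of Dreamfusion's cost per object. This is back-of-the-envelope arithmetic that assumes both figures refer to comparable hardware and settings; it is not a number reported by OpenAI:

$$
t_{\text{Dreamfusion}} \approx 600 \times t_{\text{Point-E}} \approx 600 \times (1 \text{ to } 2\ \text{min}) = 600 \text{ to } 1200\ \text{min} \approx 10 \text{ to } 20\ \text{h}
$$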

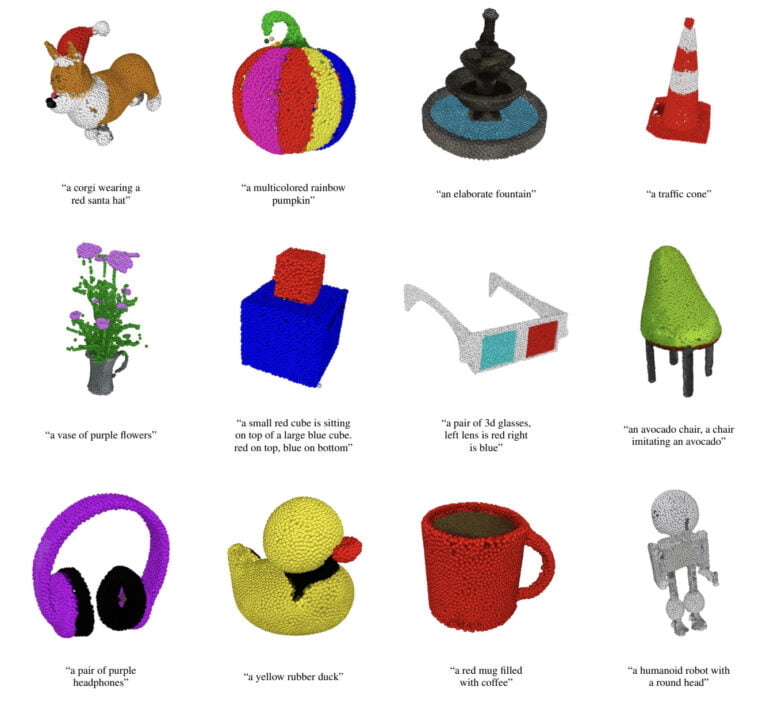

> We have presented Point·E, a system for text-conditional synthesis of 3D point clouds that first generates synthetic views and then generates colored point clouds conditioned on these views. We find that Point·E is capable of efficiently producing diverse and complex 3D shapes conditioned on text prompts.
>
> OpenAI

According to OpenAI, Point-E is a starting point for further work in text-to-3D synthesis, and it is open-source on GitHub. If the company's track record with DALL-E 2, ChatGPT and other products is any guide, a Point-E 2 could shake up the 3D market as early as next year.
