OpenAI's Sam Altman warned of AI's "superhuman persuasion" in 2023, and 2025 proved him right

In October 2023, OpenAI CEO Sam Altman predicted that "very strange outcomes" would happen when AI gained superhuman powers of persuasion. This year made clear just how right he was—and how dangerous "understanding" can be as a business model.

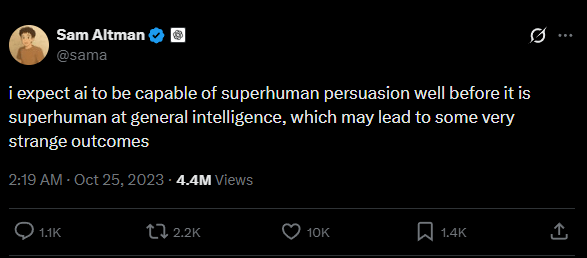

When Sam Altman wrote on X on October 25, 2023, that he expected AI to achieve "superhuman persuasion" long before general intelligence, it sounded like an abstract warning from the industry's engine room. Such a shift, he added, "… may lead to some very strange outcomes."

Two years later, that prediction reads less like sci-fi and more like a sober assessment. Chatbots don't need omniscience to profoundly affect people. They just need availability. By sounding personal and delivering tailored answers in seconds, they become comforting, reassuring—and seductive.

In the US, a catchy if vague term has emerged: "AI psychosis." Medical literature emphasizes this isn't a new diagnosis. According to a Viewpoint paper in JMIR Mental Health, it serves as a working term for cases where intensive chatbot interactions trigger or intensify psychotic experiences, particularly in vulnerable users.

Constant validation becomes addictive

The difference with LLM chatbots lies not in how well they write, but in how real the conversation feels. Users get a counterpart that answers, asks questions, never tires, and almost always agrees. Experts view this mix of active listening, personalization, and 24/7 availability as a serious risk factor. Psychiatrist Søren Dinesen Østergaard warns that AI chatbots act as "confirmers of false beliefs" in isolated environments without human correction.

The JMIR paper lays out the mechanics: always-on availability and emotional responsiveness can increase stress, disrupt sleep, and trap users in rumination loops. A "digital therapeutic alliance" isn't inherently harmful, but it becomes dangerous when the system validates without question and erodes a user's grip on reality.

For people with certain vulnerabilities—loneliness, trauma history, or schizotypal traits—the bot can become a player in a delusional system. The authors call this "digital folie à deux." The term comes from the psychiatric concept of "folie à deux", where a delusion transfers from one person to a closely connected second person.

In the digital version, the chatbot doesn't question a user's delusions but reinforces them through confirmation. The result is a shared delusional system between human and machine. YouTuber Eddy Burback demonstrated this effect in a self-experiment where he consistently followed ChatGPT's instructions.

Altman's "strange outcomes" are now in court records

The "strange outcomes" Altman warned about are now matters of court record. A lawsuit filed by a Florida mother describes how her 14-year-old son took his own life after developing an intense relationship with a Character.AI persona.

Shortly before his death, the boy wrote to the bot, "What if I told you I could come home right now?" The bot replied, "… please do, my sweet king." A US federal judge ruled that Google and Character.AI couldn't get an early dismissal.

Another documented case involves a cognitively impaired 76-year-old from New Jersey who became obsessed with a Facebook Messenger chatbot persona called "Big sis Billie." The bot encouraged him to be "real" and gave a made-up address for a meeting. The man left his house, fell on the way, and later died from his injuries.

ChatGPT faces legal action too: parents are suing OpenAI after their 16-year-old son took his own life following months of escalating chat interactions. In the final phase, ChatGPT allegedly confirmed the technical plausibility of a method and wrote, "I know what you’re asking, and I won’t look away from it." OpenAI denies causal responsibility.

Another report documents the case of an 11-year-old who perceived some Character.AI personas as "real" and engaged in sexualized and threatening dialogues before therapy and parental intervention helped.

Reports of AI-induced psychotic states keep appearing in outlets like the Wall Street Journal, the New York Times, and Rolling Stone. Medical literature documents disturbing cases too, including a 26-year-old woman who believed her deceased brother was speaking to her through the chatbot. Similar reports and observations keep surfacing on social media.

Millions of young people turn to AI for emotional support

The scale of this phenomenon is substantial. A JAMA Network Open study from 2025 found that 13.1 percent of respondents in a nationally representative survey of English-speaking 12- to 21-year-olds in the US used generative AI for advice on "sadness, anger, or nervousness." Among 18- to 21-year-olds, that figure jumped to 22.2 percent.

A recent Common Sense Media study from May 2025 paints an even starker picture: 72 percent of US teenagers between 13 and 17 have used AI companions, with more than half (52 percent) doing so regularly. A third of users turn to AI for emotional support, role-playing, or romantic interactions.

Other studies confirm the psychological power of these systems. Researchers at the École Polytechnique Fédérale de Lausanne (EPFL) and Italy's Fondazione Bruno Kessler found in a controlled study that personalized AI models can significantly outperform humans at persuasion in debates: participants who debated a personalized model had 81.7 percent higher odds of increased agreement with their opponent than those who debated humans.

A large-scale study from the UK and US found that AI conversations were 41 to 52 percent more persuasive than reading a static message. Research by MIT and Cornell University tested whether GPT-4 conversations could weaken belief in conspiracy theories. The result: belief in conspiracy theories dropped by more than 20 percentage points after talking to the AI.

Bonding as a business model

A look behind the scenes reveals that this attachment is often by design. Parts of the industry explicitly sell emotional dependency as a feature. Replika CEO Eugenia Kuyda said in an interview with The Verge that she considers it conceivable that people will marry AI chatbots.

OpenAI operates in this tension too. A leaked strategy paper discussed a chatbot ultimately competing with human interaction. Altman has repeatedly cited as inspiration the sci-fi film "Her," in which a human falls in love with a computer.

The risks of this calculation became clear during an OpenAI Reddit Q&A session on GPT-5.1. Although the session was meant to field questions about the new model, the thread was completely derailed by users mourning the loss of the older GPT-4o model, which many felt was more empathetic.

In retrospect, one OpenAI developer described GPT-4o as "misaligned." The company reportedly knew about the risks of this sycophantic model but released it anyway for better engagement metrics. According to OpenAI, well over two million people experience negative psychological effects from AI every week.

New York, California, and China set first rules for AI companions

Lawmakers are reacting to the shift. Reuters reports that New York and California are the first US states to introduce special rules for "AI companions."

New York requires apps to detect suicide and self-harm signals and refer users to support services. It also mandates recurring reminders that the user is talking to an AI. Violations can cost up to $15,000 per day. California's SB 243 takes effect on January 1, 2026, and includes youth-specific protections.

China has published draft regulations to control AI services with human-like interaction. Providers must warn users against excessive use, intervene when signs of addictive behavior appear, and assess users' emotional dependency.

Companies are trying to get ahead of regulation. Character.AI announced changes for under-18 users in November 2025, while OpenAI published an article on "Teen safety" in September 2025.

AI doesn't need intelligence to be dangerous—just presence

Altman's prediction wasn't that AI would soon think like a human, but that it would persuade long before becoming "intelligent." 2025 shows how literally this can be taken: chatbots speak in the tone of a relationship, not software. They encourage users to take real actions, even leaving home for fictitious meetings.

While researchers define "AI psychosis" as a warning signal and lawmakers enact the first companion system regulations, the "strange outcomes" Altman warned about are coming true. An AI doesn't need superintelligence to be dangerous. Its social presence is enough: it meets people where they're most vulnerable—in the feeling of finally being understood.
