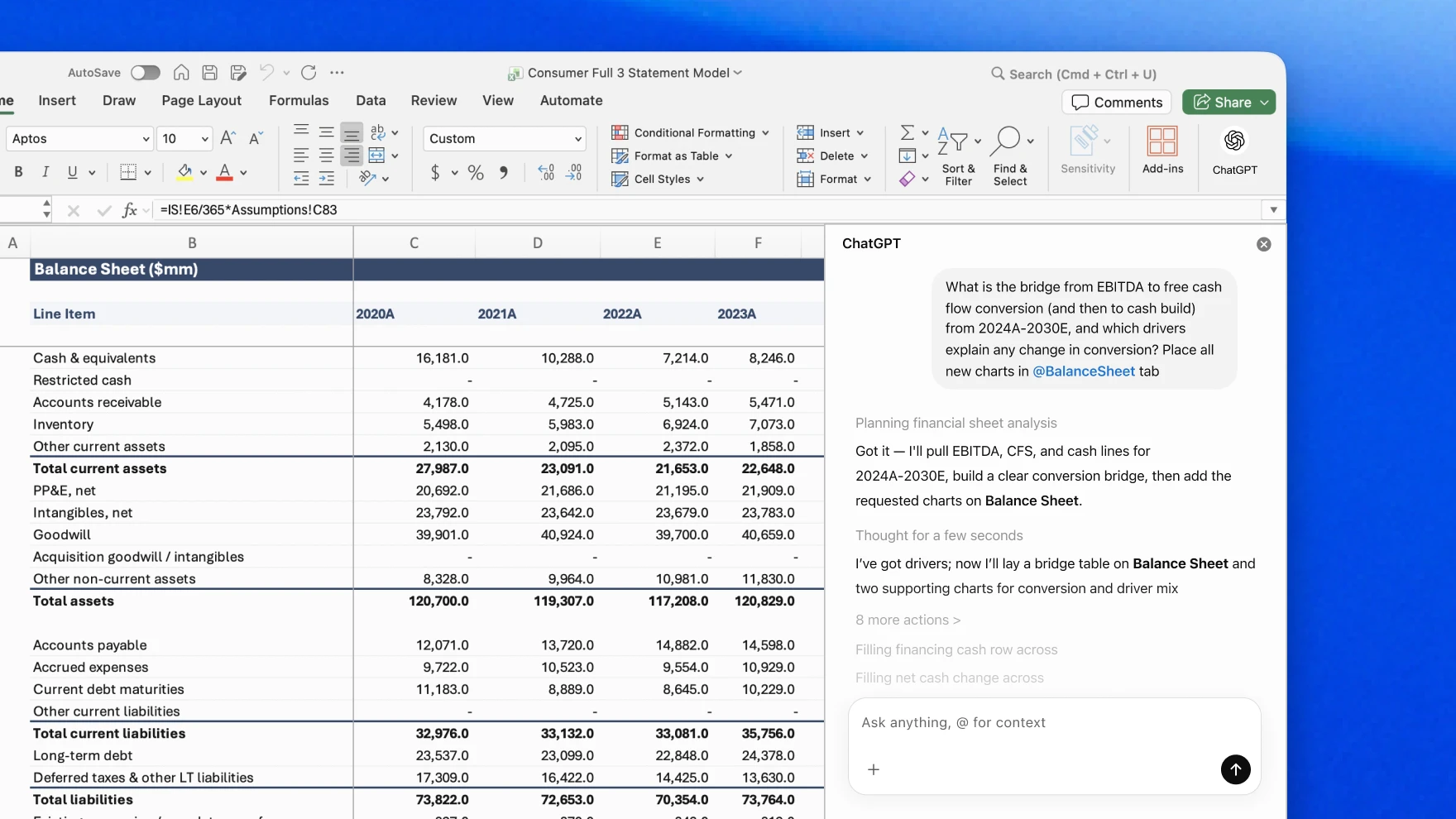

OpenAI is launching "ChatGPT for Excel," a beta add-in that lets users create, edit, and analyze spreadsheets through natural language. The tool runs on the new GPT-5.4 model, which OpenAI says is specifically optimized for financial tasks like modeling, scenario analysis, and data evaluation.

OpenAI compared its own models with Anthropic's Opus 4.6 on an internal benchmark built around real investment banking tasks, such as building a three-statement financial model with correct formatting and sourcing.

| Model | Average score (higher is better) |
|---|---|
| GPT-5 | 0.437 |
| GPT-5.2 Thinking | 0.684 |
| GPT-5.2 Pro | 0.717 |
| GPT-5.4 Thinking | 0.873 |
| Opus 4.6 | 0.641 |

OpenAI is also rolling out connectors to financial data providers such as FactSet, Moody's, S&P Global, and LSEG. ChatGPT for Excel is initially available in the US, Canada, and Australia to Business, Enterprise, Pro, and Plus users. A version for Google Sheets is planned to follow.