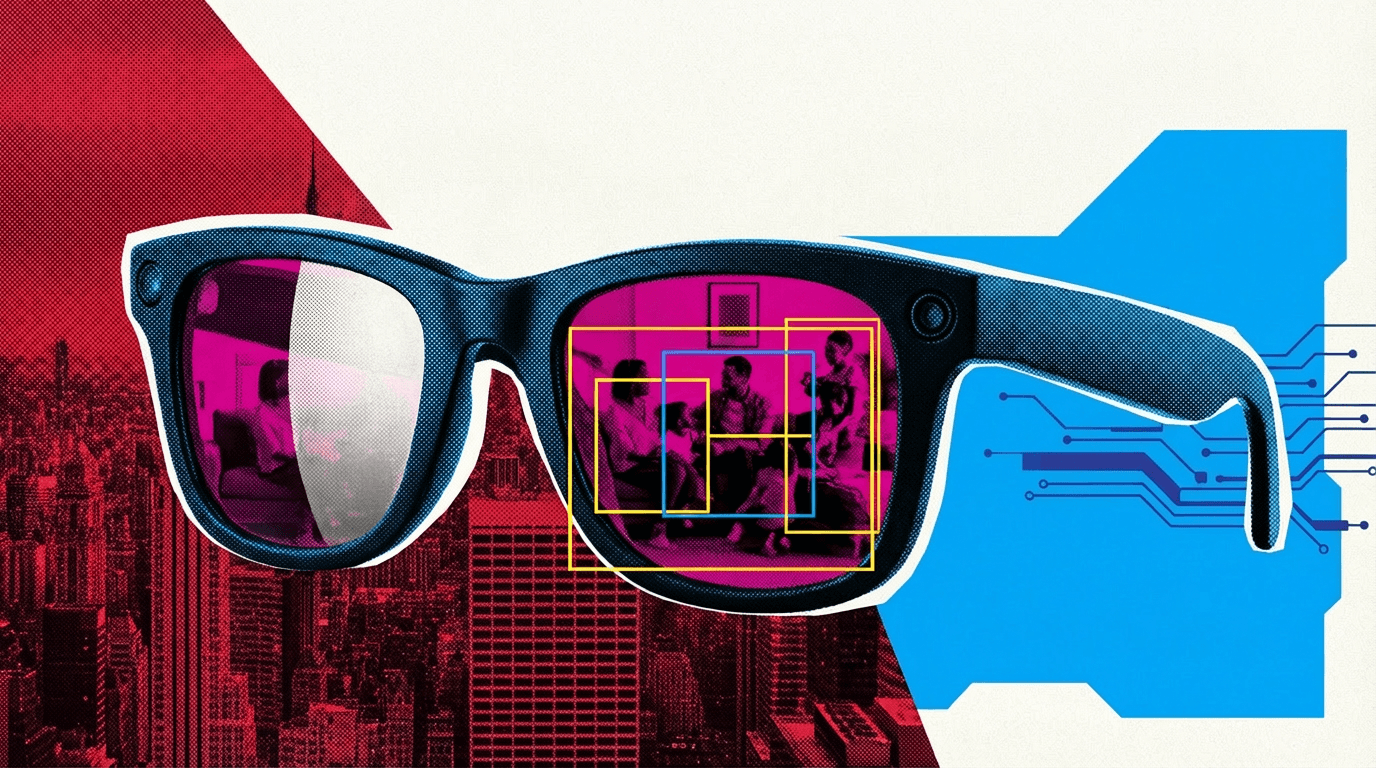

Meta creates new applied AI engineering division

Meta is building a new applied AI engineering organization, according to an internal memo obtained by the Wall Street Journal. The new teams will be led by Maher Saba, currently a vice president in Meta's Reality Labs division, and will report to CTO Andrew Bosworth.

The structure is designed to be extremely flat, with up to 50 employees per manager. The new division will work alongside Meta's Superintelligence Lab to build the "data engine" that speeds up improvements to Meta's AI models.

According to Saba, the organization consists of two teams: one focused on interfaces and tools, and another on tasks, data collection, and evaluations.

Meta restructured its AI operations last summer, creating the Superintelligence Lab under former Scale AI CEO Alexandr Wang. CEO Mark Zuckerberg announced in January that the company would release new models and products in the coming months.