Google downplays AI's environmental impact in new study

Google's latest report on the environmental impact of its Gemini AI apps leads with a bold headline: a typical text prompt uses just 0.24 watt-hours of energy, supposedly less than watching TV for nine seconds.

The comparison is classic PR. By focusing on one low-impact query, Google shifts attention away from the main issue: scale. The real environmental cost comes from billions of prompts processed every day. No one watches TV for nine seconds, and almost no one sends just one chatbot request.

Still, Google presents the study as a comprehensive look at AI energy use in real-world conditions. The key stats: 0.24 watt-hours, 0.03 grams of CO₂, and about five drops of water (0.26 milliliters) per text prompt.
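To make the scale argument concrete, here is a minimal sketch that multiplies Google's per-prompt figures by a daily prompt count. The figure of one billion prompts per day is a hypothetical round number chosen for illustration, not a value from the study, and the function names are made up for this example. The sketch also back-calculates the TV wattage implied by the nine-second comparison.

```python
# Per-prompt figures as quoted from Google's study.
ENERGY_WH_PER_PROMPT = 0.24
CO2_G_PER_PROMPT = 0.03
WATER_ML_PER_PROMPT = 0.26


def daily_totals(prompts_per_day):
    """Scale the per-prompt figures to a daily total.

    Assumes every prompt is a "typical" text prompt, which is exactly
    the simplification the article criticizes.
    """
    return {
        "energy_mwh": ENERGY_WH_PER_PROMPT * prompts_per_day / 1e6,  # Wh -> MWh
        "co2_tonnes": CO2_G_PER_PROMPT * prompts_per_day / 1e6,      # g -> t
        "water_m3": WATER_ML_PER_PROMPT * prompts_per_day / 1e6,     # mL -> m^3
    }


def implied_tv_watts(seconds=9):
    """Power draw of a TV that uses 0.24 Wh in the given number of seconds."""
    return ENERGY_WH_PER_PROMPT * 3600 / seconds


if __name__ == "__main__":
    HYPOTHETICAL_PROMPTS_PER_DAY = 1_000_000_000  # assumption, not from the study
    for name, value in daily_totals(HYPOTHETICAL_PROMPTS_PER_DAY).items():
        print(f"{name}: {value:,.0f}")
    print(f"implied TV power: {implied_tv_watts():.0f} W")
```

Under that assumption, the "trivial" per-prompt numbers add up to roughly 240 megawatt-hours, 30 tonnes of CO₂, and 260 cubic meters of water per day, before any of the heavier workloads are counted.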

All the things Google didn't count (it's a lot)

While Google's move toward transparency is notable, the study leaves out major details. The biggest omission: it only counts a "typical" Gemini App text prompt. But what does "typical" mean?

There's no information on average prompt length or how more intensive tasks are factored in. The real energy drains (document analysis, media uploads, image generation, audio transcription, live video, advanced web searches, and code generation) aren't counted. By highlighting only simple text queries, Google sidesteps the higher energy costs of complex workloads and ignores the likelihood that real averages are much higher once those workloads are included.

Google's AI also powers products beyond the Gemini app. It runs behind the scenes in services like Google Search, multiplying energy use far past what the study measures.

There's a technical gap, too. Newer models use "reasoning tokens" for logical tasks, which can drive up token counts dramatically. In June, Google processed 980 trillion tokens across its AI products and APIs—more than double May's total, likely due to heavier use of reasoning-intensive models. But the study just says "Gemini App models," so it's unclear whether these newer reasoning models are included.

Infrastructure is another blind spot. Requests with more tokens or non-text inputs put extra strain on the massive networks linking thousands of AI chips. Google's study only measures what happens inside individual "AI computers," excluding internal data center networks and external data transfers. For simple text prompts, the omission might be minor, but for complex jobs, a meaningful share of the energy goes uncounted.

Training is left out as well. Google's report only looks at inference, the power needed to run a trained model. Training a large model can take weeks or months, using thousands of chips and consuming gigawatt-hours of electricity, so it remains a large part of total energy consumption. The company says it plans to analyze training energy in a future report.

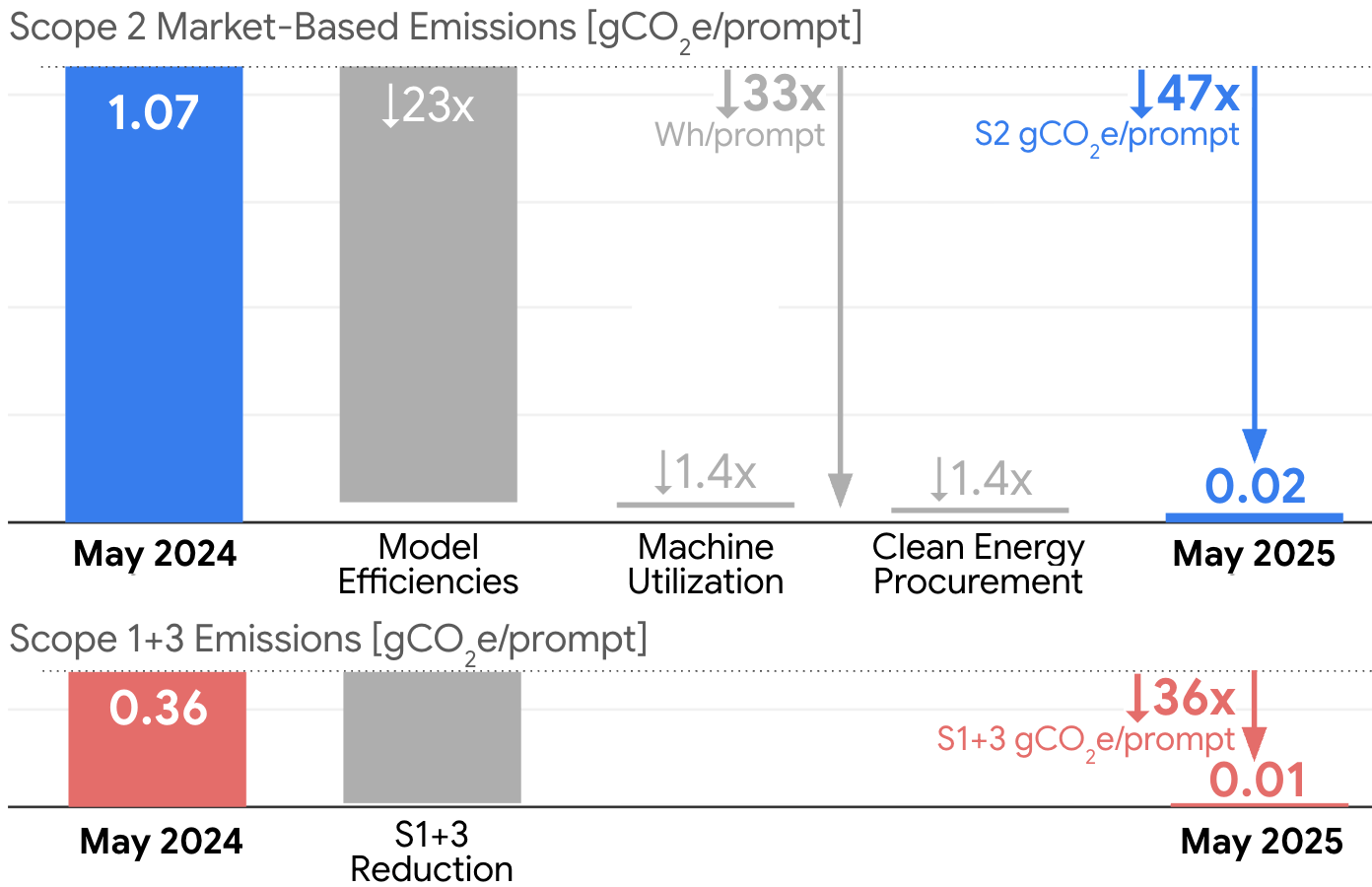

Google also uses a "market-based" method for reporting CO₂ emissions. Instead of showing actual, location-based emissions, Google lowers its reported numbers by buying renewable energy certificates, even if the data center itself still runs on fossil fuels. The real, location-based emission factor is almost four times higher than the market-based value Google uses. This approach makes the carbon numbers look better on paper, but it doesn't reduce the actual emissions coming from the data center.
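As a rough illustration of what the accounting method does to the headline number, the sketch below scales the reported market-based figure by the ratio mentioned above. The ratio of 4.0 is an assumption that rounds "almost four times" up, so the result is an approximate upper bound rather than a value from Google's report.

```python
MARKET_BASED_G_PER_PROMPT = 0.03  # reported by Google, market-based

# Assumption: "almost four times higher" rounded up to 4.0.
LOCATION_TO_MARKET_RATIO = 4.0


def location_based_estimate(market_based_g, ratio=LOCATION_TO_MARKET_RATIO):
    """Approximate location-based emissions per prompt, in grams of CO2."""
    return market_based_g * ratio


if __name__ == "__main__":
    estimate = location_based_estimate(MARKET_BASED_G_PER_PROMPT)
    print(f"location-based estimate: just under {estimate:.2f} g CO2 per prompt")
```

On that basis the per-prompt footprint would sit a little below 0.12 grams instead of 0.03 grams.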

Efficiency gains, but only for select workloads

Despite these gaps, Google's numbers do show efficiency improvements. The company says it cut energy use per request by a factor of 33 in a year and reduced its CO₂ footprint per prompt by a factor of 44, partly thanks to cleaner electricity.

These gains come from software tweaks and better server utilization. For heavier tasks, only the necessary parts of the model activate. Google says its latest AI chips are 30 times more efficient than the first generation, and its data centers now run with just nine percent overhead.

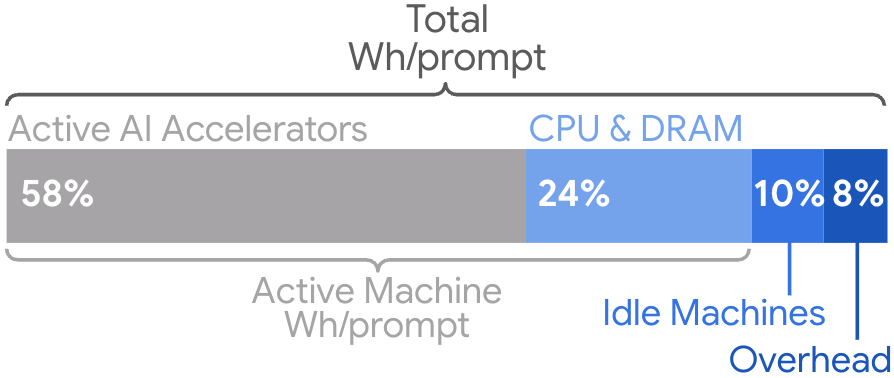

According to Google, 58 percent of energy goes to AI chips, 25 percent to RAM and CPUs, 10 percent to standby machines for failover, and 8 percent to cooling and infrastructure.
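The shares above can be turned into per-component energy for a single prompt. This is a sketch that assumes the percentages apply to the 0.24 watt-hour figure; the quoted shares add up to 101 percent, presumably from rounding, so the code normalizes them before splitting.

```python
ENERGY_WH_PER_PROMPT = 0.24

# Shares as quoted in the article, in percent.
SHARES_PERCENT = {
    "AI chips": 58,
    "RAM and CPUs": 25,
    "standby machines": 10,
    "cooling and infrastructure": 8,
}


def energy_by_component(total_wh, shares):
    """Split total energy across components, normalizing the shares
    so that they sum to 100 percent even if the inputs were rounded."""
    share_sum = sum(shares.values())
    return {name: total_wh * pct / share_sum for name, pct in shares.items()}


if __name__ == "__main__":
    breakdown = energy_by_component(ENERGY_WH_PER_PROMPT, SHARES_PERCENT)
    for name, wh in breakdown.items():
        print(f"{name}: {wh:.3f} Wh")
```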

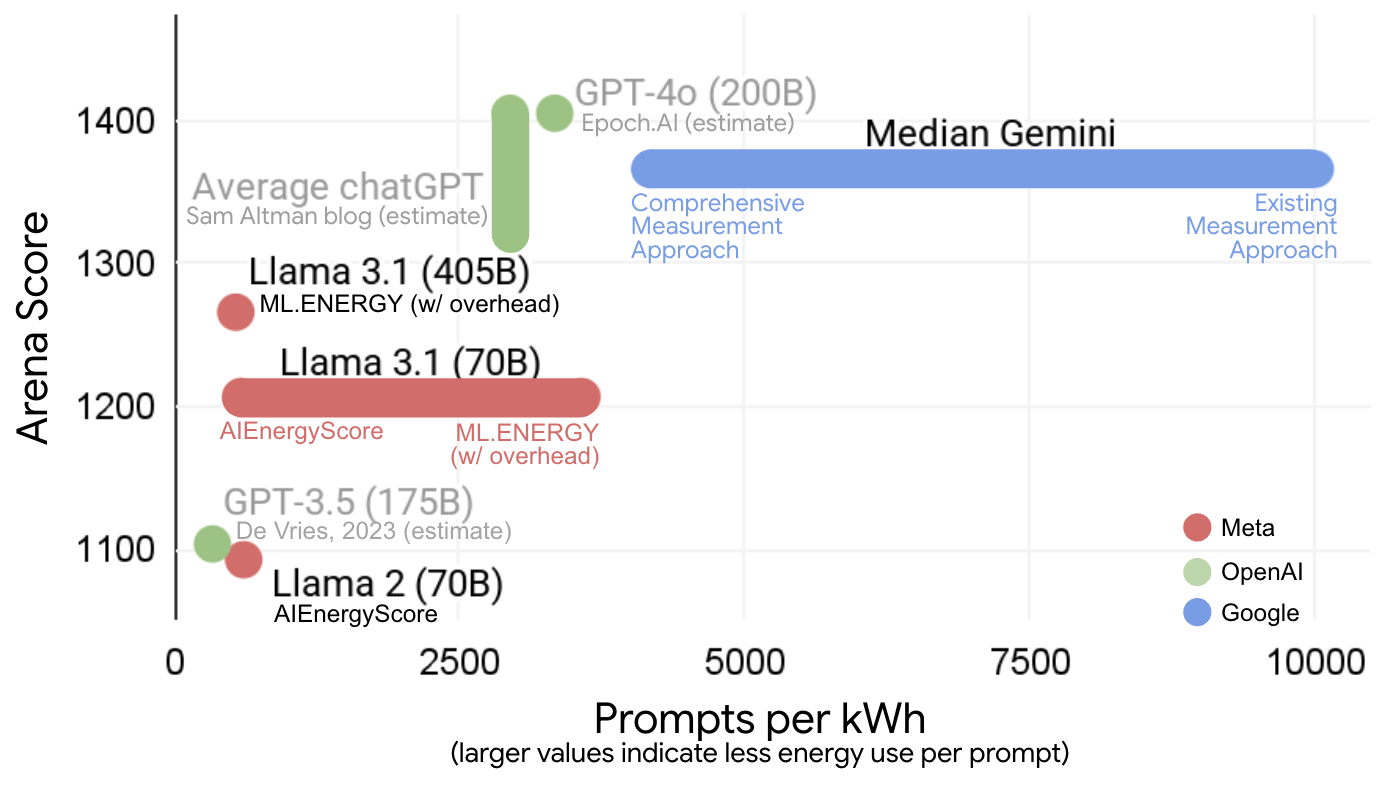

Independent studies estimate energy use for AI models at between 0.3 and 3 watt-hours or more per request. OpenAI CEO Sam Altman recently said ChatGPT uses about 0.34 watt-hours per prompt, though he didn't say how he got that number. Mistral's most recent report found higher per-request emissions and water consumption for a large language model.

Google points out that comparing studies is tough, since there's no standard for what counts toward total energy use. That's fair, but Google's selective, fine-tuned approach doesn't help transparency.

Spin over substance

At first glance, the environmental impact of a single text prompt looks trivial. Yet the central question remains unresolved: What are the actual resource demands of a global AI system that processes billions of queries each day, many of them significantly more complex than a simple text prompt? Google's study doesn't answer that.

The scale of investment shows this is more than a theoretical issue. Trillions of dollars are flowing into new data centers and energy supplies worldwide. Even DeepMind CEO Demis Hassabis recently boasted that Google's AI chips could "melt" under the strain of surging demand—hardly the image you'd expect from a minimalist energy balance sheet.
