"Made by Google": Google outpaces Apple with Gemini

Google showcased how the AI assistant Gemini will be more deeply integrated into Android, apps, and the new Pixel devices at the "Made by Google" keynote. The company also provided updates on accessibility and camera features.

At its ninth "Made by Google" event, Google unveiled a series of updates that bring both new and existing AI features to Android devices. The focus was on the AI assistant Gemini and the new Pixel devices.

Google is responding to Apple's recent announcement that it will integrate its own AI, "Apple Intelligence," into many parts of iOS. However, few of Apple's promised features are available yet, and Apple is proceeding cautiously in the EU.

Google did not mention any such restrictions in its presentation and instead demonstrated how Gemini, as a multimodal assistant, will shape the company's future. Numerous new features are already reaching users or are expected to arrive in the coming weeks. This suggests that the tech giant has moved past its initially cautious stance on generative AI and is gradually living up to the expectations of a company that calls itself "AI first."

Deep integration of Gemini into Android

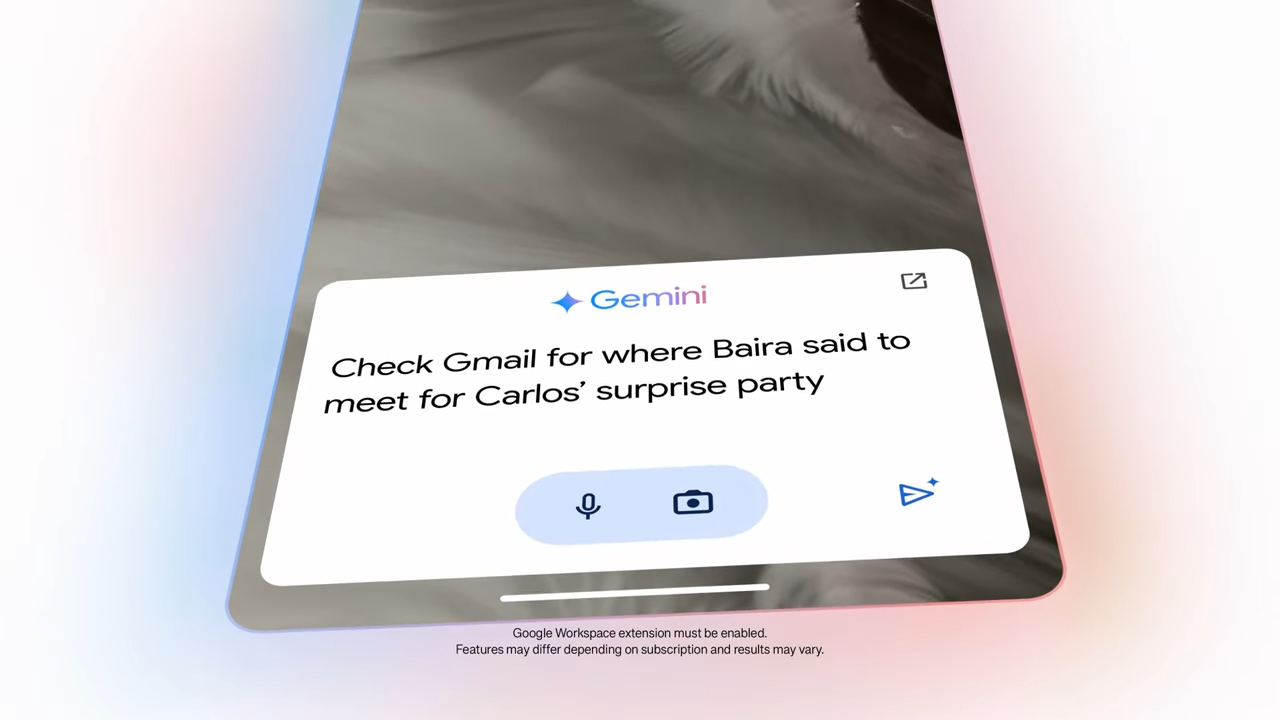

Much like Siri, Gemini is getting a comprehensive upgrade and will be deeply integrated into the Android operating system. Previously, the assistant was only available as a separate app. The system is designed to carry out complex tasks involving system apps such as to-do lists or calendars via natural-language commands, and it can use the camera to understand images.

On a landing page, Google explains the differences between the well-known Google Assistant and Gemini. Many of the previously known commands, such as checking the weather or controlling smart home devices, are already available in Gemini. Gemini represents a fundamental change in how we can interact with AI to get things done, according to Google. Because it is based on a larger, more mature language model, simple queries may sometimes take longer. This is expected to change in the future. Potentially incorrect answers can be checked with a few clicks through a Google search.

On the new Pixel smartphones, Gemini is deeply integrated into the user experience. For example, users can ask questions about a YouTube video that is currently playing or drag and drop AI-generated images into apps like Gmail. For sensitive applications, data remains on the device thanks to the on-device model "Gemini Nano," Google emphasizes.

This is particularly relevant for the new "Pixel Screenshots" and "Call Notes" features. Pixel Screenshots lets users organize and retrieve important information from their screenshots. Call Notes automatically creates summaries and transcripts of phone calls.

According to Google, Gemini should be able to help plan a dinner party, for example, by finding recipes in emails, adding ingredients to shopping lists, and creating suitable music playlists. Calendar integration is also planned: by taking a photo of a concert flyer, users should be able to check whether they have time for the event. However, during a live demo on stage, the assistant failed twice before the presenter had to switch to a different device.

Gemini Live is designed to let Gemini Advanced subscribers (21.99 euros per month, including 2 TB of cloud storage) have fluid conversations with the assistant, similar to OpenAI's Voice Mode. Users can choose from several voices, but at launch the feature is available only in English.

The company promises that Gemini will continue to improve over time. New models like "Gemini 1.5 Flash" and even deeper integration into services like Google Home, Phone, and Messages should ensure this. However, according to Google, there are still challenges, such as small delays in simple tasks, which they are working to resolve.

Gemini is expected to be available in 45 languages on hundreds of smartphone models from dozens of manufacturers, including Pixel, Samsung, and Motorola devices. On supported Samsung devices, the assistant can be opened with a swipe; on Pixel smartphones, by pressing the power button. The new Gemini features are rolling out to the first users starting now, with iOS support and additional languages following in the coming weeks.

Pixel 9, 9 Pro and 9 Pro XL with local and cloud AI

As expected, the new Pixel devices, especially the smartphones, were the focus of the hardware announcements. The Pixel 9 starts at 899 euros, the Pixel 9 Pro at 1,099 euros. The latter is now also available in an XL variant starting at 1,199 euros, which differs mainly in its larger display. The Pixel 9 Pro Fold is Google's thinnest foldable phone yet, with the largest display, and starts at 1,899 euros. All models feature improved cameras, but Google is relying heavily on AI-based post-processing.

For this, the devices rely on the new Google Tensor G4 chip, which Samsung is manufacturing for the last time. As usual, it includes a dedicated AI accelerator that enables tasks such as natural language processing in the Gemini assistant or image generation in Pixel Studio to run locally on the device. This saves Google cloud computing power and improves user privacy. The Tensor G4 is the first processor that can run Gemini Nano multimodally, that is, with an understanding of sounds and images.

In the new Pixel Studio app, Google combines a local diffusion model with the more powerful Imagen 3 in the cloud. Advances like MobileDiffusion, with which Google has already demonstrated generating AI images on smartphones in under a second, have likely fed into its development. With Magic Editor, users can select areas in photos and add details via text input. The feature is reminiscent of Adobe's Generative Fill in Photoshop, but Google is essentially applying the inpainting technique that many diffusion models already support.

Magic Eraser has already let users remove unwanted elements in a similar way within seconds for some time. Google is also using generative AI in other creative ways, such as "Add Me": after taking a group photo, the photographer hands the smartphone to someone else and steps into the frame, and the AI then combines the two shots into a single photo.

The cameras use new lenses that are meant to capture more light for sharper photos and videos. Google has also introduced a new HDR+ image processing pipeline and HDR video, both intended to produce more natural-looking shots. With HDR, the camera captures the same scene at several exposure levels in quick succession and merges the frames to increase the dynamic range.
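The merging step described above can be sketched in a few lines. This is a generic, textbook-style HDR merge, not Google's HDR+ pipeline: it assumes a linear sensor response, pixel values normalized to [0, 1], and known exposure times, and the function names `hat_weight` and `merge_exposures` are hypothetical. Each frame's pixel value is divided by its exposure time to estimate the scene brightness, and the estimates are averaged with weights that trust mid-tones and ignore nearly black or clipped pixels.

```python
# Generic HDR merge sketch (hypothetical helper names; NOT Google's HDR+ pipeline).
# Assumes a linear sensor response and pixel values normalized to [0, 1].


def hat_weight(v: float) -> float:
    """Trust mid-tones most; pure black (0) and clipped white (1) get zero weight."""
    return 1.0 - abs(2.0 * v - 1.0)


def merge_exposures(frames: list[list[float]], exposure_times: list[float]) -> list[float]:
    """Merge bracketed frames of the same scene into one radiance estimate per pixel."""
    n_pixels = len(frames[0])
    radiance = []
    for i in range(n_pixels):
        num = 0.0
        den = 0.0
        for frame, t in zip(frames, exposure_times):
            w = hat_weight(frame[i])
            num += w * frame[i] / t  # this exposure's estimate of scene brightness
            den += w
        if den > 0:
            radiance.append(num / den)
        else:
            # Every frame is black or clipped at this pixel: fall back to the
            # shortest exposure, which is least likely to be blown out.
            k = exposure_times.index(min(exposure_times))
            radiance.append(frames[k][i] / exposure_times[k])
    return radiance
```

For example, merging a short exposure `[0.05, 0.25, 0.5]` with a four-times-longer exposure `[0.2, 1.0, 1.0]` yields roughly `[0.05, 0.25, 0.5]`: the long exposure contributes in the shadows, while its clipped highlights receive zero weight.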

With Super Res Zoom Video, high-quality videos can be recorded at up to 20x zoom. Video Boost, which is reserved for the Pixel 9 Pro, 9 Pro XL, and 9 Pro Fold, uses AI upscaling to produce 8K video, from which 33-megapixel stills can be extracted.
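The 33 MP figure follows directly from the frame size of 8K video. Assuming 8K here refers to the common 7680 × 4320 resolution (the exact format is not stated), a single frame contains:

```latex
7680 \times 4320 = 33{,}177{,}600 \ \text{pixels} \approx 33\ \text{MP}
```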

Improved accessibility through AI in photos and translations

AI features are also meant to improve accessibility. The Pixel camera's "Guided Frame" feature now offers improved object recognition, face filtering, and sharper subjects. With the Pixel-exclusive "Magnifier" app, users can now search for specific words in their surroundings and use a picture-in-picture mode for better context.

For "Live Transcribe," there is now a dual-screen mode for foldable smartphones that better displays transcriptions during conversations. "Live Caption" and "Live Transcribe" support seven new languages that can be used offline on the device.

Pixel Buds Pro 2 and Pixel Watch 3: Wearables are also getting an AI upgrade

In addition to the new Pixel smartphones, Google introduced the Pixel Buds Pro 2, which are equipped with a custom-designed Tensor A1 chip for better audio performance and stronger noise cancellation. According to Google, the Tensor A1 enables active noise cancellation twice as strong as that of the first-generation Buds Pro, along with significantly better battery life. Gemini can be accessed by pressing one of the earbuds.

Also new is the next generation of the Pixel Watch. The Pixel Watch 3 comes in two sizes for the first time and offers new AI features, for example for analyzing the user's running technique. The "Loss of Pulse Detection" feature detects when the wearer's pulse stops, as can happen during cardiac arrest, and alerts emergency services.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
