Nvidia's new cloud business competes with AWS

Nvidia is using its dominant role in AI hardware to gain a foothold in the cloud software business.

Not only does Nvidia produce what is arguably the most sought-after AI chip, the H100 GPU, but the company has also been offering its own cloud service, DGX Cloud, since March. With this, Nvidia is opening up a new business area: the sale of cloud software and direct contact with the business customers of its GPUs.

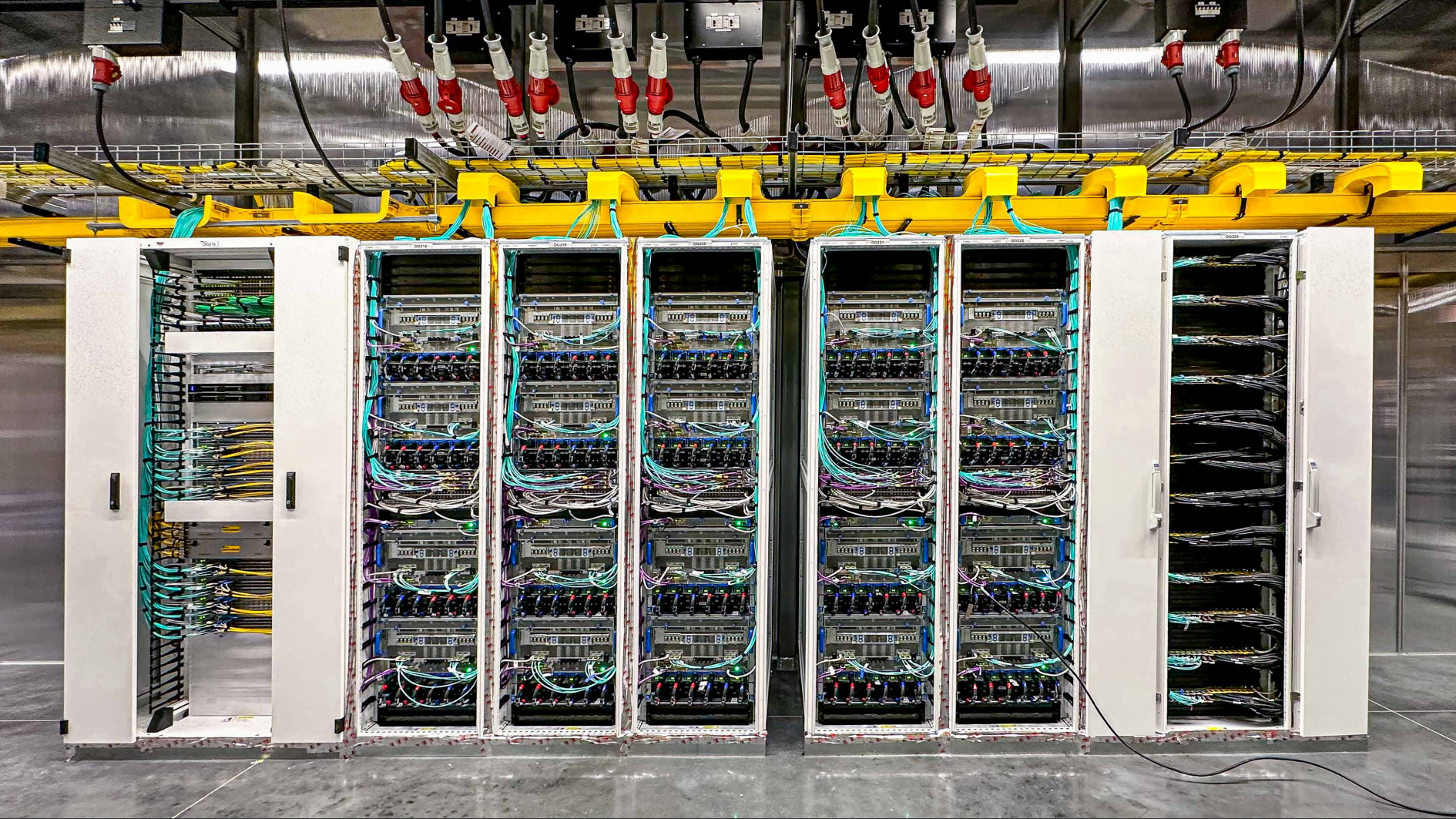

The servers for DGX Cloud are located at large cloud providers: According to The Information, Nvidia proposed to several providers last year that it rent Nvidia-equipped servers from them and then rent access to those servers directly to AI developers.

Amazon's AWS declined the offer, while Microsoft, Google, and Oracle agreed.

Nvidia gains direct customer access

Strictly speaking, the cloud providers don't lose any revenue from the deal - Nvidia pays for the servers and rents them out at a higher price. But the customers using Nvidia's DGX Cloud are customers who aren't buying AI services from AWS, Microsoft, Google, or Oracle.

“I can totally understand why Amazon is not participating because at the end of the day, it’s Nvidia that actually owns the customer relationship here,” said Stacy Rasgon, an analyst at Bernstein. The cloud providers involved in the deal are smaller than AWS, so they could theoretically gain market share from AWS through DGX Cloud, Rasgon said.

Nvidia is focused on providing the compute and software infrastructure that allows companies to train their own AI models - and then run them anywhere on DGX Cloud. This way, companies are not tied to a specific cloud provider, but only to Nvidia's hardware.

Customers of Nvidia's DGX Cloud service already include Adobe, Getty Images, and Shutterstock, which use Nvidia's service to train generative AI models, as well as some of the largest cloud service customers, including IT software giant ServiceNow, biopharmaceutical company Amgen, and insurance company CCC Intelligent Solutions. Last month, Nvidia CEO Jensen Huang told analysts that DGX Cloud has been "an enormous success."

Nvidia supports small cloud providers

The goal of DGX Cloud, according to Nvidia, is primarily to use its knowledge of its own hardware to show cloud providers how to configure GPU servers in their data centers. The providers could then roll out the optimal configurations at scale.

In this way, Nvidia benefits from DGX Cloud beyond direct customer contact: better performance makes its hardware more attractive and thus helps secure its dominance in the AI hardware market.

In addition, the company has sold many of its valuable H100 GPUs to smaller vendors such as CoreWeave, Lambda Labs, and Crusoe Energy to help them compete with the big players. From Nvidia's perspective, this move makes sense because AWS, Microsoft, and Google are developing their own AI chips to reduce their dependence on Nvidia.
