OpenAI DALL-E 2 Prompt Guide: How to use the generative AI model

Update –

- Added details about alternatives

- Prompt engineering tips added

OpenAI's DALL-E 2 shows impressive AI creativity, if you know how to control it. A short tour of DALL-E 2 in 2023.

OpenAI's DALL-E 2 pioneered generative AI models and was the first text-to-image offering on the market. A lot has happened since then: alternatives such as Midjourney have emerged, which usually produce better results with less complicated prompts and whose underlying models are improved regularly. With Stable Diffusion and Stable Diffusion XL, there are also open-source alternatives.

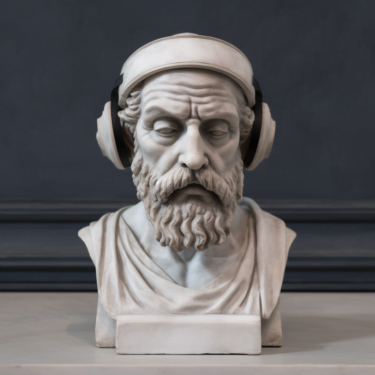

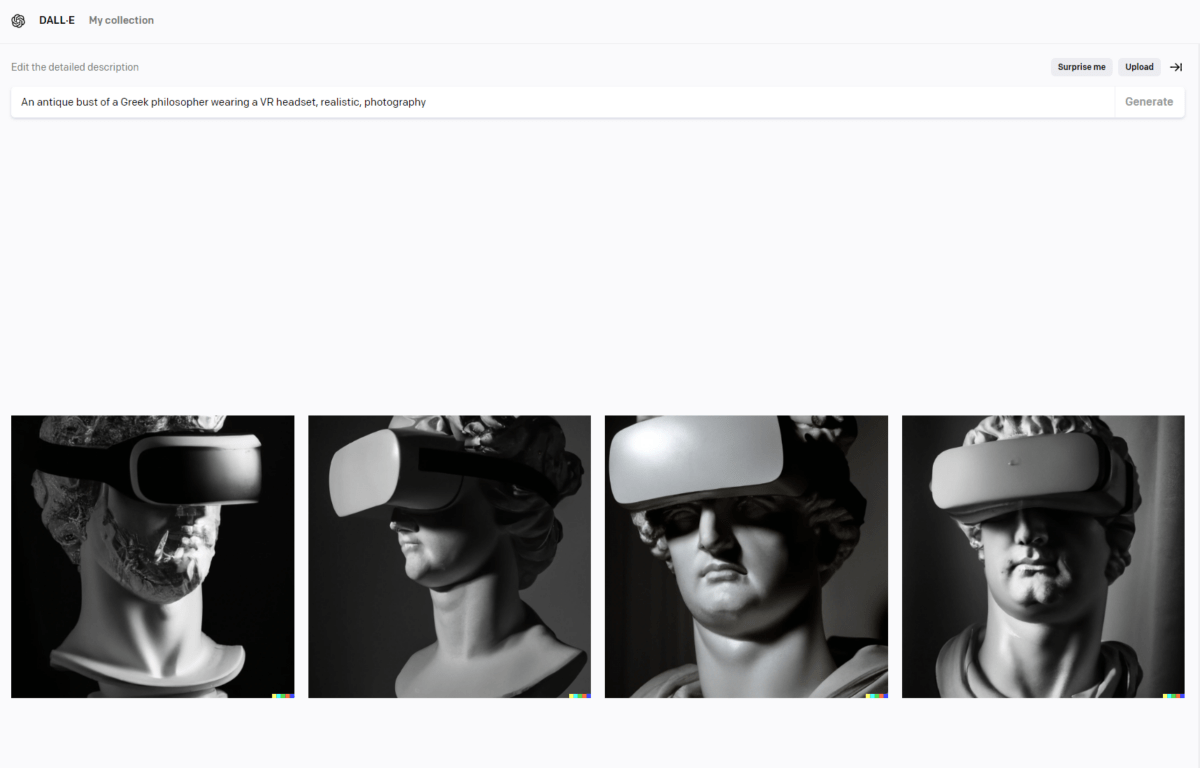

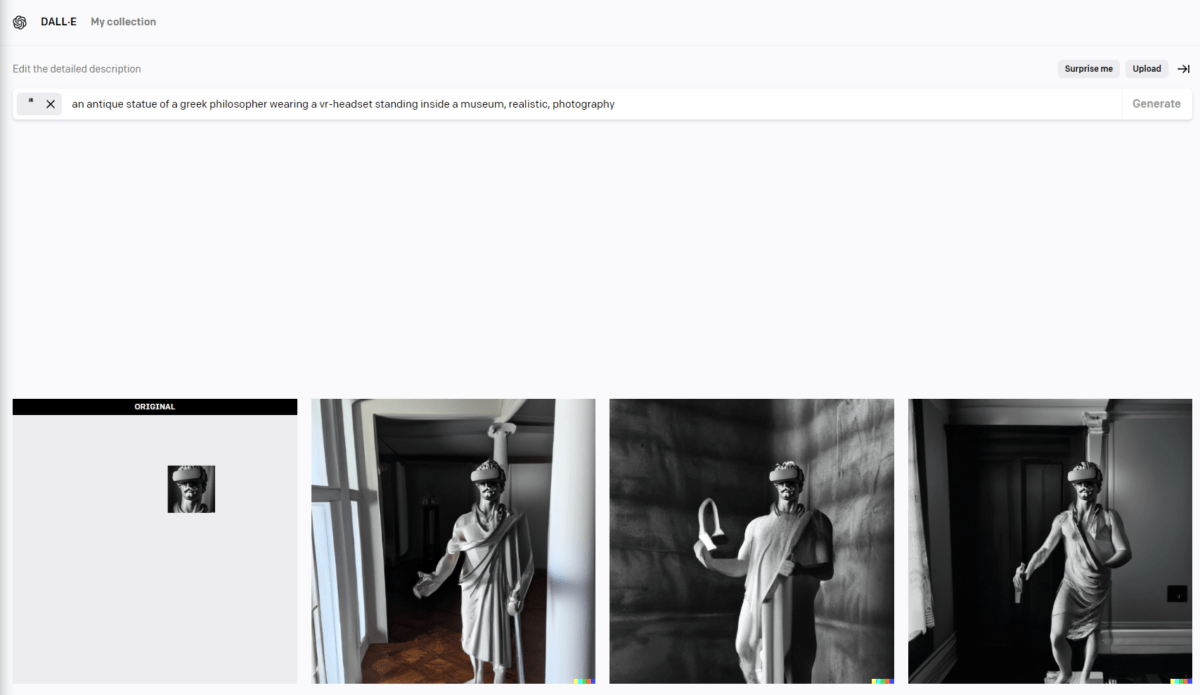

But with the right prompts and for special applications like inpainting, DALL-E can still make sense. An example: DALL-E converts my prompt "an antique bust of a Greek philosopher wearing a vr headset, realistic, photography, 2023" into a suitable, albeit low-resolution, image. Midjourney produces a much higher-resolution bust, but refuses to add the VR headset to it.

Below is a brief look at the functions of DALL-E 2 and the basics of prompt engineering.

OpenAI DALL-E 2 can create, edit or modify images

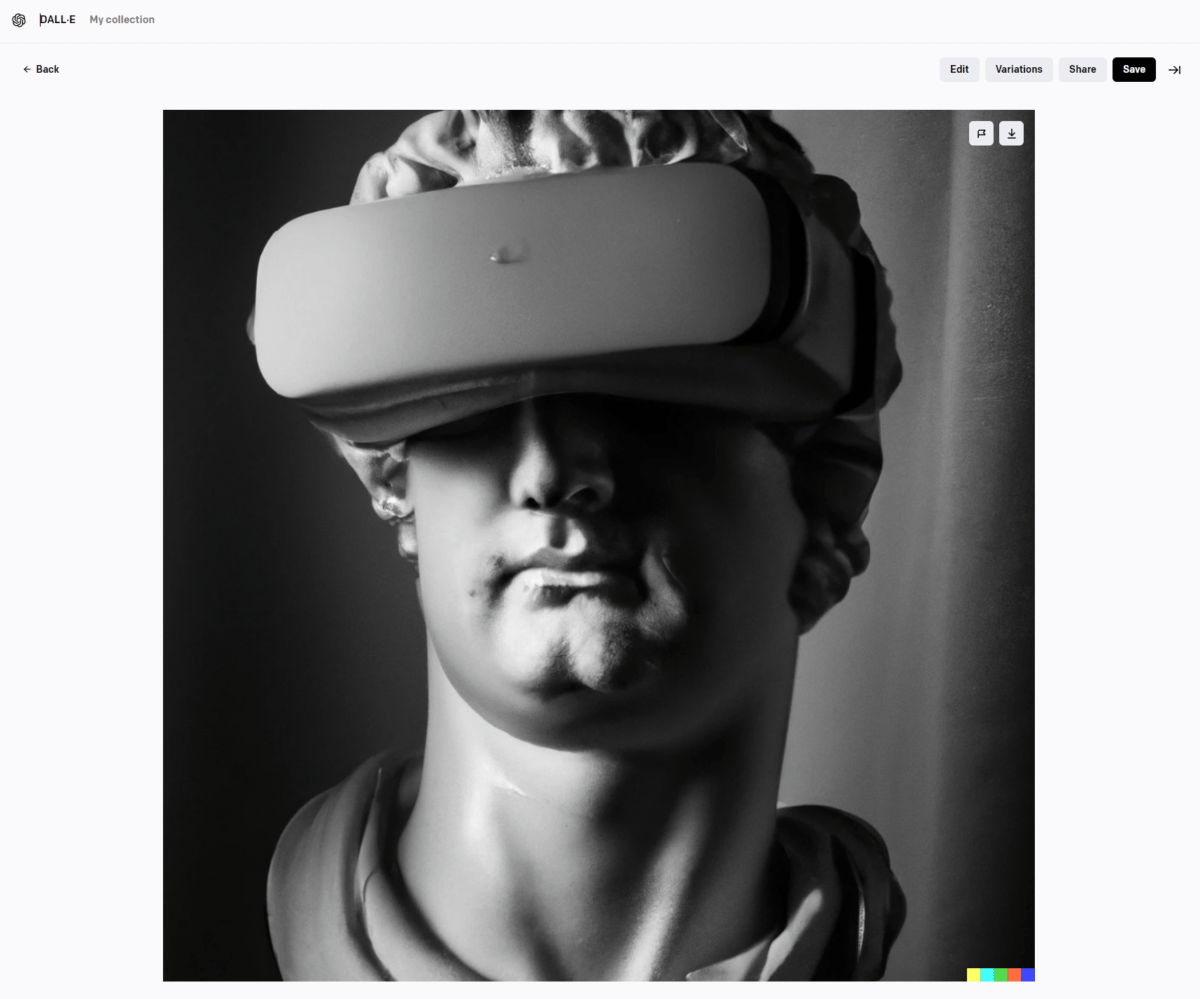

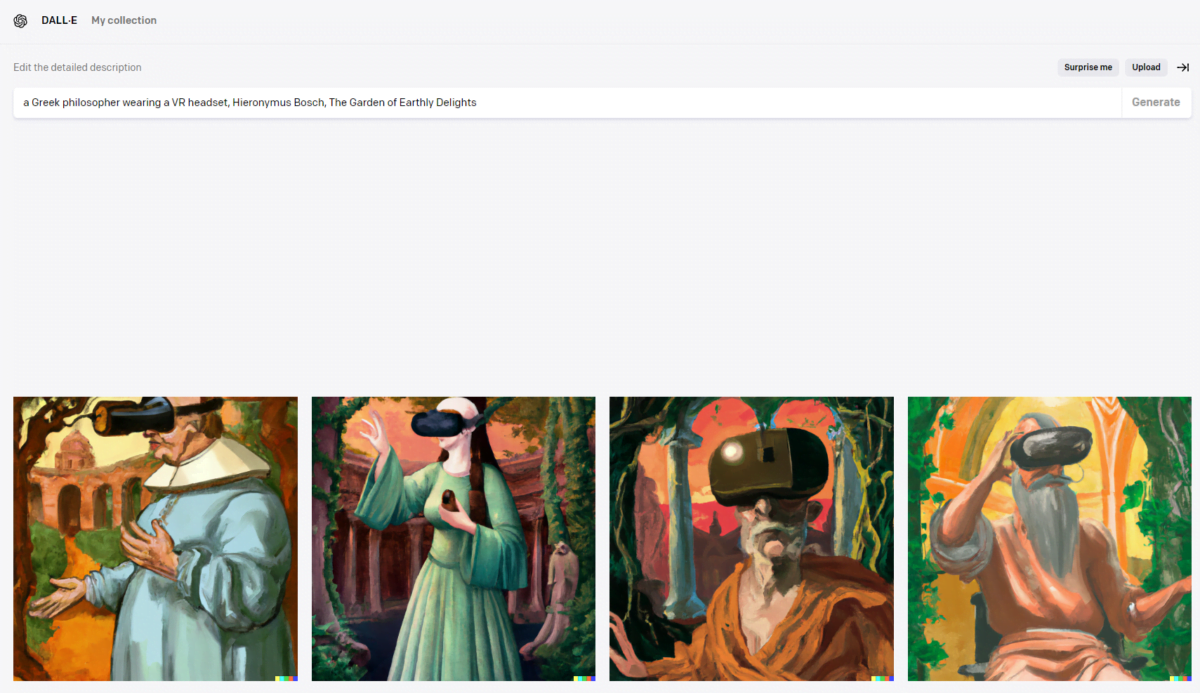

The user interface of DALL-E 2 is kept simple: you type a text description of the image you want, the so-called "prompt", into an input field and send it to the AI system by pressing "Generate". After a short wait, four generated images are displayed.

Below the input field, you can alternatively upload your own picture - as long as it does not show a real person. From uploaded and newly created images, DALL-E 2 can generate variants. This makes it relatively easy to create images inspired by existing subjects that can then be further edited. In this way, the AI system can be controlled even more precisely.

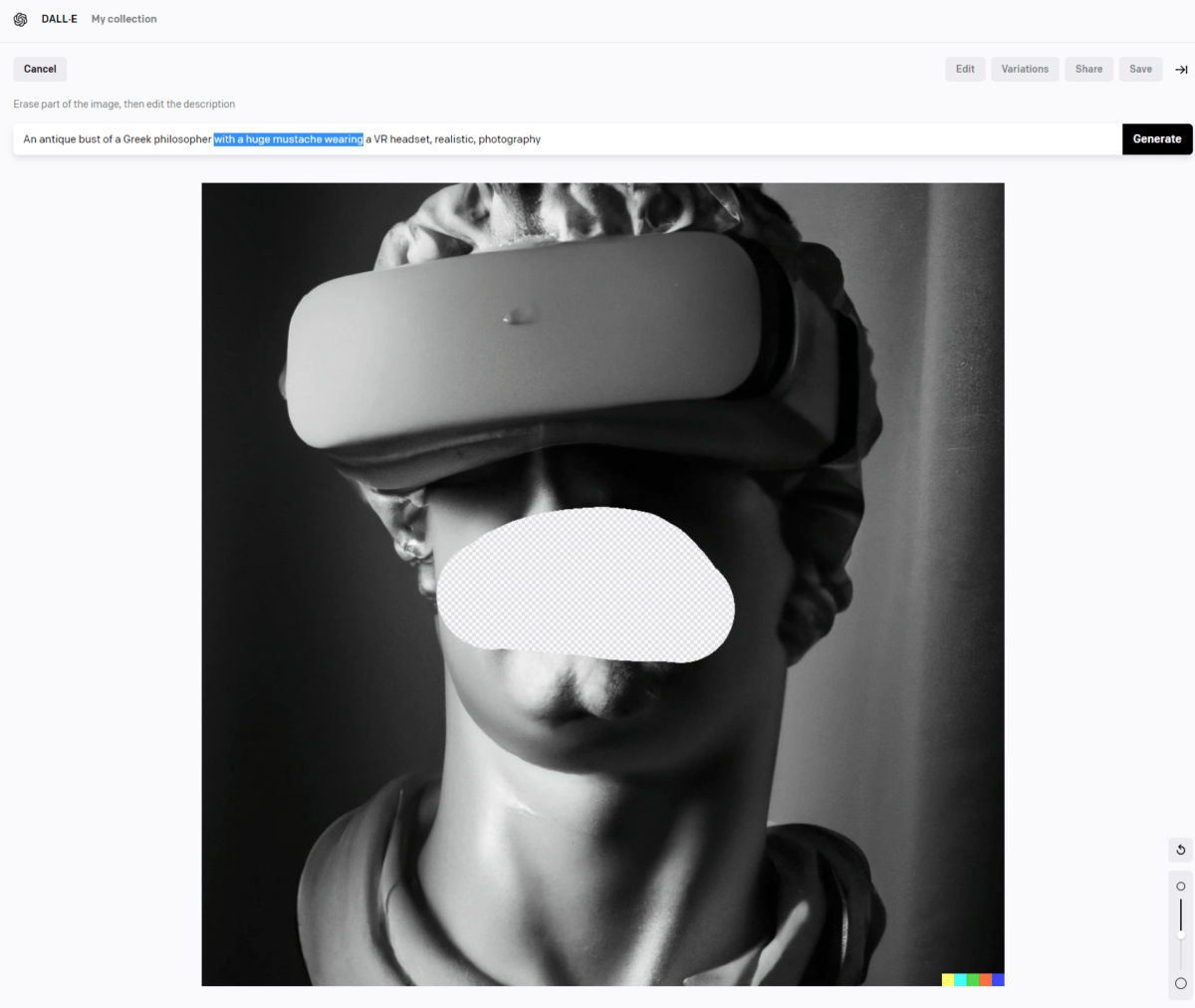

In addition, the edit function can be used to mark an area in the image, which DALL-E 2 then changes. To do this, you simply describe the desired result in a text prompt again.

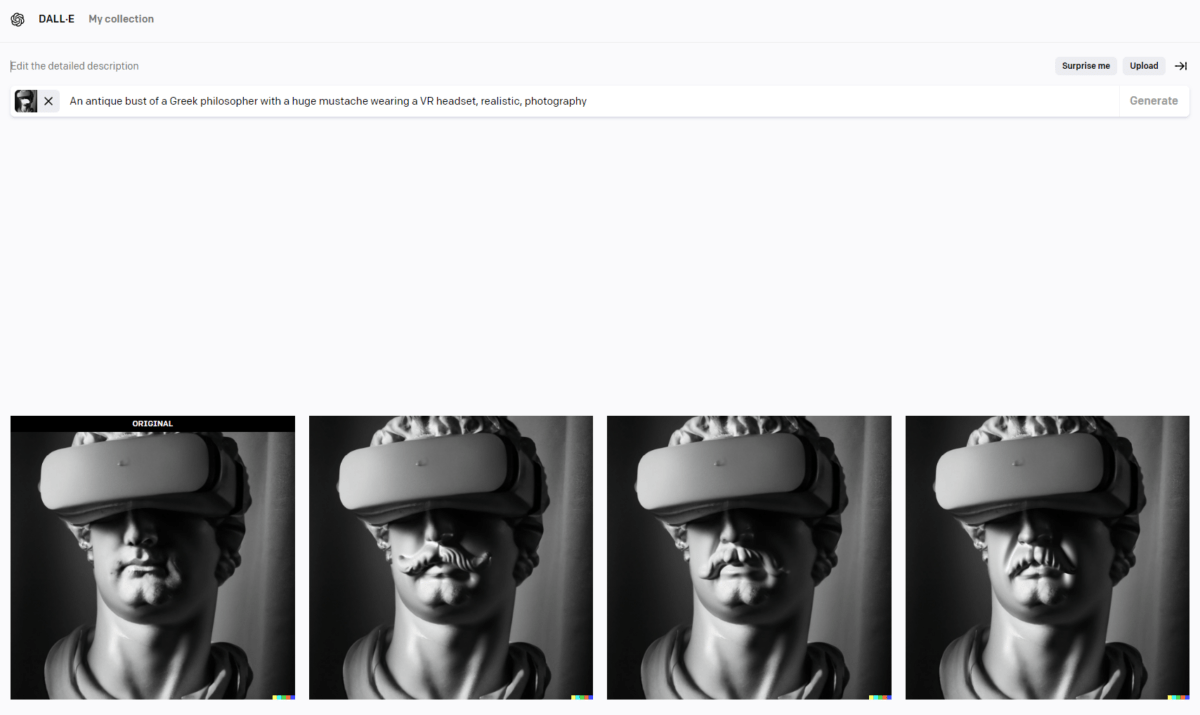

DALL-E 2 then generates three variants of the original containing the corresponding changes. Here I have added a fancy mustache to the statue.

OpenAI DALL-E 2 and prompt engineering

As is already clear from the example of the ancient bust of the Greek VR pioneer, DALL-E 2 can be controlled via text input. OpenAI has trained the AI system with over 650 million images - so DALL-E 2 has seen and can reproduce numerous subjects, styles, exposures, and other image properties.

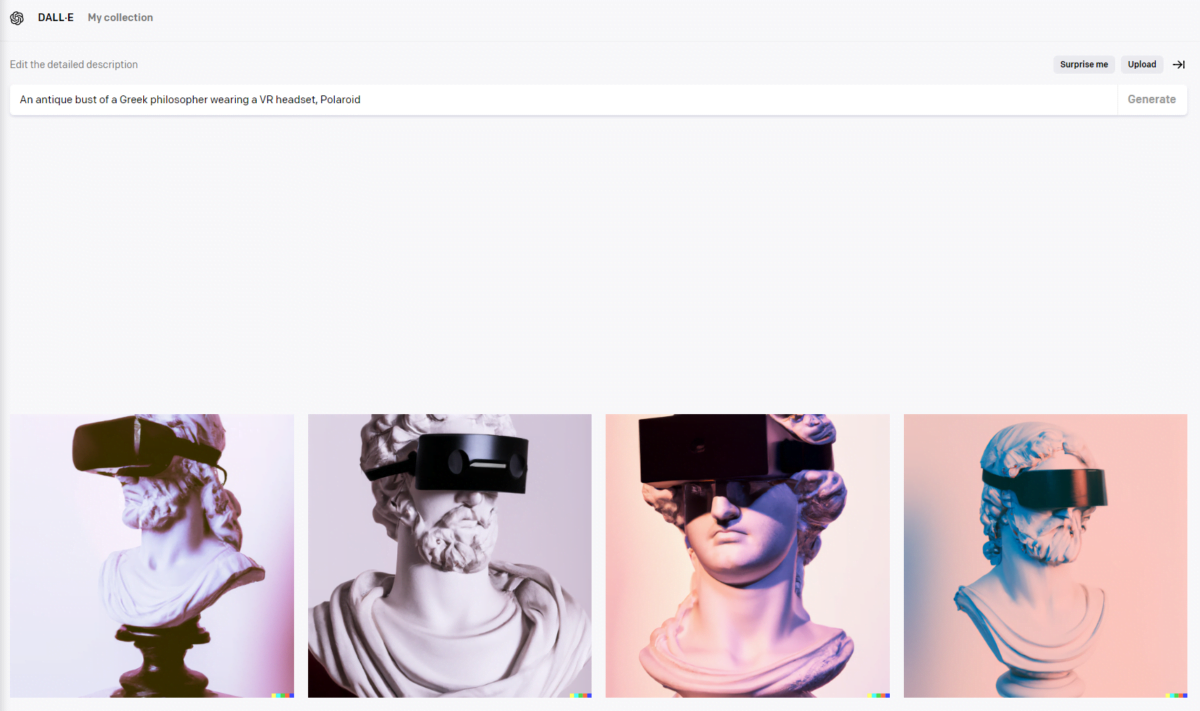

With so-called prompt engineering, the crafting of a suitable text description, you can get DALL-E 2 to generate, for example, photorealistic images with specific lens specifications that simulate short focal lengths or motion blur.

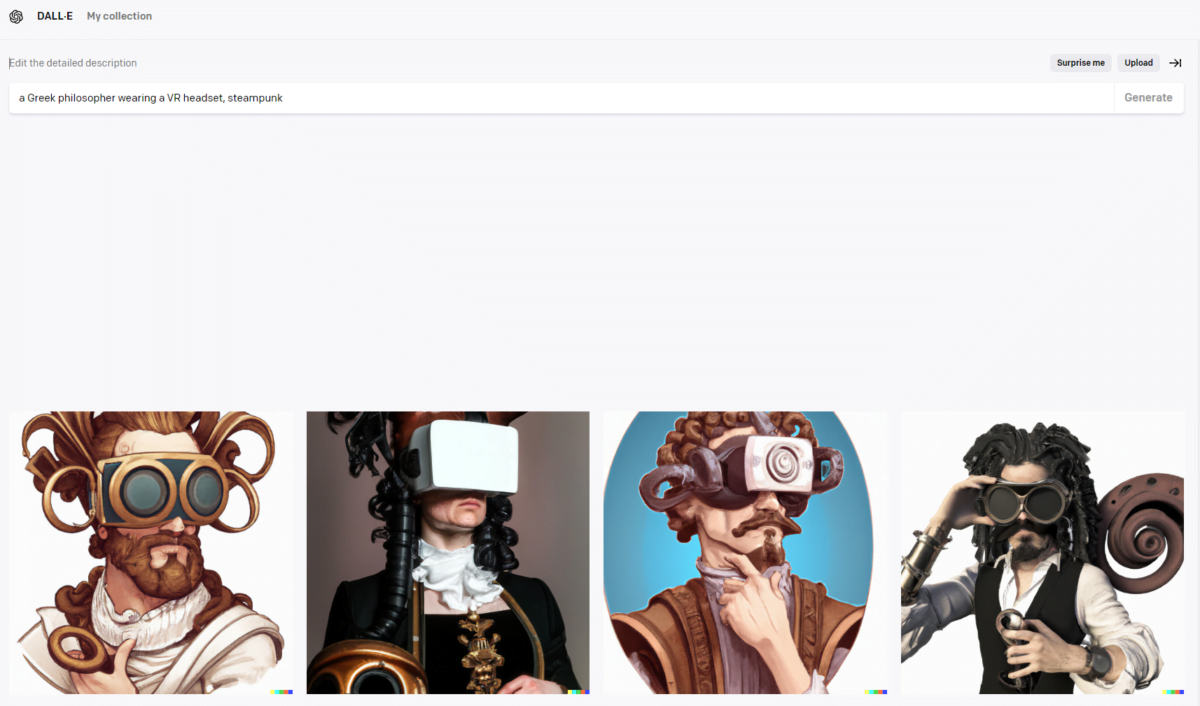

With the right descriptions, you can also capture moods, define structures or proportions, reproduce styles such as steampunk or cyberpunk, determine camera angles and exposure, or use the design of TV series or movies as a template.
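A prompt like the one used for the Greek bust can be thought of as a subject followed by a list of modifiers for style, camera, and mood. The following is a minimal sketch of that idea, not an official DALL-E 2 syntax: the helper name `build_prompt` is hypothetical, and the comma-separated layout is simply taken from the example prompt earlier in this article.

```python
def build_prompt(subject: str, *modifiers: str) -> str:
    """Join a subject and optional modifiers into one comma-separated prompt.

    Hypothetical helper: the comma convention mirrors the example prompt
    from the article and is an assumption, not a documented DALL-E 2 rule.
    """
    parts = [subject.strip()]
    # Skip modifiers that are empty or contain only whitespace.
    parts += [m.strip() for m in modifiers if m.strip()]
    return ", ".join(parts)


bust = build_prompt(
    "an antique bust of a Greek philosopher wearing a vr headset",
    "realistic",
    "photography",
    "2023",
)
print(bust)
```

Keeping the subject and the modifiers separate makes it easy to swap a single property, such as the lens or the lighting, while leaving the rest of the prompt unchanged.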

DALL-E 2 can imitate numerous illustration styles, as well as 3D art and historical paintings. This extends to broad artistic styles, individual artists, and even specific works.

If you want to capture the style of a particular work of art or artist, you can also enlist AI help: with so-called unbundling, you ask a model like ChatGPT or GPT-4 to describe the characteristics and style of a painting. The AI response can then be used for prompt engineering.

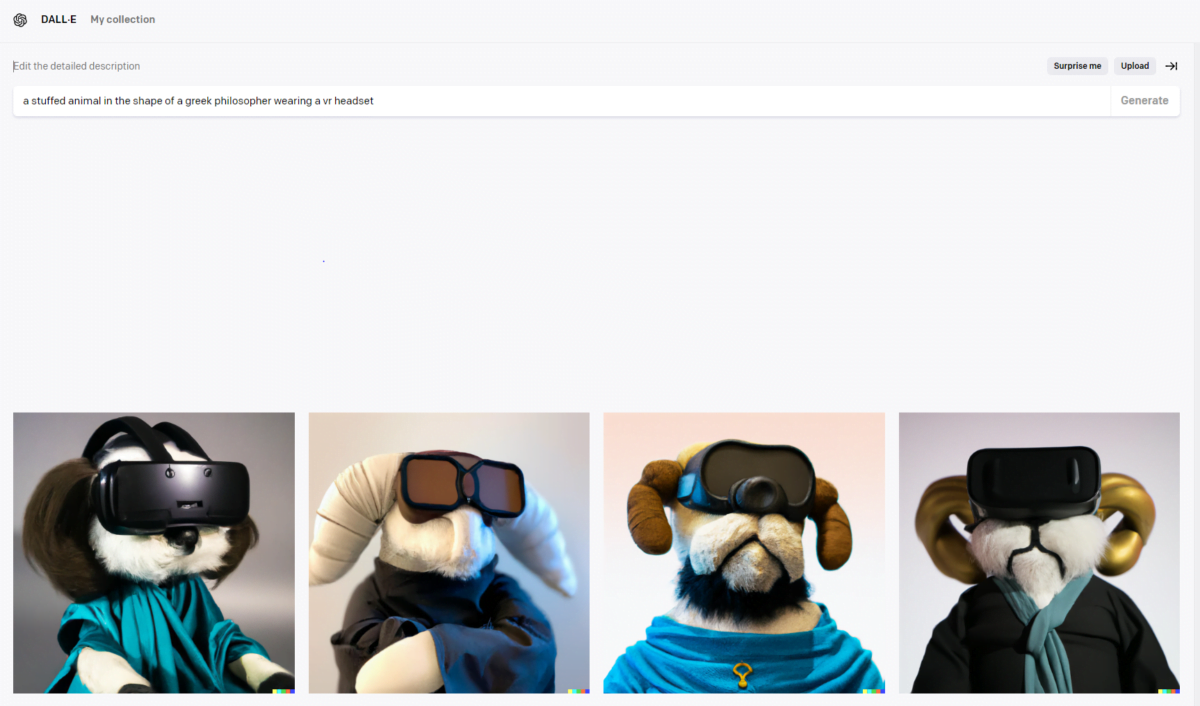

In addition to antique busts, DALL-E 2 can also create other objects - from embroidery to statues, bodies, stuffed animals, architecture, or designer chairs, it's all there.
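To explore how different subjects and styles interact, it can help to write out every combination before trying them one by one. The sketch below is only an illustration: the function name `prompt_grid` and the "<subject>, <style> style" wording are assumptions, and the subjects and styles are picked from the examples mentioned in this article.

```python
from itertools import product


def prompt_grid(subjects, styles):
    """Return one prompt per (subject, style) pair, in input order.

    The "<subject>, <style> style" phrasing is an assumption chosen for
    illustration; other wordings may work equally well or better.
    """
    return [f"{subject}, {style} style" for subject, style in product(subjects, styles)]


subjects = ["a designer chair", "a stuffed animal"]
styles = ["steampunk", "cyberpunk"]

prompts = prompt_grid(subjects, styles)
for prompt in prompts:
    print(prompt)
```

Each printed line can be pasted into the input field as its own prompt, which makes it easier to compare how a style changes a given subject.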

DALL-E 2: Six tips for prompt engineering

| Prompt aspects | Explanation |
|---|---|
| Precision | Use precise descriptions for the desired objects or scenes, e.g., "a white husky playing in a snowy forest." |
| Adjectives and adverbs | Add adjectives and adverbs to provide more detail, e.g., "a sparkling blue road bike on an empty path." |
| Creativity | Be creative with your prompts, e.g., "a dog made of clouds." |
| Compare | Use comparisons to make your ideas clearer, e.g., "a house whose color is as yellow as ripe bananas." |
| Context | Consider the context in which the images are used, e.g., pictures of colorful butterflies for a children's book. |
| Simplicity | Keep your prompts concise and focus on one or two key elements, e.g., the main character and the setting. |

DALL-E 2: External image editing and outpainting

With the editing function introduced above, details in the image can be changed, for example by adding a mustache, replacing objects, or swapping out the entire background.

Since the generated images can also be downloaded, an external image editing program can be used to get even more out of DALL-E 2. In the simplest version, our bust of the Greek philosopher can be reduced in size and used as the basis for a new image.

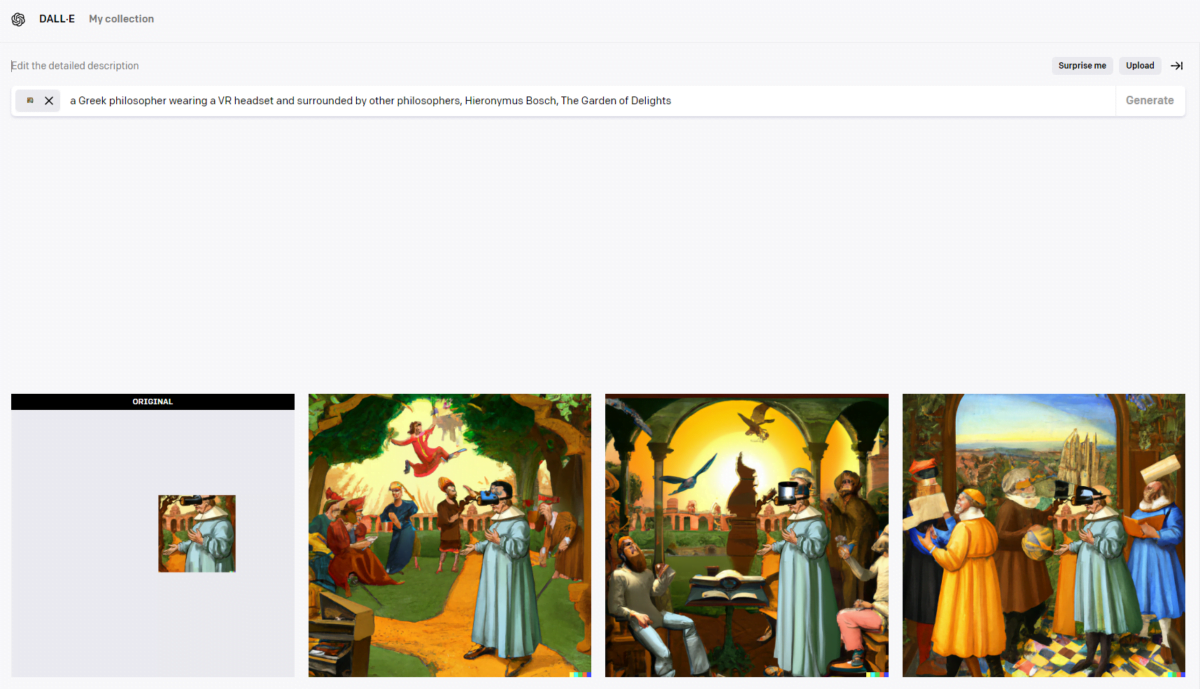

Paintings can be added using the same method. DALL-E 2 can give Mona Lisa a body, and our Greek VR philosopher gets company.

If you repeat this process often, you can zoom out further and further - some artists already create impressive journeys through DALL-E 2 worlds or giant murals.

Worlds Within Worlds #aiart #dalle2 #aianimation #animation #dalle #infinitezoom #loop #fantasy #scifi pic.twitter.com/LB8eo2GZof

- Michael Carychao (@MichaelCarychao) May 22, 2022

Inpainting with DALL-E 2 is super fun. With some ingenuity, you can create arbitrarily large artwork like the murals shown below - which I assume are the largest #dalle-produced images created so far. pic.twitter.com/DDQUMSmgYq

- David Schnurr (@_dschnurr) April 19, 2022
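The shrink-and-extend process described above comes down to simple geometry: the existing image is scaled down and centered on a canvas, and the empty border is the area DALL-E 2 is asked to fill. The sketch below only computes the numbers you would need in an external image editor. The function names, the square 1024-pixel canvas, and the scale factor of 0.5 are assumptions chosen for illustration.

```python
def centered_box(canvas: int, scale: float) -> tuple[int, int, int, int]:
    """Pixel box (left, top, right, bottom) for a square image that is
    shrunk by `scale` and centered on a square canvas of side `canvas`."""
    if not 0 < scale < 1:
        raise ValueError("scale must be between 0 and 1")
    side = round(canvas * scale)
    offset = (canvas - side) // 2
    return (offset, offset, offset + side, offset + side)


def original_share(scale: float, steps: int) -> float:
    """Fraction of the canvas width still covered by the first image
    after `steps` rounds of shrinking and extending."""
    return scale ** steps


# Assumed values: a 1024-pixel canvas and a shrink factor of 0.5.
box = centered_box(1024, 0.5)
print(box)                      # where to paste the shrunken image
print(original_share(0.5, 3))   # share of the width left after three rounds
```

Because the share shrinks geometrically with every round, a handful of repetitions is enough for the original image to become a small detail in a much larger scene.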

By combining external image processing, intelligent prompt engineering, and the editing function of DALL-E 2, many other applications are possible.

If you want to dig deeper, you should check out the DALL-E 2 Prompt Book by Guy Parsons. This gives a comprehensive overview of many of the prompt engineering tips discovered so far, and additional methods for getting the most out of DALL-E 2. Many of these tips can also be applied to Midjourney or Stable Diffusion.

Will there be a DALL-E 3? We don't know for sure yet, but OpenAI is already researching alternative architectures for generative AI models, such as consistency models.
