Tencent introduces open-source video generator HunyuanVideo and challenges Sora

Tencent has announced HunyuanVideo, a new open-source AI model for video generation that aims to match the capabilities of existing commercial solutions. With more than 13 billion parameters, it is, according to Tencent, the largest publicly available model of its kind.

According to Tencent's technical documentation, HunyuanVideo outperforms current systems such as Runway Gen-3 and Luma 1.6, as well as three leading Chinese video generation models. It shows particularly strong results in motion quality tests.

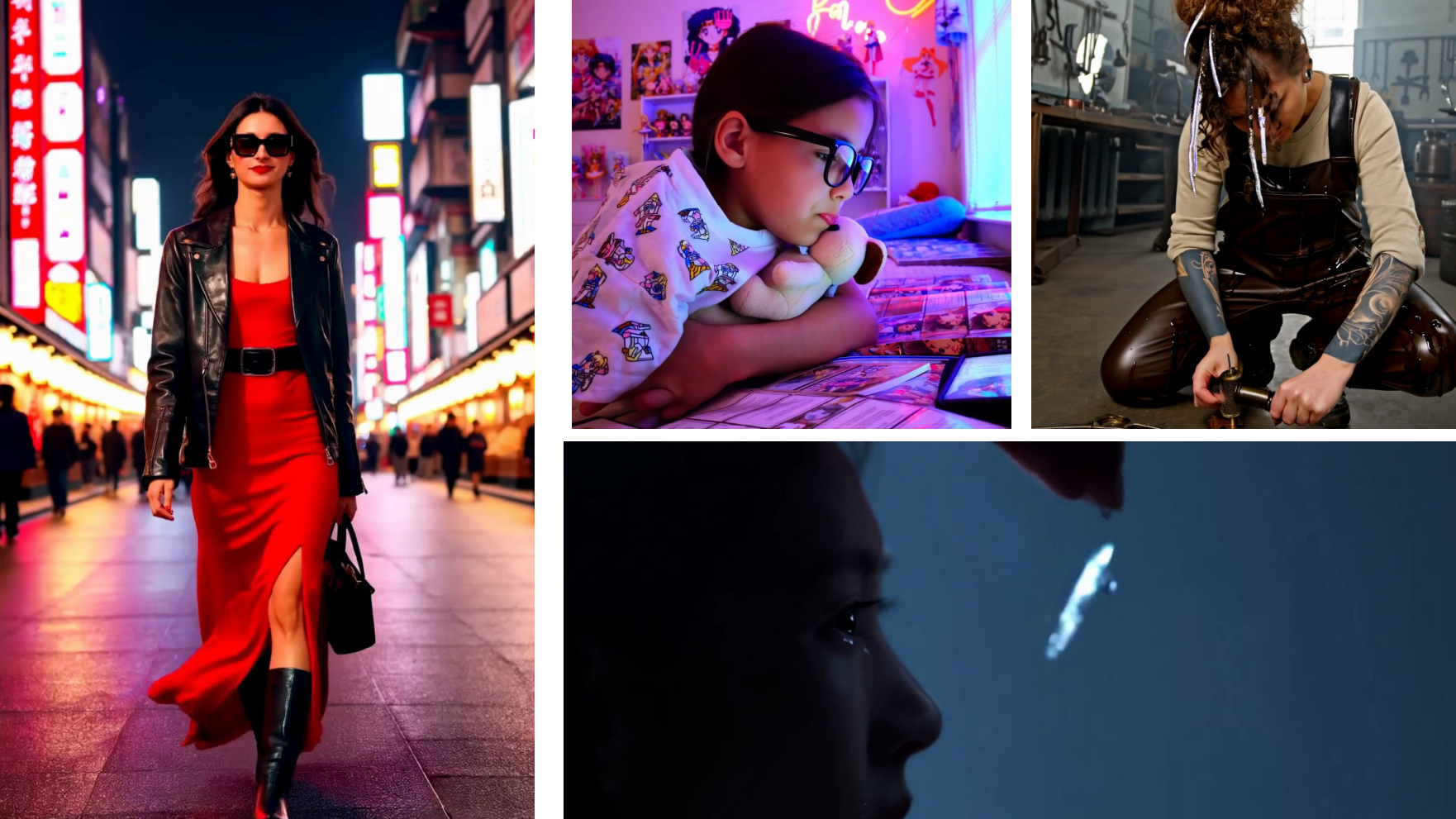

Video: Tencent

The model can handle multiple tasks, including generating videos from text descriptions, converting still images into videos, creating animated avatars, and producing audio for video content.

Tencent's engineers developed a multi-stage training process for HunyuanVideo. Training begins with low-resolution images at 256 pixels, then moves on to mixed-scale image training at higher resolutions.

In the final stage, the model is trained progressively on both videos and images, with resolution and video length increasing step by step. The development team reports that this approach improves convergence and yields higher-quality video output.
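To make the staged schedule more concrete, here is a minimal sketch of how such a curriculum might be described in code. It is not Tencent's implementation: the `Stage` class, the `build_curriculum` and `is_progressive` helpers, and every number except the 256-pixel starting resolution are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Stage:
    """One phase of a hypothetical HunyuanVideo-style training curriculum."""
    name: str
    resolutions: Tuple[int, ...]  # side lengths in pixels sampled during this stage
    max_frames: int               # 1 = still images only
    joint_image_video: bool       # True if image batches are mixed with video batches


def build_curriculum(video_steps: Sequence[Tuple[int, int]]) -> List[Stage]:
    """Build a staged schedule: 256-px images, then mixed-scale images, then
    joint image/video stages whose resolution and clip length grow step by step.

    `video_steps` is a list of (resolution, frames) pairs; all values besides
    the 256-px starting point are illustrative assumptions.
    """
    stages = [
        Stage("image-256", (256,), 1, False),
        # Mixed-scale image stage: the 512-px upper scale is an assumed value.
        Stage("image-mixed-scale", (256, 512), 1, False),
    ]
    for res, frames in video_steps:
        stages.append(Stage(f"video-{res}px-{frames}f", (res,), frames, True))
    return stages


def is_progressive(stages: Sequence[Stage]) -> bool:
    """Check that max resolution and clip length never shrink between stages."""
    for prev, cur in zip(stages, stages[1:]):
        if max(cur.resolutions) < max(prev.resolutions):
            return False
        if cur.max_frames < prev.max_frames:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical progressive video steps: (resolution, frames)
    schedule = build_curriculum([(512, 33), (720, 65), (720, 129)])
    for stage in schedule:
        print(stage.name, stage.resolutions, stage.max_frames)
    print("progressive:", is_progressive(schedule))
```

The `is_progressive` check captures the idea the article describes: once video training starts, resolution and clip length only grow, so the model moves from easier to harder inputs rather than the reverse.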

HunyuanVideo is open source

By releasing HunyuanVideo as open source, Tencent aims to narrow the gap between proprietary and open systems. The company has published the code on GitHub and plans to keep developing the model with new features.

The release puts Tencent in direct competition with established players such as Runway and OpenAI's Sora project, as well as several other Chinese companies developing video models, including Kuaishou with its Kling model.
