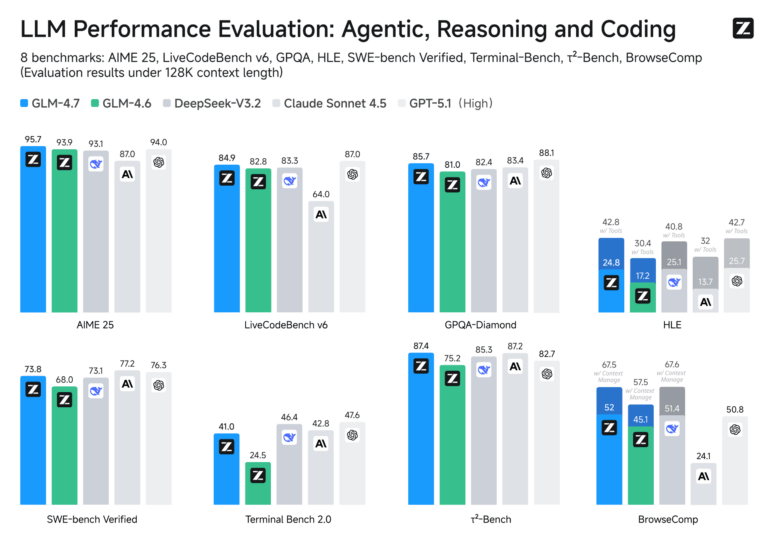

Zhipu AI has introduced GLM-4.7, a new model specialized in autonomous programming that uses "Preserved Thinking" to retain reasoning across long conversations. This capability works alongside the "Interleaved Thinking" feature introduced in GLM-4.5, which allows the system to pause and reflect before executing tasks. The model shows a significant performance jump over its predecessor, GLM-4.6, scoring 73.8 percent on the SWE-bench Verified test. Beyond writing code, Zhipu says GLM-4.7 excels at "vibe coding" - generating aesthetically pleasing websites and presentations. In a blog post, the company showcased several sites reportedly created from a single prompt. Benchmark comparisons show a tight race between GLM-4.7 and commercial Western models from providers like OpenAI and Anthropic. | Image: Zhipu AI
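To make the idea of retaining reasoning across turns more concrete, here is a minimal client-side sketch of the difference between discarding and preserving a model's thinking when the conversation history is sent back on the next turn. It is a conceptual illustration only, not Zhipu's implementation or API: the `Turn` class, the `reasoning` field, and `build_context` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Turn:
    role: str                        # "user" or "assistant"
    content: str                     # visible message text
    reasoning: Optional[str] = None  # hypothetical field holding the model's thinking


def build_context(history: list[Turn], preserve_thinking: bool) -> list[dict]:
    """Return the message list sent back to the model on the next turn.

    With preserve_thinking=False, earlier reasoning is dropped and only the
    visible content survives. With preserve_thinking=True, each assistant
    turn keeps its reasoning, so later turns can build on it.
    """
    messages = []
    for turn in history:
        msg = {"role": turn.role, "content": turn.content}
        if preserve_thinking and turn.reasoning is not None:
            msg["reasoning"] = turn.reasoning
        messages.append(msg)
    return messages
```

For example, `build_context([Turn("user", "Fix the test"), Turn("assistant", "Patched.", reasoning="The loop bound was off by one.")], preserve_thinking=True)` keeps the assistant's reasoning in the second message, while passing `preserve_thinking=False` returns only the visible text.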

The model is available through the Z.ai platform and OpenRouter, and its weights can be downloaded from Hugging Face to run locally. It also plugs directly into coding agents such as Claude Code. Z.ai is positioning the release as a cost-effective alternative, claiming it costs one-seventh as much as comparable models.
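As a rough sketch of API access, the snippet below builds a request to OpenRouter's OpenAI-compatible chat completions endpoint using only the Python standard library. The model slug `z-ai/glm-4.7` is an assumption and should be checked against the model's OpenRouter page; `build_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "z-ai/glm-4.7"  # assumed OpenRouter slug; verify on the model page


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for GLM-4.7."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With an API key in hand, `send(build_request("Build a one-page portfolio site in HTML.", key))` would return the model's reply. Keeping request construction separate from sending makes the payload easy to inspect before any network call is made.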