Google DeepMind unveils its most advanced AI image generator, Imagen 2

DeepMind's new Imagen 2 image generator promises perfect hands, faces and more, down to the smallest detail.

Google DeepMind has unveiled its latest AI image generator, Imagen 2, which is based on the widely used diffusion approach. Google says Imagen 2 produces the highest-quality, most photorealistic images of any of its models to date, while closely following user prompts.

Imagen 2 can follow prompts more accurately

Google DeepMind improved Imagen 2's understanding of prompts by adding more detailed descriptions to the image captions in its training dataset. This exposes Imagen 2 to different captioning styles and helps it handle a wider variety of prompts.

Better-aligned image-text pairs should help the model pick up on context and nuance in prompts. OpenAI used a similar method to improve prompt following in DALL-E 3.
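Google has not published how it combines the original and the enriched captions during training. As a rough illustration only, a pipeline could pick one caption style per training example at random, so the model sees both terse and detailed descriptions of the same image. The `Example` record, its field names, and the `p_detailed` mixing ratio below are hypothetical and not taken from Google.

```python
import random
from dataclasses import dataclass


@dataclass
class Example:
    image_path: str
    alt_text: str          # original caption, often short web alt text
    detailed_caption: str  # hypothetical richer description added to the dataset


def pick_caption(ex: Example, rng: random.Random, p_detailed: float = 0.5) -> str:
    """Choose which caption style to pair with the image for one training step."""
    if not 0.0 <= p_detailed <= 1.0:
        raise ValueError("p_detailed must be between 0 and 1")
    # rng.random() is in [0, 1), so p_detailed=1.0 always picks the detailed caption
    # and p_detailed=0.0 always picks the original one.
    return ex.detailed_caption if rng.random() < p_detailed else ex.alt_text


if __name__ == "__main__":
    ex = Example(
        image_path="cat.png",
        alt_text="cat on sofa",
        detailed_caption="A ginger cat curled up on a grey linen sofa in soft morning light",
    )
    rng = random.Random(42)
    for _ in range(3):
        print(pick_caption(ex, rng))
```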

Google says improvements to both the dataset and the model let Imagen 2 do better in many areas where text-to-image systems often struggle, such as realistic human hands and faces, and that it has largely eliminated typical AI image artifacts.

To improve image quality, Google developed an aesthetic model trained on human preferences for qualities such as good lighting, composition, exposure, and sharpness. Each training image received an aesthetic score, which Imagen 2 used to give more weight to images that matched those preferences.
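Google has not disclosed exactly how the aesthetic scores enter training. One common way to "give more weight" to preferred images is score-weighted sampling, sketched below in Python as an assumption rather than Google's method: scores are turned into probabilities with a softmax, and training batches are drawn in proportion to them. The function names, the `temperature` parameter, and the example scores are all hypothetical.

```python
import math
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sampling_weights(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Convert per-image aesthetic scores into sampling probabilities (softmax)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not scores:
        return []
    top = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_batch(
    images: Sequence[T],
    scores: Sequence[float],
    batch_size: int,
    temperature: float = 1.0,
    seed: int = 0,
) -> List[T]:
    """Draw a training batch with replacement, favoring higher-scoring images."""
    if len(images) != len(scores):
        raise ValueError("images and scores must have the same length")
    rng = random.Random(seed)
    weights = sampling_weights(scores, temperature)
    return rng.choices(images, weights=weights, k=batch_size)


if __name__ == "__main__":
    images = ["well_lit_portrait.png", "blurry_snapshot.png", "sharp_landscape.png"]
    scores = [6.5, 2.0, 7.0]  # hypothetical aesthetic scores
    print([round(w, 3) for w in sampling_weights(scores)])
    print(sample_batch(images, scores, batch_size=8))
```

Lowering `temperature` concentrates sampling on the top-scoring images, while raising it moves toward uniform sampling. An alternative with the same intent is to sample uniformly and scale each example's training loss by its weight.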

Imagen 2 comes with inpainting, outpainting and flexible style control

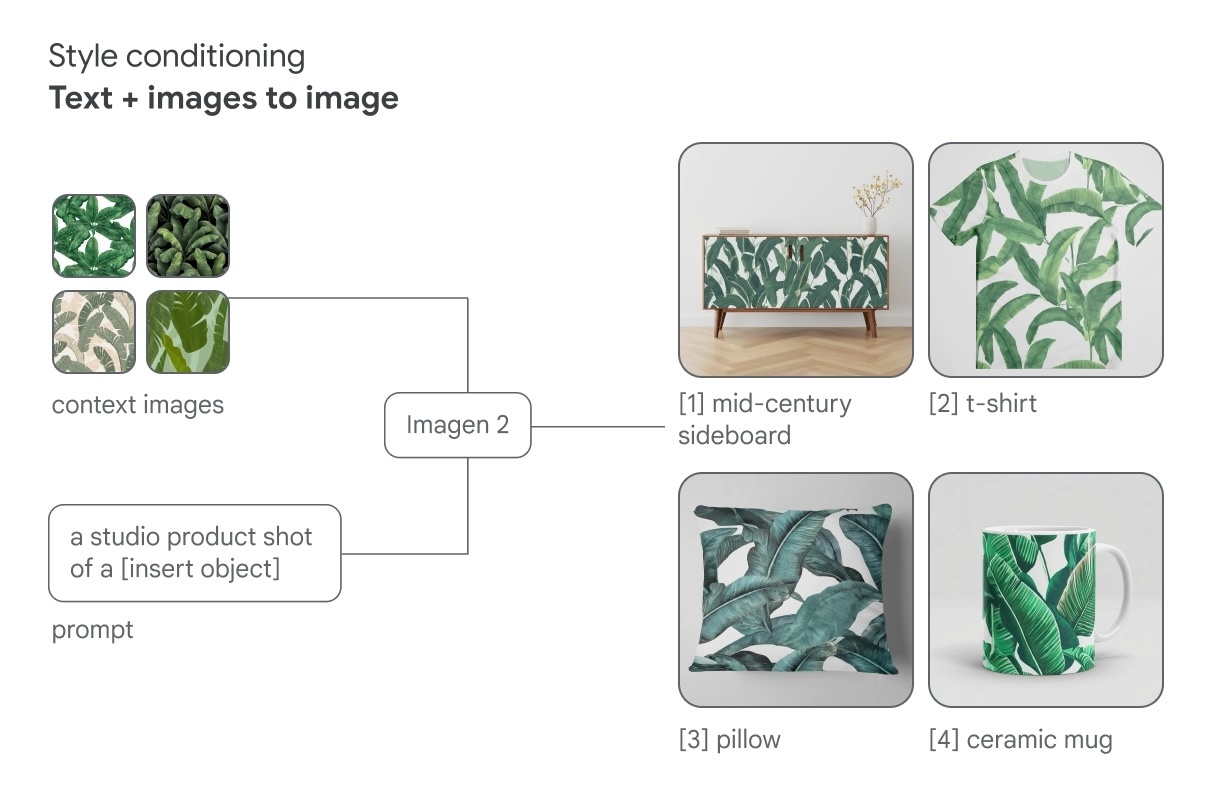

According to Google, Imagen 2's diffusion technology offers a high degree of flexibility, making it easier to control and customize the style of an image, for example by using reference images in addition to text.

Imagen 2 also supports image editing features such as inpainting and outpainting out of the box. Inpainting lets users add new content directly into an existing image, while outpainting extends the image beyond its original borders. Such features are essential to keep up with Adobe Firefly's Generative Fill and Midjourney's Zoom Out.

Imagen 2 is initially available to developers and Google Cloud customers through the Imagen API in Vertex AI. The Google Arts & Culture team is using the technology in its Cultural Icons experiment.

Imagen 2 is not yet safe enough for consumers

Consumers should get access to Imagen 2 eventually, but Google first wants to minimize the potential risks. The company says it built in safety measures from design through deployment but wants to do more testing.

One of these safety measures is SynthID, a toolkit for watermarking and identifying AI-generated content. It lets authorized Google Cloud customers embed an invisible digital watermark directly into an image's pixels without affecting image quality. According to Google, the watermark remains detectable even if the image is filtered, cropped, or saved with lossy compression.

Google also says it has implemented technical safeguards to limit problematic output such as violent, offensive, or sexually explicit content. Safety checks were applied to the training data as well as to user prompts and the images the system generates.

Imagen 2 is a response to OpenAI's latest image model, DALL-E 3, which stands out not only for its image quality but also for being easily accessible through ChatGPT. Google has not yet announced how it plans to bring Imagen 2 to a wider audience, but integration with Bard seems a logical step.

The new version succeeds the first generation of Imagen, which was announced in May 2022. At the time, Google held a slight technological lead over OpenAI, a gap its rival has long since closed.

Imagen 2 could also pave the way for another Google video model, just as Imagen served as the basis for Imagen Video and Meta built Emu Video on its Emu image generator.

- Get the latest AI news from The Decoder.