Meta has detailed how it is preparing for the election manipulation it expects from generative AI during this year's EU elections. But even with the company's best efforts, gaps in content moderation are likely to remain, and fake content is likely to go viral on Facebook, Instagram, Threads, and WhatsApp.

In a detailed blog post, Meta outlines its approach to maintaining election integrity, which draws on experience from more than 200 elections around the world since 2016. The company has invested more than $20 billion in security measures and has a team of 40,000 people working in this area, including 15,000 content reviewers who together cover more than 70 languages, including all 24 official EU languages.

The U.S. company works with 26 fact-checking organizations across the EU and is expanding its program to include three additional partners in Bulgaria, France, and Slovakia. Meta's strategy has three main focuses: fighting misinformation, curbing targeted influence by outsiders, and addressing the misuse of generative AI technologies.

However, with increasingly photorealistic images from AI generators such as the latest version of Midjourney, even trained personnel may find it difficult to reliably identify AI content as such. To minimize this risk, Meta is building on standards such as C2PA and IPTC to tag AI images not only from its own image generator but also from third-party providers such as OpenAI's DALL-E 3, Midjourney, Adobe Firefly, Google Imagen, and Shutterstock.
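To illustrate what metadata-based tagging can look like in practice, here is a minimal Python sketch. It is not Meta's detection pipeline, and the function name `has_ai_source_type` is hypothetical. It assumes the image carries an embedded XMP packet and that the generator wrote the IPTC Digital Source Type term for AI-generated media (`trainedAlgorithmicMedia`) into it. It does not verify C2PA signatures.

```python
# Hypothetical sketch: a naive check for an IPTC "AI-generated" marker.
# Assumption: the file embeds an XMP packet wrapped in <x:xmpmeta> ... </x:xmpmeta>.

# IPTC Digital Source Type term for media created by a generative model.
AI_SOURCE_TYPE = b"digitalsourcetype/trainedAlgorithmicMedia"


def has_ai_source_type(data: bytes) -> bool:
    """Return True if an embedded XMP packet references the IPTC AI source type."""
    start = data.find(b"<x:xmpmeta")
    if start == -1:
        return False  # no XMP packet found
    end = data.find(b"</x:xmpmeta>", start)
    if end == -1:
        return False  # truncated or malformed packet
    return AI_SOURCE_TYPE in data[start:end]


if __name__ == "__main__":
    # Synthetic bytes standing in for an image file with embedded metadata.
    tagged = (
        b"\xff\xd8 fake image bytes "
        b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
        b"<Iptc4xmpExt:DigitalSourceType>"
        b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
        b"</Iptc4xmpExt:DigitalSourceType>"
        b"</x:xmpmeta>"
    )
    untagged = b"\xff\xd8 fake image bytes without metadata"
    print(has_ai_source_type(tagged))
    print(has_ai_source_type(untagged))
```

The sketch scans raw bytes instead of parsing the XMP as XML to stay dependency-free. Such a check only works while the metadata is intact: re-encoding or screenshotting an image typically removes it.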

These watermarks are not yet considered very reliable. If human reviewers identify an image as AI-generated, they can assign it to the "Altered" category, which lowers the post's ranking. Posts with disproven false information are also prohibited. There have already been several cases of less photorealistic AI images being misused for propaganda purposes.

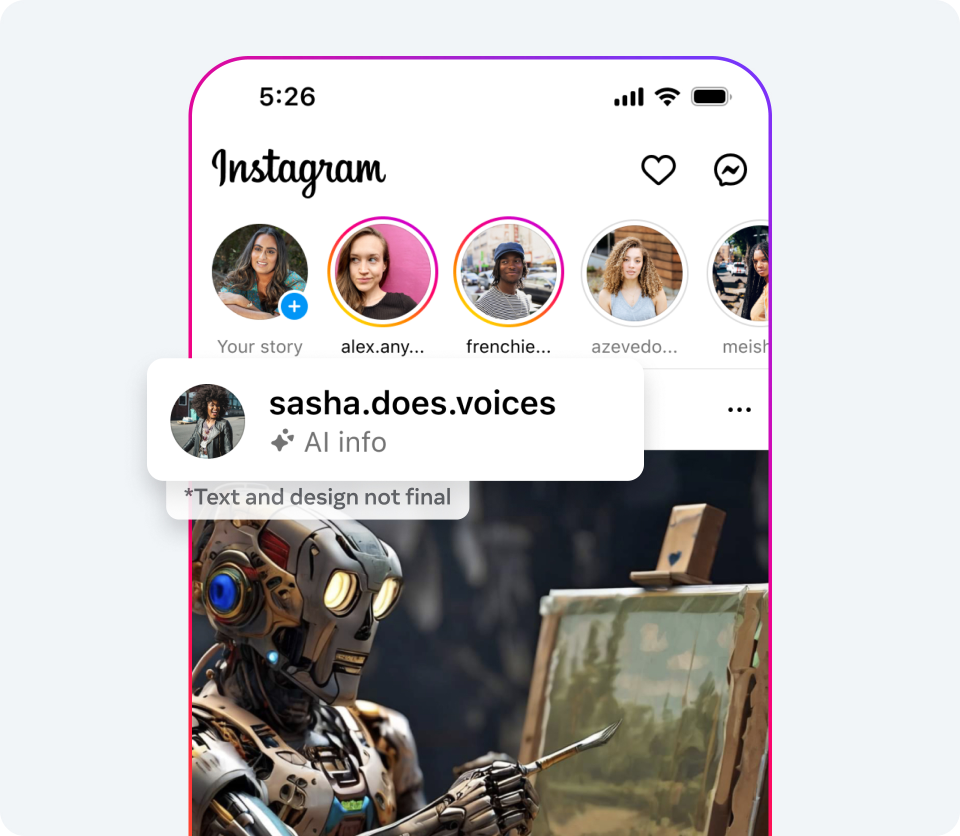

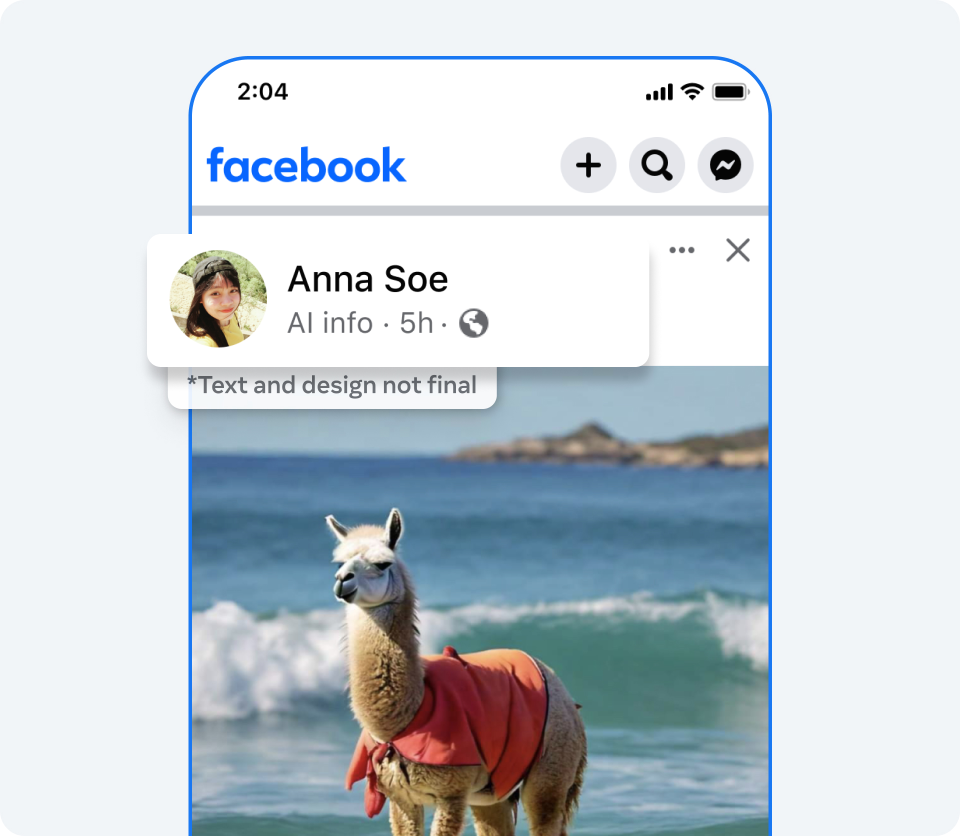

Meta wants to punish users who do not label AI content

In addition to giving users the option to voluntarily label their content as AI-generated, Meta is considering penalties for those who fail to do so. TikTok introduced a similar labeling feature a few months ago, although it is not known whether it will penalize users who fail to label AI-generated content.

"If we determine that digitally created or altered image, video or audio content creates a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label, so people have more information and context," the company added.

Fighting AI fake news remains a group effort

Meta also points to alliances such as the "Tech Accord to Combat Deceptive Use of AI in the 2024 Elections," in which social networks such as Meta, TikTok, and X, as well as other key industry players such as OpenAI, Google, and Amazon have pledged to work together to combat misinformation related to this year's elections. In addition to the EU elections, the U.S. presidential election is also playing a major role in global politics this year.

An open letter from leading AI researchers calling on governments to legally require companies to take countermeasures shows how acute the threat of deepfakes is. And a recent study found that humans cannot distinguish between human-generated and AI-generated fake news.