Microsoft's mini LLM Phi-2 is now open source and allegedly better than Google Gemini Nano

Update:

- Added information about MIT license

- New benchmarks added

Update from January 6, 2024:

Microsoft is releasing Phi-2 under the MIT Open-Source License. The MIT license is a permissive license that permits commercial use, distribution, modification, and private use of the licensed software. The only condition is that you retain the copyright and license notices.

The license also grants permission to use, copy, modify, merge, publish, distribute, sublicense and/or sell copies of the software. However, the software, in this case the AI model, is provided "as is" without any warranty or liability on the part of the authors or copyright holders.

Update from December 12, 2023:

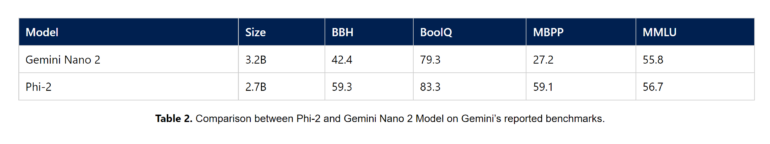

Phi-2 is Microsoft's smallest language model. New benchmarks from the company show it beating Google's Gemini Nano.

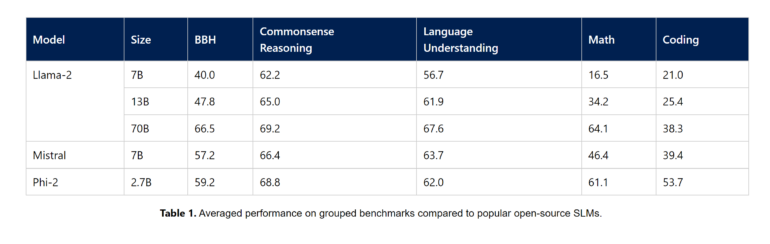

Microsoft has released more details on Phi-2, including detailed benchmarks comparing the 2.7 billion parameter model with Llama-2, Mistral 7B and Google's Gemini Nano.

Compared with Gemini Nano, the smallest language model in Google's Gemini family, Phi-2 performs better in all benchmarks presented by Microsoft.

Microsoft has also carried out extensive tests with frequently used prompts. The conclusion: "We observed a behavior in accordance with the expectation we had given the benchmark results."

Gemini Nano is to be used on end devices such as the Pixel 8.

Original article from November 16, 2023:

In June 2023, Microsoft researchers presented Phi-1, a transformer-based language model optimized for code with only 1.3 billion parameters. The model was trained exclusively on high-quality data and outperformed models up to ten times larger in benchmarks.

Phi-1.5 followed a few months later, also with 1.3 billion parameters and trained on additional data consisting of various AI-generated texts. Phi-1.5 can compose poems, write emails and stories, and summarize texts. One variant can also analyze images. In benchmarks on common sense, language comprehension, and reasoning, the model was in some areas able to keep up with models with up to 10 billion parameters.

Microsoft has now announced Phi-2, which at 2.7 billion parameters is roughly twice as large as Phi-1.5 but still tiny compared to other language models. Compared to Phi-1.5, the model shows dramatic improvements in logical reasoning and safety, according to the company. With the right fine-tuning and customization, the small language model is a powerful tool for cloud and edge applications, the company said.
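To put the parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy. The parameter counts are the ones quoted in this article (with Llama-2-7B taken at its nominal 7 billion). The precisions and the "parameters times bytes per parameter" rule are illustrative assumptions, and the estimate ignores activations, caches and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are taken from the article; precisions are illustrative assumptions.
PARAMS = {"Phi-1.5": 1.3e9, "Phi-2": 2.7e9, "Llama-2-7B": 7e9}
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int4": 0.5}


def weight_gb(n_params: float, precision: str) -> float:
    """Return the approximate weight size in gigabytes (10^9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


for name, n in PARAMS.items():
    sizes = ", ".join(f"{p}: {weight_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name}: {sizes}")

# Size ratio behind the "twice as large" comparison.
print(f"Phi-2 / Phi-1.5 size ratio: {PARAMS['Phi-2'] / PARAMS['Phi-1.5']:.2f}")
```

Under these assumptions, Phi-2's weights would need around 5.4 GB at 16-bit precision, which helps explain why models of this size are discussed for edge devices as well as the cloud.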

Microsoft's Phi-2 shows improvements in math and coding

The company has not yet published any further details about the model. However, Sebastien Bubeck, head of the Machine Learning Foundations Group at Microsoft Research, published a screenshot on Twitter of the "MT-Bench" benchmark, which attempts to test the real capabilities of large and small language models by having powerful language models such as GPT-4 evaluate their answers.
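The general idea of scoring one model's answers with another model can be sketched as follows. This is a simplified illustration, not the MT-Bench implementation: `judged_score`, `toy_judge` and the sample questions are hypothetical, and the toy judge merely stands in for a call to a strong model such as GPT-4 that would return a score from 1 to 10.

```python
from statistics import mean
from typing import Callable

# Hypothetical judge interface: (question, answer) -> score from 1 to 10.
Judge = Callable[[str, str], float]


def judged_score(answers: dict[str, str], judge: Judge) -> float:
    """Average the judge's per-question scores for one model."""
    return mean(judge(question, answer) for question, answer in answers.items())


def toy_judge(question: str, answer: str) -> float:
    # Stand-in for a strong judge model; it scores by answer length only,
    # which a real judge would not do.
    return float(min(10, max(1, len(answer.split()))))


# Made-up sample answers from the model under test.
answers = {
    "What is 2 + 2?": "The answer is 4.",
    "Name a prime number.": "7",
}
print(judged_score(answers, toy_judge))
```

In a real setup, the judge function would send the question and answer to the evaluating model with a grading prompt, and the averaged scores would be compared across models.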

According to the results, Phi-2 outperforms Meta's Llama-2-7B model in some areas. A chat version of Phi-2 is also in the pipeline and may address some of the model's existing weaknesses in these areas.

Microsoft announces "Models as a Service"

Phi-2 and Phi-1.5 are now available in the Azure AI model catalog, along with Stable Diffusion, Falcon, CLIP, Whisper V3, BLIP, and SAM. Microsoft is also adding Code Llama from Meta and Nemotron from Nvidia.

Microsoft also announced "Models as a Service": "Pro developers will soon be able to easily integrate the latest AI models such as Llama 2 from Meta, Command from Cohere, Jais from G42, and premium models from Mistral as an API endpoint to their applications. They can also fine-tune these models with their own data without needing to worry about setting up and managing the GPU infrastructure, helping eliminate the complexity of provisioning resources and managing hosting."
