GPT-5 allegedly solves open math problem without human help

GPT-5 solves an open math problem for the first time, and the mathematician behind it shows exactly which line came from which AI. Does science really need this level of transparency?

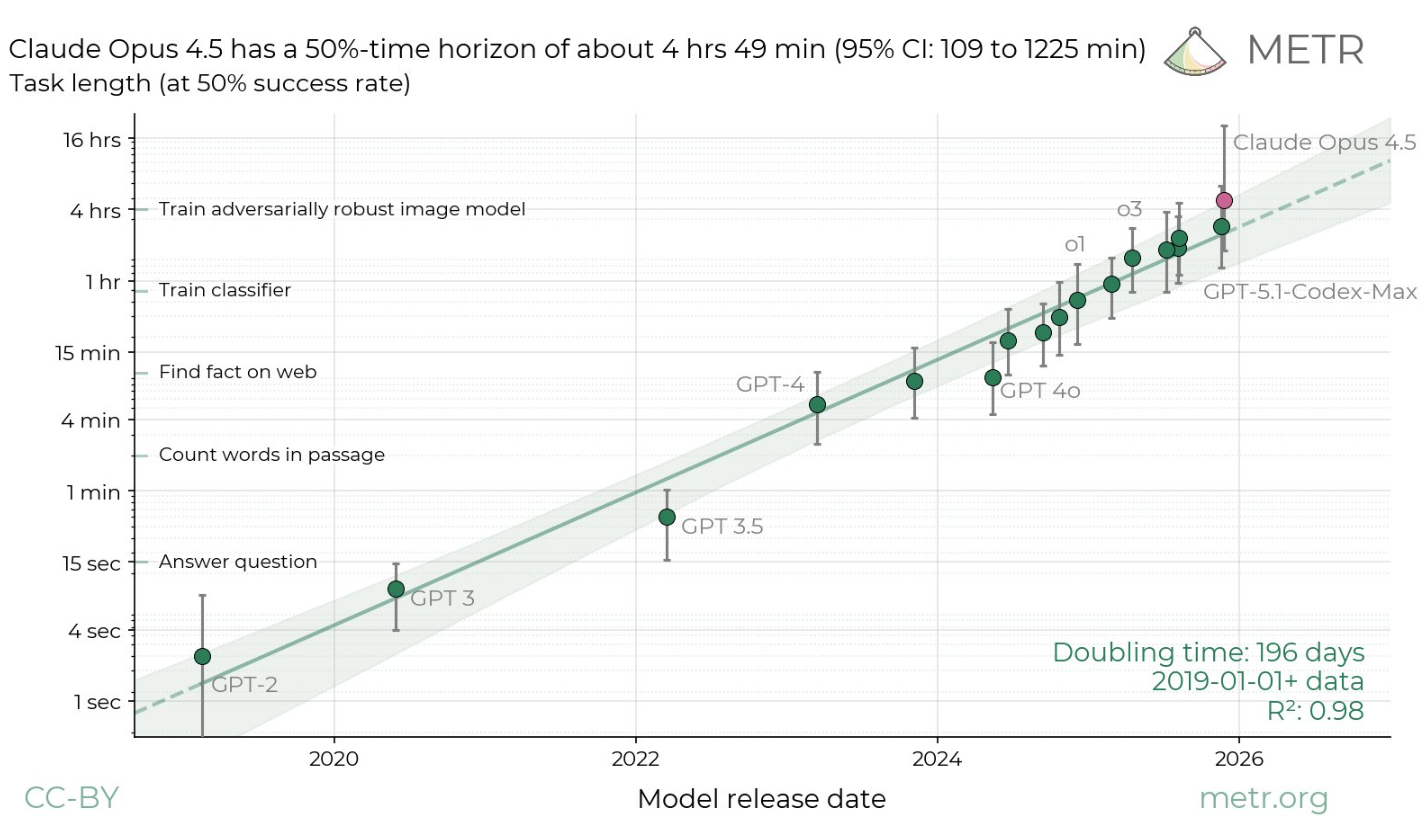

AI research organization METR has released new benchmark results for Claude Opus 4.5. Anthropic's latest model achieved a 50 percent time horizon of roughly 4 hours and 49 minutes, the highest score ever recorded. The time horizon is the length of a task, measured by how long it takes a human to complete, that an AI model can still solve at a given success rate (in this case, 50 percent).

The gap between the two success thresholds is large. At an 80 percent success rate, the time horizon drops to just 27 minutes, about the same as older models, so Opus 4.5 mainly shines on longer tasks. The estimate's upper bound of over 20 hours is likely noise from limited test data, METR says.
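
To see how a time horizon depends on the chosen success rate, here is a minimal sketch. It assumes that success probability follows a logistic curve in the logarithm of task length, and it pins that curve to the two figures reported above (4 hours 49 minutes at 50 percent, 27 minutes at 80 percent). The curve shape, the two-point fit, and all function names are illustrative assumptions, not METR's actual methodology or code.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


def fit_two_points(t50_min: float, t80_min: float) -> tuple[float, float]:
    """Return (intercept, slope) for the assumed model
    logit(success) = intercept - slope * log2(task_minutes),
    chosen so the curve passes through the 50% and 80% horizons."""
    slope = (logit(0.8) - logit(0.5)) / (math.log2(t50_min) - math.log2(t80_min))
    intercept = slope * math.log2(t50_min)  # logit(0.5) is 0 at the 50% horizon
    return intercept, slope


def success_rate(task_minutes: float, intercept: float, slope: float) -> float:
    """Modelled probability of solving a task of the given length."""
    z = intercept - slope * math.log2(task_minutes)
    return 1.0 / (1.0 + math.exp(-z))


def time_horizon(p: float, intercept: float, slope: float) -> float:
    """Task length in minutes at which the modelled success rate equals p."""
    return 2.0 ** ((intercept - logit(p)) / slope)


# Figures quoted in the article, converted to minutes.
T50_MIN = 4 * 60 + 49
T80_MIN = 27

intercept, slope = fit_two_points(T50_MIN, T80_MIN)

if __name__ == "__main__":
    for p in (0.5, 0.8):
        print(f"{int(p * 100)}% horizon: {time_horizon(p, intercept, slope):.0f} min")
```

Under this assumed curve, raising the required success rate from 50 to 80 percent shrinks the horizon by roughly a factor of ten. A shallow slope like this one is what a large gap between the two thresholds looks like.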

Like any benchmark, the METR test has its limits. Most notably, it only covers 14 samples. Shashwat Goel has published a detailed breakdown of its weaknesses.

Google’s new A2UI standard gives AI agents the ability to create graphical interfaces on the fly. Instead of just sending text, AIs can now generate forms, buttons, and other UI elements that blend right into any app.