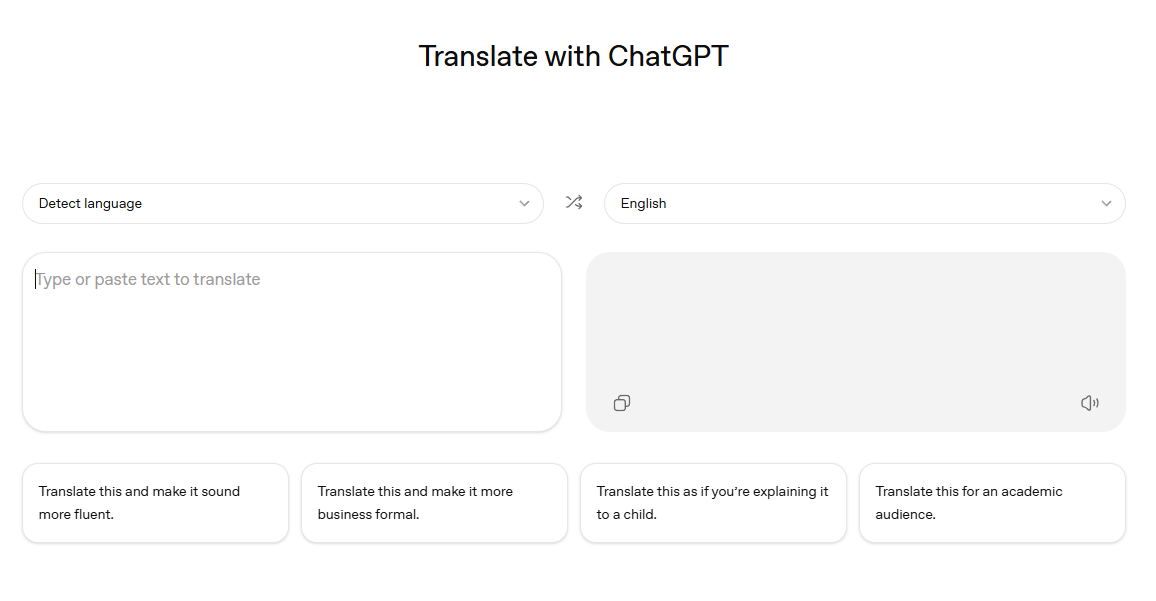

OpenAI has launched a standalone translation tool built on ChatGPT. "ChatGPT Translate" supports more than 25 languages. Like Google Translate or DeepL, its interface consists of two text fields, with the input language detected automatically.

Users can refine a translation with follow-up prompts, for example switching to a business tone or simplifying the text for children. These prompts redirect to the main ChatGPT interface, which suggests the tool is designed mainly as a gateway to the chatbot. OpenAI has not officially announced the tool yet.

Unlike the full ChatGPT, the tool handles only text and appears to impose length limits. During testing, it sometimes returned chatbot-style responses asking for clarification instead of a translation, with no way to reply. This suggests it is essentially a prompt wrapped in a new interface rather than a specialized translation model. For now, ChatGPT itself remains the more capable option.